AI Agents vs. MCP Servers: The Brains and the Bridge of Modern AI

If you've tried building an AI application that does more than just chat, you've probably hit the integration wall. Your AI agent—the "brain" of your app—is smart, but it's stuck in a box. To do anything useful, like update a Salesforce record or create a Jira ticket, it needs to connect to the outside world.

For years, that meant writing custom, brittle API integration code for every single tool. It's slow, a pain to maintain, and a security nightmare.

This is the problem the Model Context Protocol (MCP) was built to solve. Think of MCP as a universal translator for AI. It's an open standard that creates a secure and standardized "bridge" between your AI agent and the external tools it needs to use. It replaces a tangled mess of custom code with a single, clean protocol.

This article breaks down the essential relationship between AI agents (the brains) and MCP servers (the bridge). We’ll cover what each does, how they depend on each other, and what to look for in a great MCP server.

The AI Agent: Your App's Autonomous Brain

An AI agent isn't just a passive chatbot waiting for a prompt. It’s an active problem-solver. You give it a high-level goal—"Summarize our new customer sign-ups from this week and post the results to the #sales Slack channel"—and it independently figures out the necessary steps and tools to get the job done.

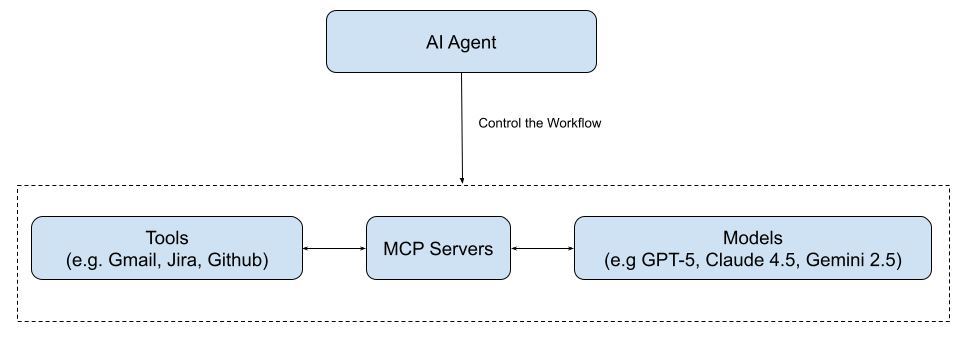

An agent’s core components are:

- A reasoning engine: Usually a powerful LLM like Claude, Llama, or GPT.

- A set of tools: The external functions it can use, like APIs or database queries.

- An orchestration loop: The logic that guides its planning and decision-making.
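To make the three components concrete, here is a minimal sketch of an orchestration loop. Everything in it is an assumption made for illustration: `fake_reasoning_engine` is a hard-coded stand-in for an LLM call, and `count_signups` and `post_to_slack` are hypothetical tools that return canned data instead of calling real APIs.

```python
from typing import Callable

# Hypothetical tools: plain functions standing in for real API calls.
def count_signups(week: str) -> int:
    return {"2025-W01": 42}.get(week, 0)

def post_to_slack(channel: str, text: str) -> str:
    return f"posted to {channel}: {text}"

TOOLS: dict[str, Callable] = {
    "count_signups": count_signups,
    "post_to_slack": post_to_slack,
}

def fake_reasoning_engine(goal: str, history: list[dict]) -> dict:
    """Stand-in for an LLM: chooses the next step from what has happened so far."""
    if not history:
        return {"tool": "count_signups", "args": {"week": "2025-W01"}}
    if len(history) == 1:
        count = history[0]["result"]
        return {
            "tool": "post_to_slack",
            "args": {"channel": "#sales", "text": f"{count} new sign-ups this week"},
        }
    return {"done": True}

def run_agent(goal: str, max_steps: int = 5) -> list[dict]:
    """Orchestration loop: ask for a step, run the tool, feed the result back."""
    history: list[dict] = []
    for _ in range(max_steps):
        step = fake_reasoning_engine(goal, history)
        if step.get("done"):
            break
        result = TOOLS[step["tool"]](**step["args"])
        history.append({"tool": step["tool"], "result": result})
    return history

history = run_agent("Summarize this week's sign-ups and post them to #sales")
for entry in history:
    print(entry)
```

In a real agent, the reasoning engine would be a model call that receives the goal, the tool descriptions, and the history, and the `max_steps` cap keeps a confused model from looping forever.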

But an agent is only as good as the tools it can access. Giving it that access is where things get complicated.

MCP Servers: The Universal Bridge for Tools

A Model Context Protocol (MCP) server is a small, specialized server that acts as a secure gateway to an external tool. Instead of your agent needing to understand the unique quirks of the GitHub API, it only needs to know how to talk to the GitHub MCP server using one standard language.

Anthropic introduced the standard in November 2024, and it is now being adopted across the industry. The architecture is simple: the AI agent (or the application hosting it) acts as the MCP client and communicates with one or more MCP servers, each representing a tool or set of tools.

This design cleanly separates the agent's reasoning from the messy details of tool integration. The MCP server handles the dirty work—authentication, API-specific logic, data formatting—so the agent can focus on thinking.
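Under the hood, that "one standard language" is JSON-RPC 2.0. The sketch below builds the two requests a client typically sends for tools: `tools/list` to discover what a server offers and `tools/call` to invoke one tool. The method names come from the MCP specification; the argument names passed to `create_pull_request` are hypothetical, and the transport and the initial `initialize` handshake are left out for brevity.

```python
import json
from itertools import count
from typing import Optional

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched

def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message

# Ask the server which tools it exposes.
list_request = jsonrpc_request("tools/list")

# Invoke one tool by name. The arguments shown here are illustrative only.
call_request = jsonrpc_request(
    "tools/call",
    {
        "name": "create_pull_request",
        "arguments": {
            "repo": "acme/website",
            "title": "Fix typo in README",
            "head": "fix-typo",
            "base": "main",
        },
    },
)

print(json.dumps(list_request))
print(json.dumps(call_request, indent=2))
```

The agent never sees GitHub's REST endpoints, headers, or pagination rules. It only produces a tool name and a set of arguments, and the server translates that into the authenticated API call.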

AI Agent vs. MCP Server: Brains and Brawn

To be clear: agents and MCP servers aren't competing technologies. They're two parts of a whole. The agent is the decision-maker, and the MCP server provides the capabilities.

| Aspect | AI Agent (The Brain) | MCP Server (The Bridge) |
|---|---|---|
| Role | Reasons, plans, and decides what to do next. | Securely exposes the functions of a specific tool. |
| Function | "I need to create a new pull request on GitHub." | Provides a `create_pull_request` function and handles the authenticated API call. |
| Analogy | A project manager who plans the work. | A specialized contractor who executes a task perfectly. |

An AI agent becomes truly powerful when it has a rich set of MCP servers to call upon, letting it orchestrate workflows across dozens of different applications.

What Makes a Great MCP Server?

MCP provides the blueprint, but the implementation is what matters. A production-ready MCP server needs to be more than a basic API wrapper.

Key Qualities to Look For:

- Handles Authentication Securely: It should use standards like OAuth 2.0 to manage user credentials without ever exposing them to the LLM. This is a must-have for any serious application.

- Fast and Scalable: A good server is built for speed and can handle many concurrent requests, keeping your AI application responsive.

- Provides Clear Tool Definitions: The server must describe its tools with rich metadata so the agent knows exactly what a function does, what parameters it needs, and when to use it. This drastically reduces errors.

- Easy to Deploy and Manage: Whether you self-host it with Docker or connect it to existing MCP clients and AI agents (e.g., ChatGPT or Claude), the server should be straightforward to set up and maintain.
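To show what "clear tool definitions" can look like, here is a sketch of a single tool description. The top-level fields (`name`, `description`, `inputSchema` as JSON Schema) follow the shape MCP uses for tools; the specific parameters are hypothetical and are not taken from any real GitHub MCP server. The small helper `missing_arguments` is also an assumption, included only to show how a server might reject an incomplete call early.

```python
# A tool definition: what the tool is called, when to use it, and what it needs.
CREATE_PULL_REQUEST = {
    "name": "create_pull_request",
    "description": (
        "Open a pull request in a GitHub repository. "
        "Use this after changes have been pushed to a branch."
    ),
    "inputSchema": {
        "type": "object",
        "properties": {
            "repo": {"type": "string", "description": "Repository as owner/name"},
            "title": {"type": "string", "description": "Pull request title"},
            "head": {"type": "string", "description": "Branch containing the changes"},
            "base": {"type": "string", "description": "Branch to merge into"},
        },
        "required": ["repo", "title", "head"],
    },
}

def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the required parameters that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]

# An agent forgot to say which branch holds the changes.
print(missing_arguments(CREATE_PULL_REQUEST, {"repo": "acme/website", "title": "Fix typo"}))
```

The description text matters as much as the schema: it is what the model reads when deciding whether this tool fits the task at hand.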

Solving "Tool Overload" with Progressive Discovery

One of the toughest problems in building agents is "tool overload." An agent with access to thousands of functions often gets paralyzed by choice and struggles to pick the right one.

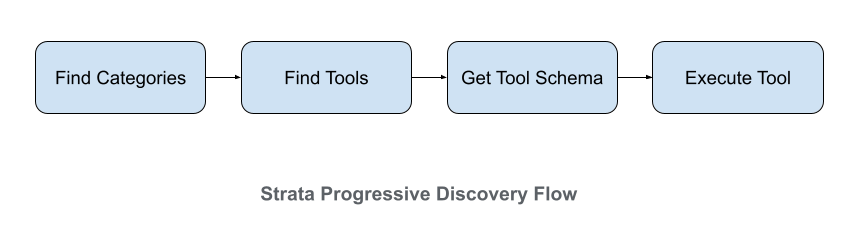

This is where smart MCP server design makes all the difference. A great example is Klavis AI's Strata, an open-source MCP server built to solve this exact problem. Instead of dumping every possible tool on the agent at once, Strata uses a progressive discovery approach.

Here’s how it works:

- High-Level Intent: The agent signals its goal, like "manage a GitHub repository."

- Navigate Categories: Strata shows the agent the main categories available: `Issues`, `Pull Requests`, `Actions`.

- Select a Specific Action: Once the agent picks a category, Strata reveals just the relevant actions, like `list_pull_requests` or `create_pull_request`.

- Execute with Precision: Only then does Strata provide the detailed API parameters, ensuring the call is efficient and correct.
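The four steps above can be sketched as a small layered lookup. This is not Strata's actual API: the catalog contents and the function names `list_categories`, `list_actions`, and `get_action_details` are hypothetical, chosen only to illustrate the idea that each step reveals one more level of detail.

```python
# Hypothetical catalog: server -> category -> action -> parameter details.
CATALOG = {
    "github": {
        "Issues": {
            "list_issues": {"required": ["repo"]},
            "create_issue": {"required": ["repo", "title"]},
        },
        "Pull Requests": {
            "list_pull_requests": {"required": ["repo"]},
            "create_pull_request": {"required": ["repo", "title", "head", "base"]},
        },
        "Actions": {
            "list_workflow_runs": {"required": ["repo"]},
        },
    }
}

def list_categories(server: str) -> list[str]:
    """Step 2: show only the top-level categories."""
    return list(CATALOG[server])

def list_actions(server: str, category: str) -> list[str]:
    """Step 3: show only the actions inside the chosen category."""
    return list(CATALOG[server][category])

def get_action_details(server: str, category: str, action: str) -> dict:
    """Step 4: reveal the parameters for the one action the agent picked."""
    return CATALOG[server][category][action]

# Step 1: the agent's intent ("manage a GitHub repository") selects the server.
categories = list_categories("github")
actions = list_actions("github", "Pull Requests")
details = get_action_details("github", "Pull Requests", "create_pull_request")

print(categories)
print(actions)
print(details)
```

At no point does the agent hold the full catalog in its context. It sees three category names, then two action names, then one parameter list, which is why the approach scales to very large tool sets.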

This guided process mirrors how a human would use a complex app, and it dramatically improves performance. On industry benchmarks, this method has been shown to outperform official MCP servers on complex workflows, allowing a single server to expose thousands of tools without confusing the agent.

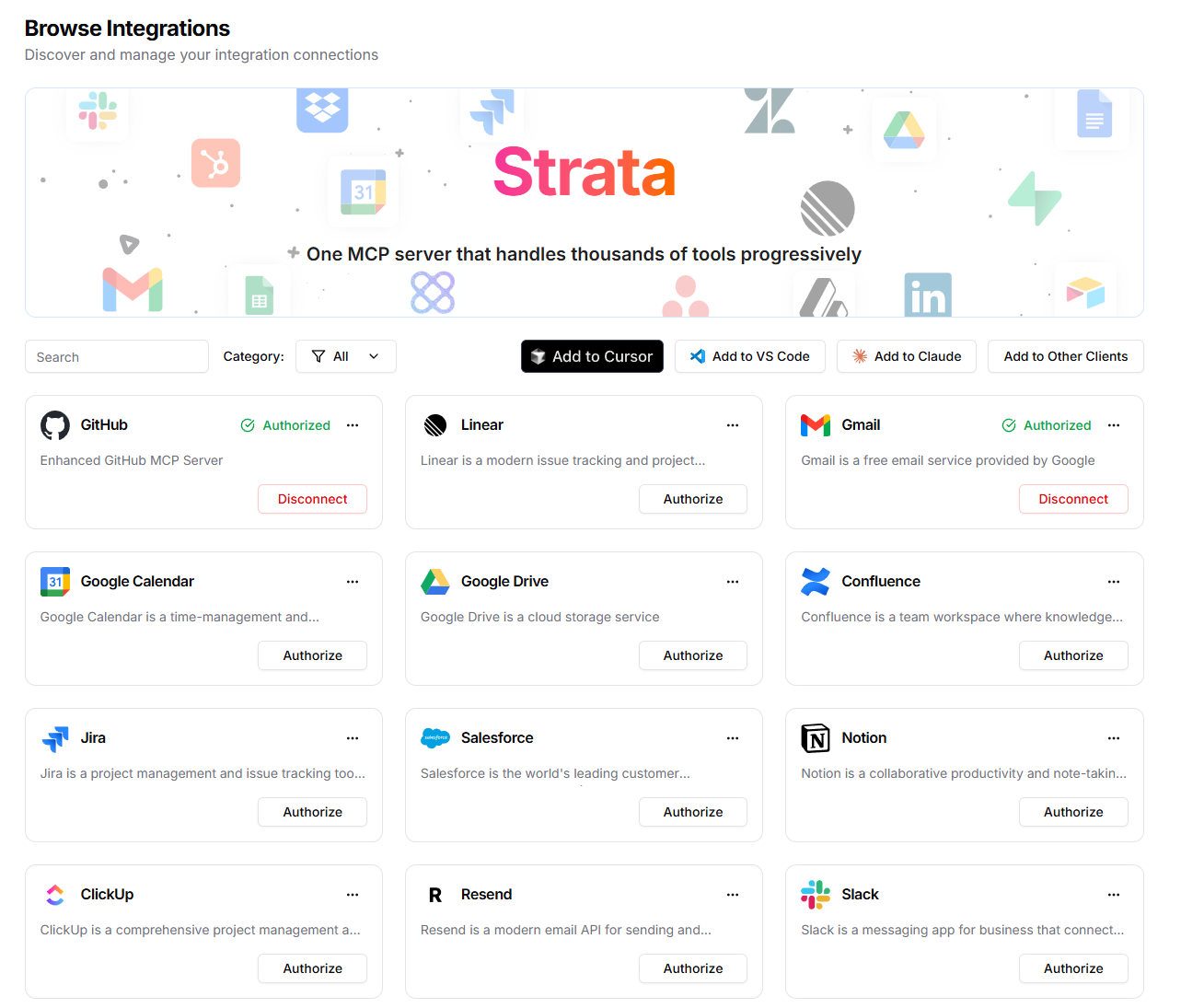

Klavis Strata MCP is also easy to install and use. You can get started with a few clicks in the UI or with a few lines of code through the API; see the Strata MCP documentation. Below is an example of how to set up Strata MCP through the UI:

Conclusion: Give Your Brain a Bridge

AI agents and MCP servers are the foundation of the next generation of software. One can't function effectively without the other.

- AI Agents are the brains, providing the reasoning to tackle complex problems.

- MCP Servers are the bridge, giving those brains a secure and reliable connection to the real world.

For developers, this new stack is a game-changer. By pairing a powerful agent with a well-designed MCP infrastructure, you can finally build the capable, intelligent applications you've envisioned.

Frequently Asked Questions (FAQs)

1. How is an MCP server different from a regular API? A traditional API is a general-purpose door into an application. An MCP server is a specialized door built specifically for AI agents. It provides a standard format, rich metadata for tools, and a centralized security model that drastically simplifies the work of integrating new tools.

2. Can I build my own MCP server? Absolutely. The protocol is open, and SDKs exist for languages like Python and TypeScript. You can build servers for your company's internal tools or other custom data sources. For common platforms, however, using a production-ready solution will save you time and headaches.

3. Do you need an AI agent to use an MCP server? While MCP is designed for AI agents, any program can act as an MCP client. But the real magic happens when you connect it to an LLM that can autonomously decide which tools to use and when.

4. How does MCP handle security? A good MCP server acts like a vault. It securely stores API keys and manages OAuth tokens on the server side, so they are never exposed to the LLM or client application. This gives you fine-grained control over what your AI is allowed to do.
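As a rough illustration of the "vault" idea, the sketch below keeps a token on the server side and checks a per-user allowlist before running a tool. All names here (`TokenVault`, `ALLOWED_TOOLS`, `fake_github_api`, `handle_tool_call`) are hypothetical, the in-memory dictionary stands in for a real encrypted secret store, and the result shape is only loosely modeled on an MCP tool result.

```python
class TokenVault:
    """Server-side credential store (in-memory here; use a real secret store in practice)."""

    def __init__(self) -> None:
        self._tokens: dict[str, str] = {}

    def store(self, user_id: str, token: str) -> None:
        self._tokens[user_id] = token

    def get(self, user_id: str) -> str:
        return self._tokens[user_id]

# Fine-grained control: which tools each user's agent may call.
ALLOWED_TOOLS = {"alice": {"list_pull_requests"}}

def fake_github_api(token: str, repo: str) -> list[str]:
    """Hypothetical upstream call; a real server would send an HTTP request here."""
    if not token:
        raise PermissionError("missing token")
    return [f"{repo}#1", f"{repo}#2"]

def handle_tool_call(vault: TokenVault, user_id: str, tool: str, arguments: dict) -> dict:
    """Check permissions, attach the credential server-side, return only the result."""
    if tool not in ALLOWED_TOOLS.get(user_id, set()):
        return {"isError": True, "content": [{"type": "text", "text": f"{tool} is not permitted"}]}
    token = vault.get(user_id)  # used here and never returned to the client
    pull_requests = fake_github_api(token, arguments["repo"])
    return {"isError": False, "content": [{"type": "text", "text": ", ".join(pull_requests)}]}

vault = TokenVault()
vault.store("alice", "secret-token-123")

allowed = handle_tool_call(vault, "alice", "list_pull_requests", {"repo": "acme/website"})
denied = handle_tool_call(vault, "alice", "create_pull_request", {"repo": "acme/website"})
print(allowed)
print(denied)
```

The model only ever sees the text in `content`. The token is looked up and used inside the server, so a prompt injection cannot coax the model into revealing a credential it never received.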