IDC predicts that by 2027, 75% of enterprises will adopt a hybrid approach to optimize AI workload placement, cost, and performance. For Model Context Protocol (MCP) deployments specifically, the choice between cloud and on-premises infrastructure isn't just about where servers live—it's about how you architect trust, control, and reliability into your AI systems.

This article examines the technical architecture and business considerations behind on-premises MCP deployments, with particular attention to the security and access control requirements that enterprise AI applications demand. We'll explore how companies like Klavis AI are building on-premises solutions that combine the standardization benefits of MCP with the security guarantees that regulated industries require.

The Enterprise MCP Deployment Challenge

Model Context Protocol has fundamentally changed how AI applications connect to external tools and data sources. Rather than building custom integrations for every service your AI agents need to access, MCP provides a standardized client-server architecture where AI applications (MCP clients) communicate with external capabilities through MCP servers.
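
Here is what that exchange looks like on the wire. MCP messages are JSON-RPC 2.0, and a client invokes a tool with the `tools/call` method, passing the tool's `name` and an `arguments` object. The sketch below only builds the request. The tool name `search_tickets` and its arguments are hypothetical.

```python
import json
from itertools import count

# Monotonically increasing request IDs; JSON-RPC uses them to match responses to requests.
_request_ids = count(1)

def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)

# Hypothetical tool exposed by a CRM integration server.
payload = build_tool_call("search_tickets", {"customer_id": "C-1042", "status": "open"})
print(payload)
```

The same message shape works for any tool on any MCP server. That uniformity is what removes the need for per-service integrations on the client side.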

The protocol itself is elegant in its simplicity. But deploying MCP infrastructure at enterprise scale introduces complexities that hobbyist implementations never encounter:

- Multi-tenancy authentication: How do you ensure that different users can only access their own data through shared MCP servers?

- Audit and compliance: Who accessed what data, when, and why—with immutable logs that satisfy regulators?

- Performance at scale: Can your infrastructure handle hundreds of AI agents making thousands of tool calls per hour?

- Security boundaries: How do you prevent one compromised agent from accessing data across your entire organization?

These aren't theoretical concerns. More than six months after MCP's debut in late 2024, enterprises remain cautious about adoption. CTOs and IT leaders face the hard reality of moving from proof-of-concept to production deployment.

Architectural Components of Enterprise On-Premises MCP Systems

Before we dive into the specifics of the architecture, one point matters: an on-premises deployment should be easy to install and manage. Klavis AI provides an on-premises MCP solution that can be set up with minimal configuration. Enterprises can get started quickly while still benefiting from robust security and access control features. Below is a quick demo.

* Short demo of Klavis AI on-prem deployment

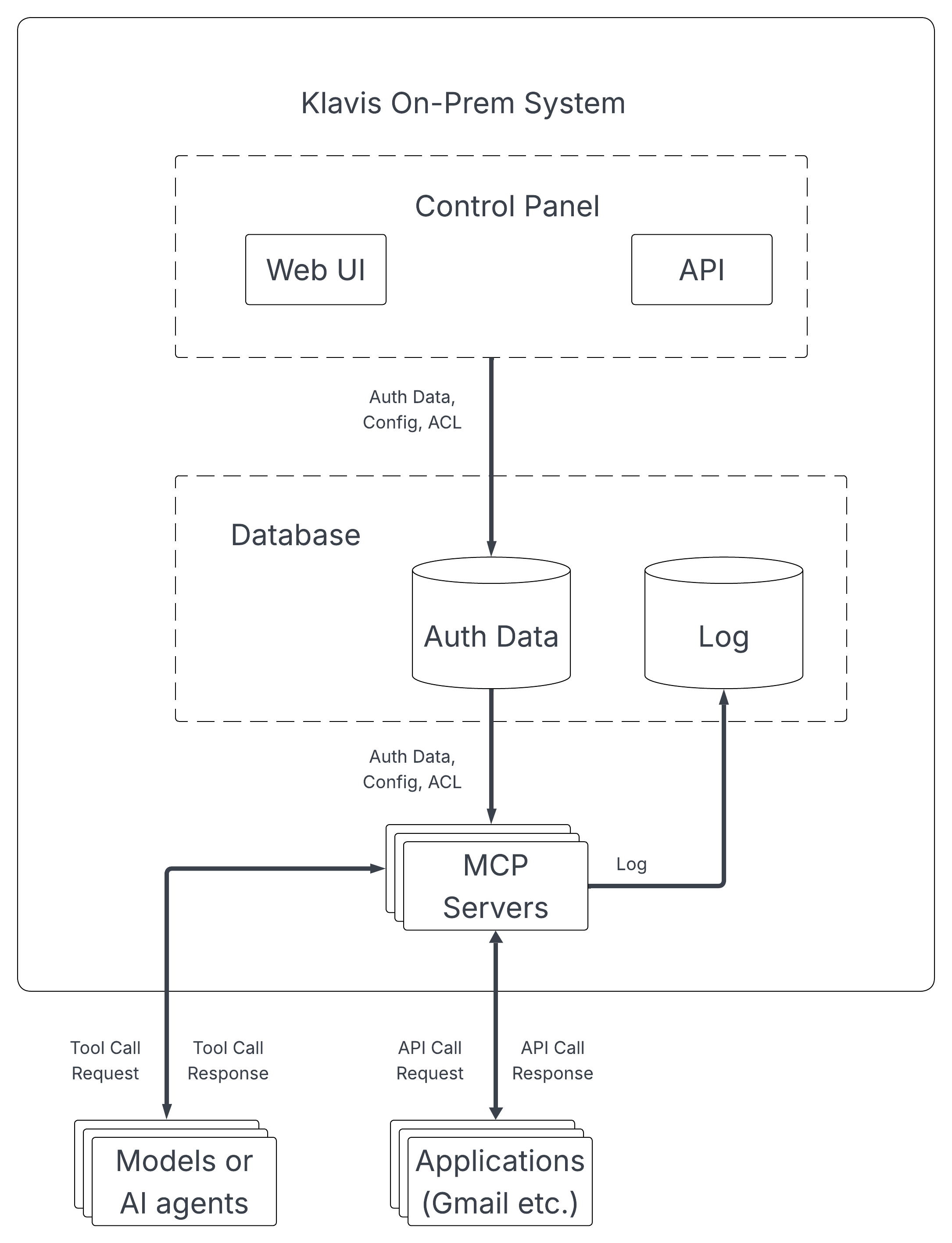

An enterprise-grade on-premises MCP deployment requires several integrated components working together to provide secure, scalable, and auditable AI tool access. Klavis AI's on-premises architecture provides a clear blueprint for how these components fit together.

Control Panel: Web UI and API Layer

The control panel serves as the administrative interface for your MCP infrastructure. This includes both a web-based user interface and a programmatic API that IT administrators use to:

- Configure which MCP servers are available to which users or teams

- Manage OAuth credentials and API tokens for external service integrations

- Monitor system health, performance metrics, and error rates

- Review audit logs and access patterns

The web UI makes day-to-day operations accessible to non-technical stakeholders, while the API enables automation and integration with existing IT management tools. This dual-interface approach means security teams can quickly adjust permissions through the UI during incidents, while DevOps teams can script routine administrative tasks.

Database Layer: Authentication and Logging

The database component handles two critical functions in Klavis AI's architecture:

Authentication Data Storage: This database securely stores user credentials, OAuth tokens, API keys, and permission mappings. When an AI agent attempts to use an MCP server, the system validates the request against this database to ensure the user has appropriate permissions.

Comprehensive Logging: Every MCP tool call, response, error, and access attempt gets recorded with timestamps, user identifiers, and request details. This creates the audit trail that compliance teams need and helps security teams identify suspicious patterns.
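
A common way to make an audit trail tamper-evident is to chain each record to the hash of the previous one. Editing or deleting an earlier entry then breaks the chain from that point on. The sketch below is a generic illustration of hash chaining, not Klavis AI's logging format. The class and field names are assumptions.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # Placeholder "previous hash" for the first record.

def _digest(body: dict) -> str:
    # Hash a canonical (sorted-key) serialization so verification is deterministic.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

@dataclass
class AuditLog:
    """Append-only, hash-chained log of MCP tool calls (illustrative only)."""
    records: list = field(default_factory=list)

    def append(self, user_id: str, server: str, tool: str, outcome: str) -> dict:
        prev_hash = self.records[-1]["hash"] if self.records else GENESIS_HASH
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "server": server,
            "tool": tool,
            "outcome": outcome,
            "prev_hash": prev_hash,
        }
        record = {**body, "hash": _digest(body)}
        self.records.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash; an edited, reordered, or removed earlier record breaks the chain."""
        prev_hash = GENESIS_HASH
        for record in self.records:
            body = {k: v for k, v in record.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or record["hash"] != _digest(body):
                return False
            prev_hash = record["hash"]
        return True

log = AuditLog()
log.append("alice", "crm-server", "search_tickets", "success")
log.append("bob", "email-server", "send_email", "denied")
print(log.verify())  # True
log.records[0]["user_id"] = "mallory"  # Simulated tampering with an earlier record
print(log.verify())  # False
```

The chain alone does not detect truncation of the newest records. Systems that need that guarantee usually also copy the latest hash to storage the logging service cannot overwrite.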

This separation of authentication data and logs follows security best practices by isolating critical credential information from high-volume operational data. The architecture diagram shows how authentication data flows from the control panel through the database to the MCP servers, while logs flow back from the servers to the database for centralized storage.

MCP Server Layer: Tool Execution Environment

The MCP server layer contains the actual servers that expose tools to AI applications. These might include database query servers, email servers, CRM integration servers, or file system servers that allow AI agents to access documents and knowledge bases.

Each MCP server operates as an independent process that communicates with the control panel for authentication and sends execution logs to the logging database. This architectural separation means individual servers can be updated, scaled, or replaced without disrupting the entire system.

In Klavis AI's on-premises deployment, MCP servers retrieve authentication data from the central database before executing tool calls. Each server should be able to retrieve only the credentials for its own integration. If that holds, a compromised MCP server gives an attacker access only to the narrow set of capabilities that server provides, not to credentials for other services.
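
This isolation depends on the credential store releasing credentials per server and per user. Here is a minimal sketch of that scoping rule. It assumes each MCP server authenticates to the store with its own identity. `ScopedCredentialStore`, the server names, and the token values are all hypothetical.

```python
class CredentialAccessDenied(Exception):
    pass

class ScopedCredentialStore:
    """Hypothetical central store that releases credentials per (server, user) scope."""

    def __init__(self):
        # Maps server identity -> the single external service it integrates with.
        self._server_service = {}
        # Maps (service, user_id) -> credential (e.g. an OAuth access token).
        self._credentials = {}

    def register_server(self, server_id: str, service: str) -> None:
        self._server_service[server_id] = service

    def store(self, service: str, user_id: str, credential: str) -> None:
        self._credentials[(service, user_id)] = credential

    def fetch(self, server_id: str, service: str, user_id: str) -> str:
        # A server may only read credentials for the service it was registered for.
        if self._server_service.get(server_id) != service:
            raise CredentialAccessDenied(f"{server_id} may not read {service} credentials")
        try:
            return self._credentials[(service, user_id)]
        except KeyError:
            raise CredentialAccessDenied(f"no {service} credential for {user_id}") from None

store = ScopedCredentialStore()
store.register_server("gmail-mcp", "gmail")
store.register_server("slack-mcp", "slack")
store.store("gmail", "alice", "gmail-token-alice")
store.store("slack", "alice", "slack-token-alice")

print(store.fetch("gmail-mcp", "gmail", "alice"))  # gmail-token-alice
try:
    store.fetch("gmail-mcp", "slack", "alice")  # A compromised Gmail server probing Slack
except CredentialAccessDenied as err:
    print(err)  # gmail-mcp may not read slack credentials
```

Keying credentials by user as well as by service also covers the multi-tenancy concern. A server acting for one user cannot retrieve another user's token.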

Integration Points: AI Agents and External Applications

The on-premises MCP system connects to two external components:

AI Models and Agents: These are the LLM-powered applications (whether running locally or in the cloud) that act as MCP clients. They send tool call requests to your on-premises MCP servers and receive responses containing the data or actions they requested.

External Applications: These are the actual services your MCP servers integrate with—Gmail, Slack, Salesforce, internal databases, or proprietary APIs. MCP servers act as secure proxies that validate requests before forwarding them to these external systems.

This architecture creates clear security boundaries. Your AI agents never receive raw credentials for external services—they only interact with MCP servers that enforce access policies. External services only see requests from your MCP servers, not from potentially untrusted AI models.
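
Consider a tool handler inside an MCP server. The agent supplies only tool arguments, and the server resolves the user's credential itself. The response carries data in MCP's tool-result shape (a `content` list plus an `isError` flag), never the token. This is a minimal sketch. `fake_gmail_api`, `USER_TOKENS`, and `handle_search_email` are hypothetical stand-ins for a real integration.

```python
from typing import Callable

# Hypothetical per-user credentials, resolved server-side (never sent to the agent).
USER_TOKENS = {"alice": "ya29.secret-token-for-alice"}

def fake_gmail_api(token: str, query: str) -> list:
    """Stand-in for the external service; a real server would call the provider's API."""
    if not token.startswith("ya29."):
        raise PermissionError("invalid token")
    return [{"subject": f"Re: {query}", "from": "bob@example.com"}]

def _error(text: str) -> dict:
    return {"isError": True, "content": [{"type": "text", "text": text}]}

def handle_search_email(user_id: str, arguments: dict,
                        external_call: Callable[[str, str], list] = fake_gmail_api) -> dict:
    # user_id must come from the authenticated session, not from agent-supplied arguments.
    # 1. Validate the agent's arguments before touching the external system.
    query = arguments.get("query")
    if not isinstance(query, str) or not query.strip():
        return _error("query is required")
    # 2. Resolve the credential on the server side, keyed by the authenticated user.
    token = USER_TOKENS.get(user_id)
    if token is None:
        return _error("no linked account")
    # 3. Forward the request and return only the data, never the token.
    messages = external_call(token, query)
    text = "\n".join(f"{m['from']}: {m['subject']}" for m in messages)
    return {"isError": False, "content": [{"type": "text", "text": text}]}

result = handle_search_email("alice", {"query": "Q3 invoice"})
print(result["content"][0]["text"])  # bob@example.com: Re: Q3 invoice
```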

Role-Based Access Control: The Security Foundation

One crucial component of enterprise MCP deployments is role-based access control (RBAC). Without granular permission management, MCP servers become security vulnerabilities rather than productivity tools.

Implementing RBAC in On-Premises MCP Deployments

An effective RBAC system for MCP infrastructure includes several layers:

User and Role Definition: Organizations define roles that correspond to job functions—customer support agents, sales representatives, engineering teams, executives. Each role receives a permission set that specifies which MCP servers and which specific tools within those servers they can access.

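Identity Management: Every user, agent, and service needs a distinct identity that the system can recognize. Each identity also holds credentials, such as API keys or OAuth tokens, for authenticating to external services. Distinct identities make it possible to manage, trust, and rotate or revoke those credentials over their whole lifecycle.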
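
These layers fit into a small data model. Each role maps to the MCP servers it may use and, within each server, to the tools it may call. Anything not granted is denied. This is a minimal sketch with hypothetical role, server, and tool names. It is not the schema used by Klavis AI's RBAC panel.

```python
from dataclasses import dataclass, field

@dataclass
class Role:
    name: str
    # server name -> set of allowed tool names; "*" allows every tool on that server.
    grants: dict = field(default_factory=dict)

ROLES = {
    "support_agent": Role("support_agent", {"crm": {"search_tickets", "get_ticket"}}),
    "sales_rep": Role("sales_rep", {"crm": {"search_accounts"}, "email": {"send_email"}}),
    "engineer": Role("engineer", {"github": {"*"}}),
}

USER_ROLES = {"alice": {"support_agent"}, "bob": {"sales_rep", "support_agent"}}

def is_allowed(user_id: str, server: str, tool: str) -> bool:
    """Deny by default: allow only if one of the user's roles grants this server/tool."""
    for role_name in USER_ROLES.get(user_id, set()):
        allowed_tools = ROLES[role_name].grants.get(server, set())
        if tool in allowed_tools or "*" in allowed_tools:
            return True
    return False

print(is_allowed("alice", "crm", "search_tickets"))  # True
print(is_allowed("alice", "email", "send_email"))    # False
print(is_allowed("bob", "email", "send_email"))      # True
```

Users with several roles receive the union of their grants. This matches how most RBAC systems combine role memberships.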

Klavis AI's on-premises deployment includes a dedicated role-based access control panel that makes this functionality accessible to administrators without requiring custom code. Security teams can define permissions visually, review access patterns through dashboards, and modify policies as organizational needs change.

Security Advantages of On-Premises MCP Deployments

Reduced Attack Surface

Cloud-based MCP deployments expose more of your infrastructure to internet-facing threats. Cloud providers implement extensive security measures. Even so, cloud-hosted MCP servers are typically reachable over public networks unless you add private networking on top.

On-premises deployments eliminate many of these vectors by keeping MCP infrastructure behind corporate firewalls. External attackers must first breach your network perimeter before they can even attempt to interact with MCP servers—a significantly higher barrier.

Data Residency and Control

On-premises infrastructure offers complete control over where data is stored, how it's processed, and who can access it physically. All storage and processing remain within the organization's own network perimeter. For organizations in healthcare, finance, government, or other regulated sectors, this control isn't optional—it's mandated by compliance requirements.

Customization and Integration

On-premises deployments offer unrestricted ability to customize MCP server implementations to match your specific security requirements, integrate with existing identity providers, and optimize for your particular workload patterns. You control the entire stack from hardware to application layer.

This flexibility proves particularly valuable when integrating MCP infrastructure with existing enterprise systems. Rather than adapting your security policies to match cloud provider limitations, you can implement exactly the architecture your security team requires.

Performance Optimization in On-Premises MCP Deployments

Network Architecture

Properly architected on-premises MCP deployments leverage local network speeds to minimize latency. By placing MCP servers on the same network segments as the databases and APIs they access, you eliminate internet latency entirely.

| Network Path | Typical Latency |
|---|---|
| Same data center (local network) | < 1ms |
| Same-region cloud provider | 5-20ms |
| Cross-region cloud provider | 50-150ms |
| International cloud connection | 100-300ms |

When AI agents make dozens of tool calls to satisfy a single user request, these milliseconds compound. Local network deployment can reduce total request time from seconds to hundreds of milliseconds—the difference between responsive and frustratingly slow user experiences.
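
The compounding is simple arithmetic when tool calls run one after another. Total network overhead is roughly the number of calls times the per-call latency. The sketch below uses the upper bound or midpoints of the ranges in the table above. It also assumes 30 sequential tool calls per user request. It ignores tool execution and model inference time, which add to every path.

```python
# Assumed per-call network latencies (ms), taken from the table above.
LATENCY_MS = {
    "same data center": 1.0,      # upper bound of "< 1ms"
    "same-region cloud": 12.5,    # midpoint of 5-20ms
    "cross-region cloud": 100.0,  # midpoint of 50-150ms
    "international": 200.0,       # midpoint of 100-300ms
}

def network_overhead_ms(tool_calls: int, per_call_ms: float) -> float:
    """Network time for tool calls issued one after another (no parallelism)."""
    return tool_calls * per_call_ms

CALLS_PER_REQUEST = 30  # Assumption: one agent turn that chains 30 tool calls.
for path, ms in LATENCY_MS.items():
    print(f"{path:>20}: {network_overhead_ms(CALLS_PER_REQUEST, ms):7.1f} ms")
```

Under these assumptions, 30 sequential calls cost about 3 seconds of pure network time across regions. The same calls cost about 30 ms on a local network. Calls that an agent can issue in parallel shrink both figures.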

Resource Allocation and Scaling

On-premises deployments give you direct control over resource allocation. You can dedicate specific hardware to high-priority MCP servers and allocate CPU, memory, and storage based on actual usage patterns. You can also provision for known peak periods without incurring hourly charges for capacity that sits idle between peaks.

Containerization has become standard practice. Packaging MCP servers as Docker containers encapsulates all dependencies and runtime configurations, removing "it works on my machine" issues and ensuring consistency from development through to production.

Making the Deployment Decision

Choosing between cloud and on-premises MCP deployment requires careful analysis of your specific requirements, existing infrastructure, and long-term AI strategy. Organizations should evaluate:

- Regulatory and Compliance Requirements: Do data residency rules or audit requirements favor on-premises deployment?

- Cost Modeling: What will your MCP infrastructure cost at production scale over 3-5 years under different deployment models?

- Performance Requirements: How sensitive are your AI applications to tool execution latency?

- Security Posture: Does your security team have stronger controls and monitoring capabilities for on-premises or cloud infrastructure?

- Operational Capabilities: Does your IT organization have the expertise and resources to operate on-premises AI infrastructure?

The reality for many enterprises is that successful AI deployments leverage both cloud and on-premises infrastructure strategically, placing workloads where they make the most technical and economic sense.

Frequently Asked Questions

How does on-premises MCP deployment compare to cloud hosting for cost at enterprise scale?

For sustained workloads with consistent utilization, on-premises infrastructure typically offers better economics after 12-18 months. The fixed nature of capital expenditure combined with optimized utilization makes on-premises more cost-efficient over time, while cloud costs scale linearly with usage. Organizations should perform total cost of ownership analysis factoring in hardware costs, operational expenses, and long-term scalability.
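
A first-pass break-even estimate compares two cumulative cost curves. On-premises has an upfront outlay plus a monthly operating cost, while cloud is a recurring monthly charge. Every figure below is a made-up placeholder, not a benchmark. The model also ignores hardware refresh cycles and price changes, so substitute your own quotes before drawing conclusions.

```python
import math
from typing import Optional

def breakeven_month(capex: float, onprem_monthly: float, cloud_monthly: float) -> Optional[int]:
    """First month m in which capex + onprem_monthly * m <= cloud_monthly * m.

    Returns None if on-prem never catches up (monthly cloud cost is not higher).
    """
    monthly_savings = cloud_monthly - onprem_monthly
    if monthly_savings <= 0:
        return None
    return math.ceil(capex / monthly_savings)

# Placeholder figures (USD), purely illustrative.
capex = 120_000          # servers, storage, networking
onprem_monthly = 4_000   # power, space, support contracts, staff time
cloud_monthly = 12_000   # equivalent steady-state cloud spend

print(breakeven_month(capex, onprem_monthly, cloud_monthly))  # 15
```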

What are the minimum infrastructure requirements for deploying an on-premises MCP system?

The hardware requirements include modern multi-core processors (Intel Xeon or AMD EPYC with 8+ cores), sufficient memory for containerized workloads, database infrastructure for authentication and logging, network bandwidth for MCP traffic, and storage for audit logs. The specific requirements scale based on the number of concurrent users and MCP servers you plan to deploy.

How does role-based access control work with AI agents in MCP deployments?

RBAC for AI agents operates similarly to human user access control but with higher granularity. The system validates every tool call against permission policies before execution, ensuring agents only access data appropriate to the user's role. Klavis AI's on-premises deployment includes a dedicated RBAC control panel that integrates with enterprise identity providers, allowing security teams to define permissions at the server, tool, and parameter levels.
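
Parameter-level rules go one step beyond the server and tool check: the same tool can be allowed for some argument values and denied for others. Below is a minimal sketch that expresses policy as one predicate per (role, server, tool) and evaluates it before the call is forwarded. The roles, tools, and constraints are hypothetical.

```python
from typing import Callable, Dict, Tuple

# (role, server, tool) -> predicate over the tool-call arguments.
Policy = Dict[Tuple[str, str, str], Callable[[dict], bool]]

POLICY: Policy = {
    # Support agents may search CRM tickets, but only in the EMEA region.
    ("support_agent", "crm", "search_tickets"):
        lambda args: args.get("region") == "EMEA",
    # Sales reps may send email, but only to addresses in the company domain.
    ("sales_rep", "email", "send_email"):
        lambda args: str(args.get("to", "")).endswith("@example.com"),
}

def authorize(role: str, server: str, tool: str, arguments: dict) -> bool:
    """Deny unless a rule exists for this role/server/tool and its predicate accepts the arguments."""
    rule = POLICY.get((role, server, tool))
    return rule is not None and rule(arguments)

print(authorize("support_agent", "crm", "search_tickets", {"region": "EMEA"}))  # True
print(authorize("support_agent", "crm", "search_tickets", {"region": "APAC"}))  # False
print(authorize("sales_rep", "email", "send_email", {"to": "cfo@rival.com"}))   # False
```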

Can on-premises MCP deployments integrate with cloud-based AI models?

Yes. Your MCP infrastructure can remain on-premises while AI models run in the cloud. MCP's remote transport (Streamable HTTP) runs over standard HTTP. AI applications hosted anywhere can therefore connect to your on-premises MCP servers through a VPN or another secured connection. This hybrid approach keeps sensitive data processing on-premises while using cloud compute for AI inference.

Interested in learning more about Klavis AI's on-premises MCP solutions? Contact our team to discuss your enterprise AI infrastructure needs.