Introduction: The Integration Gridlock in Modern AI Development

AI developers face a paralyzing challenge known as the "N x M integration problem," where every new AI model requires custom code to connect with every external tool. This combinatorial explosion of work is slow, expensive, and creates a mountain of technical debt that stifles innovation. The Model Context Protocol (MCP) offers an elegant solution—a universal standard that finally decouples models from tools. This article explores how MCP solves this integration nightmare and how new platforms are making it scalable for the real world.

The N x M Nightmare: A Developer's Dilemma

Let's be honest: building API integrations for AI is a grind. It's a world away from the exciting work of prompt engineering and model fine-tuning. Before MCP, connecting a new AI model to your company's toolset was a painful, repetitive cycle.

This old way of doing things is plagued with critical flaws:

- Multiplicative Complexity and Cost: The most obvious issue is the sheer number of integrations. The work grows with the product of models and tools (N x M), not with their sum. As your company adopts more models or needs to connect to more tools, your maintenance burden explodes. Every API has its own authentication method, its own data schema, and its own rate limits, turning your codebase into a fragile patchwork of custom logic.

- Massive Development Overhead: Each integration is its own mini-project. Developers spend countless hours reading arcane API documentation, writing boilerplate code for authentication, and transforming data to fit the model's expected input. This is time that could be spent building core features, but instead, it's sunk into the plumbing of connectivity.

- The Constant Threat of Breakage: Third-party APIs are not static. A minor, unannounced change to a Salesforce endpoint or a modification in the GitHub API can bring your application to a grinding halt. Without a standardized contract, you are perpetually at the mercy of other companies' development cycles, leading to a reactive and stressful maintenance loop.

- Vendor Lock-In and a Fragmented Ecosystem: This lack of a common language has kept the AI world siloed. An integration built for OpenAI's function-calling API won't work with Anthropic's tool use feature, and vice versa. This forces developers to bet on a single ecosystem, stifling competition and making it difficult to switch to a better or more cost-effective model down the line.

To build the next generation of AI agents—ones that can truly act as autonomous assistants—we desperately needed to move from this N x M mess to a more logical and scalable N + M architecture.

MCP: The Universal Translator for the AI Ecosystem

Introduced by Anthropic in late 2024, the Model Context Protocol (MCP) is an open-source standard designed to be the definitive solution to the integration problem. It provides a universal interface that allows any AI application to discover, communicate with, and securely use any external tool or data source.

The genius of MCP lies in its simplicity. It operates on a client-server architecture built on top of the battle-tested and language-agnostic JSON-RPC 2.0 protocol.

- MCP Clients: These are embedded within your AI application (the "N" models). The AI only needs to learn to speak one language: MCP.

- MCP Servers: These act as wrappers around your external tools, databases, and APIs (the "M" applications). Each tool only needs one MCP server to make its capabilities available to the entire AI ecosystem.
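To make the client-server exchange concrete, here is a minimal sketch of the JSON-RPC 2.0 messages an MCP client might send. The method names `tools/list` and `tools/call` come from the MCP specification; the tool name `create_issue` and its arguments are hypothetical, and the helper `jsonrpc_request` is an illustration rather than part of any SDK.

```python
import json
from itertools import count

# Monotonic request ids; JSON-RPC 2.0 pairs each response with its request id.
_ids = count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request as a plain dict (illustrative helper)."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name. "create_issue" and its arguments are hypothetical.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "create_issue", "arguments": {"title": "Login page returns 500"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

Because every server accepts the same message shapes, the client code above does not change when the tool behind the server does.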

The shift is dramatic and powerful:

| Before MCP (N x M Problem) | After MCP (N + M Solution) |
|---|---|
| Each model needs a custom connector for each tool. | Each model implements a single, standard MCP client. |
| Each tool requires a unique API wrapper for each model. | Each tool is exposed via a single, reusable MCP server. |
| 4 models x 10 tools = 40 integrations. | 4 models + 10 tools = 14 integrations. |
| Brittle, high-maintenance, and impossible to scale. | Robust, low-maintenance, and built for interoperability. |
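The arithmetic in the table can be checked with a few lines of Python. This is only a counting sketch: the function names are made up for illustration, and it assumes every model must reach every tool.

```python
def point_to_point(models, tools):
    """Connectors needed when each model integrates with each tool directly."""
    return models * tools


def with_shared_protocol(models, tools):
    """Components needed when each model has one client and each tool one server."""
    return models + tools


for models, tools in [(4, 10), (5, 10), (4, 100)]:
    print(
        f"{models} models, {tools} tools: "
        f"{point_to_point(models, tools)} direct integrations vs "
        f"{with_shared_protocol(models, tools)} with a shared protocol"
    )
```

Adding a fifth model to ten tools costs ten new connectors in the direct approach, but only one new client when a shared protocol sits in between.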

By creating this standardized middle layer, MCP fully decouples the models from the tools. Developers are freed from the minutiae of individual APIs. As long as a tool has an MCP server, any AI application that speaks MCP can use it instantly. This open standard is fostering an explosion of collaboration and innovation, with a growing ecosystem of servers and clients.

Beyond Connectivity: The Challenge of Scale and Context Overload

MCP brilliantly solves the connectivity problem, transforming the N x M nightmare into a manageable N + M reality. But as we build more ambitious AI agents, a second, more subtle bottleneck emerges: tool overload.

What happens when your AI assistant needs to access not just ten tools, but hundreds or even thousands? A "naive" implementation of MCP would describe every single available tool and function to the LLM in every single request. This approach catastrophically breaks down at scale for several reasons:

- Context Window Explosion: Every LLM has a finite context window—the maximum number of tokens it can process at once. Describing thousands of API endpoints, their parameters, and their documentation can easily consume hundreds of thousands of tokens, completely overwhelming the model's context before the user's actual prompt is even considered.

- Skyrocketing Costs: In the pay-per-token world of LLMs, bigger context means bigger bills. Forcing the model to parse a massive list of tools on every turn is incredibly inefficient and can make your application prohibitively expensive to run.

- The Paradox of Choice: Just like humans, LLMs can get confused when presented with too many options. A massive toolset can lead to lower accuracy, incorrect tool selection, and even "hallucinated" function calls. The model spends more of its reasoning capacity trying to find the right tool than solving the user's problem.
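A rough estimate shows how quickly tool descriptions add up. The sketch below assumes about four characters per token, which is a common rule of thumb and not a real tokenizer, and it uses a deliberately small, hypothetical tool description. Real descriptions are often longer, so treat the output as a lower-bound illustration.

```python
import json

CHARS_PER_TOKEN = 4  # rough rule of thumb (assumption), not a real tokenizer


def make_tool(index):
    """Return a hypothetical tool description with a small JSON Schema."""
    return {
        "name": f"tool_{index}",
        "description": "Example tool that takes a query string and a result limit.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "Search text"},
                "limit": {"type": "integer", "description": "Maximum results"},
            },
            "required": ["query"],
        },
    }


def estimated_tokens(tools):
    """Estimate the tokens needed to send every tool description in one request."""
    return len(json.dumps(tools)) // CHARS_PER_TOKEN


for n in (10, 100, 1000):
    tools = [make_tool(i) for i in range(n)]
    print(f"{n:>5} tools ~ {estimated_tokens(tools):,} tokens per request")
```

The overhead grows roughly linearly with the number of tools and is paid again on every request that includes the full list.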

Simply connecting to 1,000+ tools isn't enough. We need an intelligent way to help the model navigate this vast landscape without drowning in it.

Klavis AI & Strata: ONE Endpoint to Rule Them All

This is precisely the problem Klavis AI was built to solve. Our flagship product, Strata, is an intelligent, unified MCP server that tackles the tool overload crisis head-on. Instead of connecting your AI to dozens or hundreds of individual MCP servers, you connect it to one single Strata endpoint. Behind this endpoint, Strata manages your entire tool library and implements a revolutionary approach called Progressive Discovery.

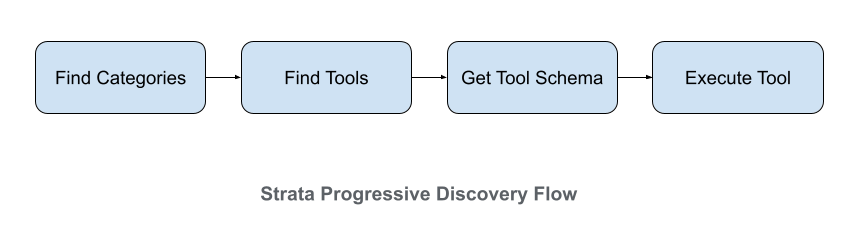

The flow of Strata Progressive Discovery Method


This process guides the AI agent through a logical, human-like reasoning funnel:

- Intent to Categories: The agent doesn't start with tools. It starts by asking Strata about high-level categories based on the user's request, like "issue tracking" or "email."

- Category Navigation: Strata provides a concise list of relevant services (e.g., Jira, Gmail). The agent can then drill down, asking for the specific actions available within the chosen service.

- Action Selection: The agent pinpoints the exact function it needs, such as `create_issue` or `send_email`.

- Precise Execution: Only at the final step does Strata reveal the necessary API details and execute the call, ensuring accuracy and minimizing context size.
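The funnel above can be sketched as a toy, in-memory lookup. This is not Strata's implementation or API: the catalog contents, function names, and parameter schemas are all hypothetical and exist only to show how each step returns a small slice of the tool library instead of the whole thing.

```python
# Hypothetical catalog: category -> service -> action -> parameter schema.
CATALOG = {
    "issue tracking": {
        "Jira": {"create_issue": {"project": "string", "title": "string"}},
    },
    "email": {
        "Gmail": {"send_email": {"to": "string", "subject": "string", "body": "string"}},
    },
}


def list_categories():
    """Step 1: expose only the high-level categories."""
    return sorted(CATALOG)


def list_services(category):
    """Step 2: list the services inside one category."""
    return sorted(CATALOG[category])


def list_actions(category, service):
    """Step 3: list the action names for one service."""
    return sorted(CATALOG[category][service])


def get_action_schema(category, service, action):
    """Step 4: reveal full parameter details for the single chosen action."""
    return CATALOG[category][service][action]


# Walk the funnel for a request such as "file a bug about the login page".
print(list_categories())
print(list_services("issue tracking"))
print(list_actions("issue tracking", "Jira"))
print(get_action_schema("issue tracking", "Jira", "create_issue"))
```

At no point does the agent see more than one level of the catalog, which is what keeps the context small as the library grows.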

This guided process dramatically improves the reliability and efficiency of AI agents. On the MCPMark benchmark, Strata achieves a 15.2% higher pass@1 rate than the official GitHub MCP server and a 13.4% higher rate than the official Notion server. In human evaluation on over 2,000 real-world queries, Strata consistently reaches 83%+ accuracy across complex, multi-app workflows.

Get Started with Klavis Strata in Under Two Minutes

Klavis AI is built by developers, for developers. We offer several flexible pathways to get Strata integrated into your AI application, often in minutes.

The Hosted Service: Zero Setup, Instant Access

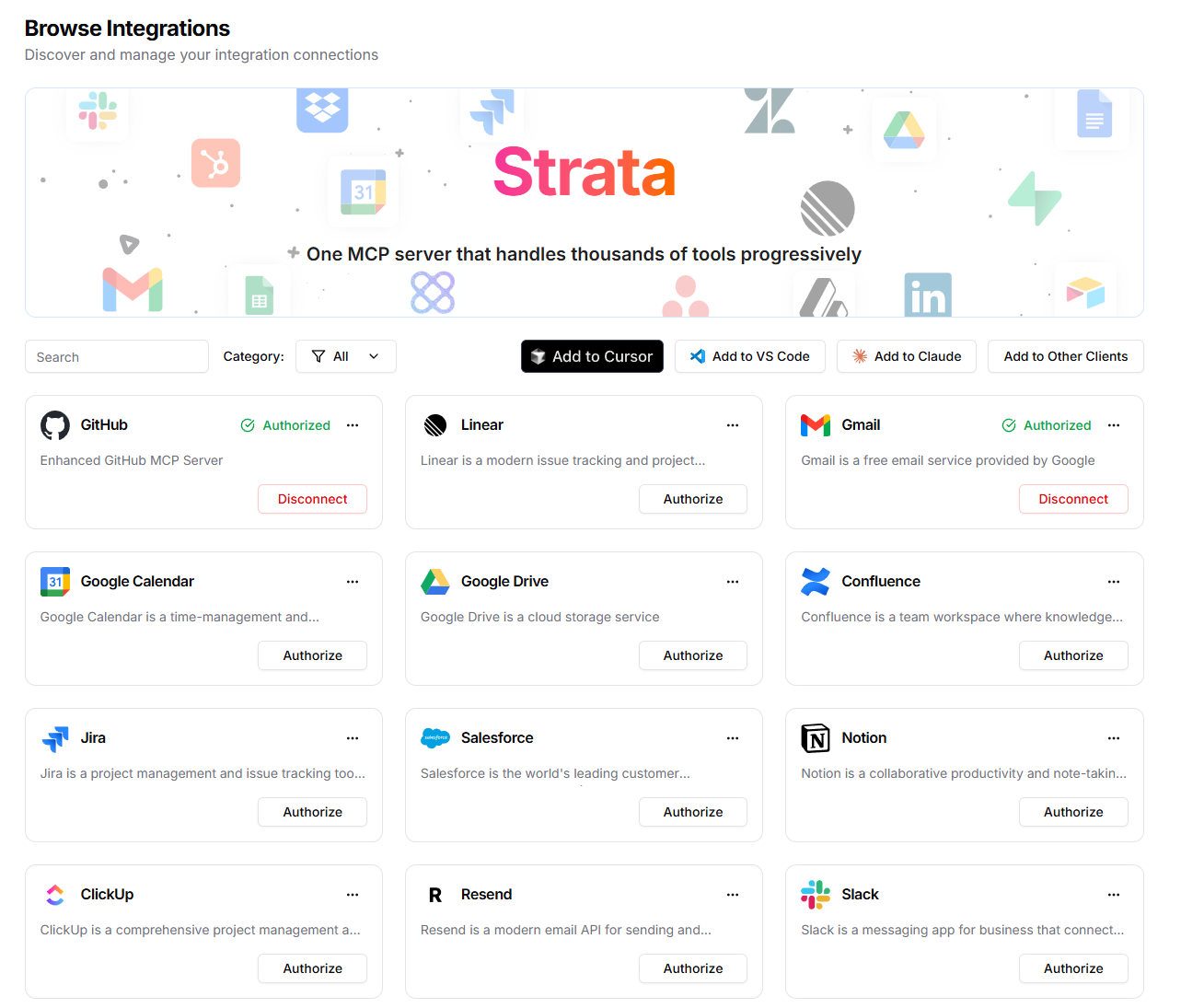

For the fastest integration, our hosted cloud service is the best choice. It's perfect for connecting with platforms like Claude Desktop, Cursor, or any other MCP-compatible client. Simply sign up on our website, select from our library of 50+ pre-built, production-ready MCP servers (for everything from GitHub and Slack to Salesforce), and get a secure Strata endpoint instantly.

Integrate with UI

You can create a Strata MCP server from the Klavis AI dashboard.

A single Strata MCP server can connect to 50+ applications and 1,000+ tools.


Integrate with SDK

For deep integration into your custom applications, our SDKs offer maximum power and flexibility. Here’s how simple it is to get started in Python:

    # First, install the Klavis AI library (run this in your shell, not in Python):
    #   pip install klavis

    from klavis import Klavis, McpServerName

    # Initialize the client with your secure API key
    klavis = Klavis(api_key="your-klavis-api-key")

    # Create a unified Strata server for a specific user.
    # This single endpoint intelligently routes requests to both Gmail and Slack.
    strata_server = klavis.mcp_server.create_strata_server(
        user_id="user-12345",
        servers=[McpServerName.GMAIL, McpServerName.SLACK],
    )

    print("Strata server created successfully!")
    print(f"Endpoint: {strata_server.endpoint}")

The Future is Standardized

The N x M problem has been a silent killer of productivity and innovation in the AI space. By embracing open standards like MCP, we can finally move beyond this bottleneck and build the next generation of powerful, interconnected AI agents. At Klavis AI, we are dedicated to accelerating this future by providing the robust, developer-friendly tools needed to make standardized AI integration a reality for everyone.

Frequently Asked Questions (FAQs)

Q1: How is MCP different from integration platforms like Zapier or MuleSoft? MCP is designed for the dynamic, conversational, and often unpredictable nature of AI agents, whereas platforms like Zapier excel at structured, trigger-based workflow automation. MCP establishes a real-time, bidirectional communication channel that allows an AI to discover, query, and interact with tools contextually during a task. It's less about pre-defined "if-this-then-that" logic and more about providing a universal language for AI to explore and act upon the digital world.

Q2: Is the Klavis AI platform built on open standards, or is it a proprietary system? Our entire platform is built on the open-source Model Context Protocol. We are firm believers that open standards are essential for fostering a healthy, innovative ecosystem. While our managed Strata server provides enterprise-grade features like progressive discovery and multi-tenant authentication, we also actively contribute to and maintain numerous open-source MCP servers that developers can freely use, modify, and self-host. Our mission is to add value on top of the open standard, not to lock users into a proprietary one.

Q3: How does Strata's "progressive discovery" handle security and permissions? Security is fundamental to our design. Strata doesn't bypass the native security model of the tools it connects to; it respects it. Authentication is handled via secure protocols like OAuth 2.0, ensuring that the AI agent can only perform actions that the authenticated user is permitted to perform. Klavis AI is also SOC 2 compliant.