Let's be honest: out of the box, even a powerhouse LLM like Anthropic's Claude 4 is stuck in a digital cage. Its knowledge is vast but static, frozen at the time of its last training run. It can't check your latest code commits, read a new document in Google Drive, or interact with live services. For developers aiming to build truly useful AI applications, that's a major roadblock.

The real magic happens when you give an LLM like Claude the ability to interact with the outside world—when you enable tool use. This capability is the engine driving the AI agent market, a sector projected to surge from around $7.9 billion in 2025 to over $98 billion by 2032.

This guide is for developers who want to unlock that magic. We’ll cut through the noise and show you exactly how to connect Claude to the tools you use every day with the Model Context Protocol (MCP). We'll cover what MCP is and provide practical, step-by-step walkthroughs for both no-code UI integration and deep programmatic control.

Why a Connected LLM is a Game-Changer

When Claude can interact with external systems, it graduates from a sophisticated text generator to an active agent in your workflows. It can stop telling you what to do and start doing it.

Imagine prompting Claude to:

- "Review the latest pull request in the 'frontend-app' repo, leave comments on potential issues, and if there are no conflicts, merge it."

- "Scan our Salesforce records for new leads from the past week, create a summary, and post it to the #sales channel in Slack."

- "Find all files in my Google Drive modified this month that mention 'Q4 Projections,' synthesize the key findings into a Google Doc, and share it with the finance team."

These are complex, multi-step tasks that were previously the domain of fragile, custom-coded scripts. With a standardized approach, they become simple instructions.

What Exactly is the Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is an open standard, first introduced by Anthropic in late 2024, designed to be a universal adapter for AI. It creates a secure and standardized way for LLMs to communicate with external data, applications, and services. Before MCP, developers faced the "N×M problem": building a custom integration for every pairing of model and tool, so 3 models and 10 tools could mean 30 separate integrations. MCP solves this by creating a common language that each model and each tool implements once.

The architecture is a straightforward client-server model:

- MCP Host: The AI application itself (e.g., the Claude Desktop app, your custom Python script).

- MCP Client: The component in your app that talks to the server.

- MCP Server: An external service exposing tools and data (e.g., a GitHub MCP server).

This means once a tool has an MCP server, any MCP-compatible AI can use it instantly.
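To make the "common language" concrete: MCP messages are JSON-RPC 2.0, and a client typically discovers tools with a `tools/list` request and invokes one with `tools/call`. The sketch below only builds those request payloads. The helper names and the `create_issue` tool with its arguments are hypothetical, and a real client would also handle the initialization handshake and the transport.

```python
import json
from itertools import count

# Each JSON-RPC request carries its own id so responses can be matched to it.
_request_ids = count(1)


def list_tools_request() -> dict:
    """Build a JSON-RPC 2.0 request asking an MCP server for its tools."""
    return {"jsonrpc": "2.0", "id": next(_request_ids), "method": "tools/list"}


def call_tool_request(name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request invoking one tool by name."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


if __name__ == "__main__":
    print(json.dumps(list_tools_request(), indent=2))
    # "create_issue" and its arguments are made up for illustration.
    example = call_tool_request(
        "create_issue", {"repo": "frontend-app", "title": "Fix login bug"}
    )
    print(json.dumps(example, indent=2))
```

Because every server answers the same two methods, the host application does not need tool-specific request code.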

Method 1: Connecting Tools via the Claude UI (The No-Code Way)

The quickest way to get started is by connecting pre-built tools directly through the claude.ai web interface. This is perfect for activating common integrations without touching a line of code.

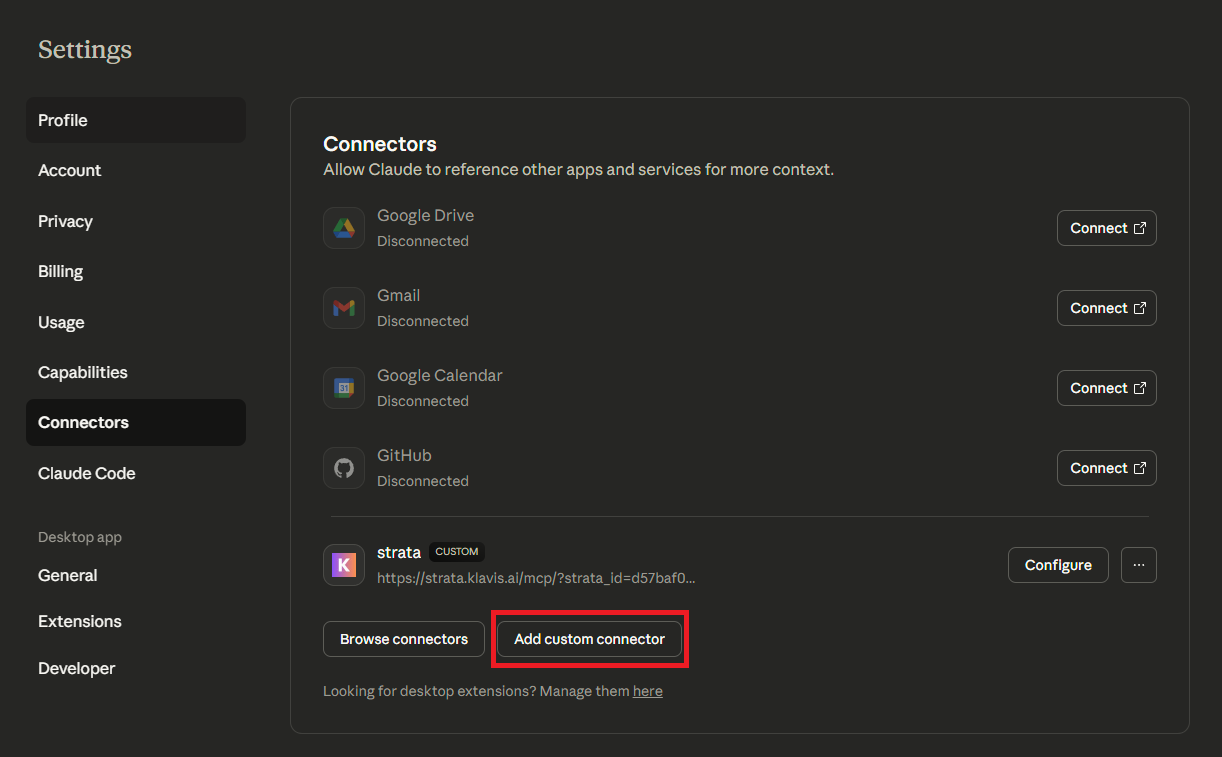

- Navigate to Settings: Log in to claude.ai, click your profile icon, and select "Settings."

- Open Connectors: Find and click on the "Connectors" section in the sidebar.

- Enable an Integration: Choose the custom connector option, enter your MCP server's URL, and click "Add."

- Use in Chat: Once connected, you can use the tool in your conversations. The attachment icon (paperclip) will now let you select resources from your connected servers, allowing you to ask Claude to perform actions like summarizing a selected document.

This UI approach is fantastic for personal productivity, but to build custom applications or connect to your own internal APIs, you'll need to roll up your sleeves and code.

Method 2: A Developer's Guide to Programmatic MCP Integration

This is where the real power lies. Programmatic integration lets you connect Claude to any tool imaginable. Research shows that integrating external tools can dramatically improve LLM performance, with some tool-augmented models achieving high accuracy on complex reasoning and coding benchmarks.

Prerequisites

- Python 3.9+ installed (current releases of the anthropic library no longer support Python 3.7).

- An Anthropic API key from the Anthropic Console.

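- The anthropic Python library (pip install anthropic).

- The klavis Python library (pip install klavis) and a Klavis API key, both used by the script in Step 2.

- Docker (for our self-hosting example).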

Step 1: Get Your MCP Server Running

The MCP server is the bridge to your external tool. You have two main options.

Option A: Self-Host an Open-Source Server

The developer community is building a rich ecosystem of open-source MCP servers. Many are packaged as Docker containers, making them incredibly easy to run locally. For this example, we'll use a GitHub server.

Fire up a terminal and run:

# Pull and run a pre-built GitHub MCP server

docker pull ghcr.io/klavis-ai/github-mcp-server:latest

docker run -p 5000:5000 -e GITHUB_TOKEN=your_github_pat ghcr.io/klavis-ai/github-mcp-server:latest

(Just replace your_github_pat with a GitHub Personal Access Token).

You now have a server running on localhost:5000, ready to take instructions.
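Before pointing a client at the server, it can help to confirm that something is actually listening on the port. This is a minimal sketch using only the standard library. `is_port_open` is a hypothetical helper, and it checks only that a TCP connection succeeds, not that the process behind it speaks MCP.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolved hosts.
        return False


if __name__ == "__main__":
    # Port 5000 matches the -p 5000:5000 mapping in the docker run command.
    status = "reachable" if is_port_open("localhost", 5000) else "not reachable"
    print(f"MCP server on localhost:5000 is {status}")
```

If the check fails, `docker ps` and the container logs are the usual next places to look.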

Option B: Use a Managed MCP Service

Running, securing, and scaling dozens of servers for production is a heavy lift. This is where managed infrastructure providers come in. A service like Klavis AI handles the complex parts—like secure OAuth 2.0 flows and multi-tenancy—so you can focus on your application logic. This approach gives you instant access to a massive library of production-ready tools.

| Category | Available MCP Servers |
|---|---|
| Development & DevOps | GitHub, GitLab, Jira, Linear, Vercel, Sentry, Cloudflare, PagerDuty |
| Communication & Collab | Slack, Discord, Gmail, Microsoft Teams, Google Calendar, Cal.com |
| Productivity & Docs | Notion, Confluence, Google Drive, Asana, ClickUp, Airtable, Monday.com |
| Business & CRM | Salesforce, HubSpot, Zendesk, QuickBooks, Stripe, DocuSign, Pipedrive |
| Data & Search | Postgres, Supabase, Brave Search, Tavily, Firecrawl, Exa, Perplexity |
| Marketing & Sales | Klaviyo, Resend, PostHog, Mixpanel, Gong, Close |

Step 2: The Code: Building the Tool-Use Loop

At its heart, a tool-using application is a conversation between your code, Claude, and the MCP server. The logic is a loop: you send a prompt, Claude asks to use a tool, you execute it, and you send the result back to Claude so it can form a final answer.

Here's a Python script that does just that. It follows Option B: the Klavis SDK creates a hosted MCP server exposing Gmail and Slack, and the loop runs against that server's URL.

import os

from anthropic import Anthropic
from klavis import Klavis
from klavis.types import McpServerName, ToolFormat

# Both keys are read from the environment. Set ANTHROPIC_API_KEY and
# KLAVIS_API_KEY before running instead of hard-coding them here.
klavis_client = Klavis(api_key=os.getenv("KLAVIS_API_KEY"))
claude_client = Anthropic(api_key=os.getenv("ANTHROPIC_API_KEY"))

# Create one hosted MCP endpoint that exposes Gmail and Slack tools for this user.
strata = klavis_client.mcp_server.create_strata_server(
    servers=[McpServerName.GMAIL, McpServerName.SLACK],
    user_id="1234"
)


def claude_with_mcp_server(mcp_server_url: str, user_query: str, max_iterations: int = 10):
    messages = [{"role": "user", "content": user_query}]

    # Fetch the server's tools, already converted to Anthropic's tool format.
    mcp_server_tools = klavis_client.mcp_server.list_tools(
        server_url=mcp_server_url,
        format=ToolFormat.ANTHROPIC
    )

    # Cap the number of round trips so a confused model cannot loop forever.
    for _ in range(max_iterations):
        response = claude_client.messages.create(
            model="claude-sonnet-4-20250514",
            max_tokens=4000,
            system="You are a helpful assistant. Use the available tools to answer the user's question.",
            messages=messages,
            tools=mcp_server_tools.tools
        )

        # Keep the assistant turn, including any tool_use blocks, in the history.
        messages.append({"role": "assistant", "content": response.content})

        if response.stop_reason != "tool_use":
            # No tool requested: join the text blocks into the final answer.
            return "".join(block.text for block in response.content if block.type == "text")

        # Execute every tool Claude asked for and collect the results.
        tool_results = []
        for content_block in response.content:
            if content_block.type == "tool_use":
                function_name = content_block.name
                function_args = content_block.input

                print(f"🔧 Calling: {function_name}, with args: {function_args}")

                result = klavis_client.mcp_server.call_tools(
                    server_url=mcp_server_url,
                    tool_name=function_name,
                    tool_args=function_args
                )

                # tool_use_id ties each result to the request that produced it.
                tool_results.append({
                    "type": "tool_result",
                    "tool_use_id": content_block.id,
                    "content": str(result)
                })

        # Tool results go back to Claude as a user turn.
        messages.append({"role": "user", "content": tool_results})

    return "Max iterations reached without final response"


result = claude_with_mcp_server(
    mcp_server_url=strata.strata_server_url,
    user_query="Check my latest 5 emails and summarize them in a Slack message to #general"
)

print(f"\n🤖 Final Response: {result}")
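The `format=ToolFormat.ANTHROPIC` argument matters because Claude expects each tool as a `name`, a `description`, and a JSON Schema under `input_schema`, while MCP servers describe a tool's arguments under an `inputSchema` field. As a rough illustration of that translation (not the Klavis SDK's actual implementation), the hypothetical helper below converts one MCP-style tool description. The `gmail_search` tool is invented for the example.

```python
from typing import Any


def mcp_tool_to_anthropic(tool: dict[str, Any]) -> dict[str, Any]:
    """Convert an MCP-style tool description into Anthropic's tool format."""
    return {
        "name": tool["name"],
        "description": tool.get("description", ""),
        # Fall back to an empty object schema when the tool takes no arguments.
        "input_schema": tool.get("inputSchema", {"type": "object", "properties": {}}),
    }


if __name__ == "__main__":
    # Invented example, shaped like one entry in an MCP tools/list result.
    mcp_tool = {
        "name": "gmail_search",
        "description": "Search the user's mailbox.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }
    print(mcp_tool_to_anthropic(mcp_tool))
```

If you talk to an MCP server without an SDK that does this for you, a conversion along these lines is what fills the `tools` parameter of `messages.create`.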

Security is Not Optional

When you give an LLM keys to your kingdom, you need to be smart about security.

- Least Privilege: Tokens and API keys should have the absolute minimum permissions they need to function. No more.

- Sanitize Everything: Treat all output from an LLM as untrusted. Validate and sanitize it before it gets anywhere near a database query or another API call.

- Human-in-the-Loop: For any action that is sensitive or irreversible (like deleting data or making a payment), add a human confirmation step.
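The least-privilege and human-in-the-loop points can be enforced in the application itself, at the spot where tool calls are executed. Here is a minimal sketch, assuming you wrap tool execution in a function of your own. The tool names, `guarded_call`, and `ask_on_console` are all hypothetical, and a production system would likely log decisions and use a proper approval UI.

```python
from typing import Any, Callable

# Hypothetical tool names; list whichever of your tools are destructive or costly.
SENSITIVE_TOOLS = {"delete_repository", "send_payment"}
# Application-level allowlist: any tool not named here is refused outright.
ALLOWED_TOOLS = {"list_pull_requests", "delete_repository", "send_payment"}


def guarded_call(
    tool_name: str,
    tool_args: dict[str, Any],
    execute: Callable[[str, dict[str, Any]], Any],
    confirm: Callable[[str], bool],
) -> Any:
    """Run a tool only if it is allowlisted and, when sensitive, approved by a human."""
    if tool_name not in ALLOWED_TOOLS:
        return f"Refused: {tool_name} is not an allowed tool."
    if tool_name in SENSITIVE_TOOLS and not confirm(f"Allow {tool_name} with {tool_args}?"):
        return f"Cancelled: a human declined {tool_name}."
    return execute(tool_name, tool_args)


def ask_on_console(question: str) -> bool:
    """Ask the operator for approval on the terminal; anything but 'y' means no."""
    return input(f"{question} [y/N] ").strip().lower() == "y"
```

In the loop from Step 2, the direct `call_tools` invocation could be routed through `guarded_call`, passing `ask_on_console` as `confirm`. Returning the refusal as the tool result lets Claude explain to the user why the action did not happen.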

Frequently Asked Questions (FAQs)

Q1: Why not just write my own API wrappers instead of using MCP?

You could, but you'd be reinventing the wheel every time. MCP standardizes authentication, error handling, and function definitions. It's about building a scalable, interoperable system, not a collection of one-off scripts. An MCP server you build once can be used by any MCP-compatible AI, not just Claude.

Q2: How does Claude 4's tool use capability stack up?

Claude 4 represents a significant leap forward in tool use, making it a powerful engine for building sophisticated AI agents. Anthropic introduced several key upgrades, including extended thinking with tool use, which allows the model to alternate between reasoning and using tools like web search to formulate better answers.

Q3: Can I connect Claude to my company's internal APIs with MCP?

Yes, and this is one of MCP's killer use cases. You can build a private MCP server that acts as a secure gateway to your internal services. This gives your AI agents access to proprietary business logic and data without exposing those systems directly.

The Bottom Line

Connecting Claude to external tools with the Model Context Protocol is how you move from building interesting demos to creating powerful, real-world AI agents. By embracing an open standard like MCP, your applications become more capable, reliable, and future-proof. The era of the disconnected LLM is over; the future is about building bridges.