That Jira ticket isn't going to update itself.

You’re deep in the zone, code flowing from your fingertips. Your mental model of the system is complete, the solution to a tricky bug finally clicking into place. Then, the Slack notification pops up: "Hey, can you update the Jira ticket for that?"

The flow state shatters. You switch windows, hunt for the right project, find the ticket, and try to summarize the complex work you were just doing. By the time you get back to your IDE, the magic is gone. That intricate mental model has dissolved.

This isn't just frustrating; it's a massive productivity killer. Research from the University of California, Irvine found that it takes more than 23 minutes on average to get back on task after an interruption. This "developer tax", the constant context switching between coding and administrative tasks, is something we’ve all learned to live with. But what if we didn't have to?

What if you could simply type "ai: create a bug ticket for the auth failure, assign it to me, and link it to the Q4 epic" in your IDE's terminal and just... keep coding?

This guide will show you how to build exactly that. We'll walk through connecting a Large Language Model (LLM) to Jira using the Model Context Protocol (MCP), an open standard designed to let AI agents securely interact with tools like the ones you use every day.

Eliminating the "Developer Tax": The Real Value of Jira Automation

Integrating AI with Jira is about more than just saving a few clicks. It's a fundamental upgrade to your team's development engine. The constant back-and-forth between your code and your project board is a silent killer of momentum. We call this the "developer tax"—the cognitive overhead of administrative tasks that drains energy and pulls you out of deep work.

When you're in a state of flow, you're solving complex problems efficiently. Breaking that flow to update a ticket is expensive. It's not just the two minutes it takes to edit the ticket; it's the twenty minutes it takes to get your head back in the code.

By automating this friction away, you're not just improving convenience; you're buying back your team's most valuable and finite resource: uninterrupted focus. The difference is stark.

| Metric | Manual Jira Management (The Status Quo) | AI-Powered Jira via MCP (The Smart Way) |
|---|---|---|
| Developer Focus | Constantly broken by context switching. "I'll update the ticket later." | Flow state is preserved. Jira updates happen via a quick command in-editor. |
| Task Accuracy | Prone to human error: forgotten fields, inconsistent formatting, delayed updates. | Automated and perfectly consistent every time. Updates are real-time. |
| Speed | Minutes per ticket to navigate the UI, click through screens, and type. | Seconds to issue a natural language command. |
| Reporting | Requires manually building JQL queries and exporting data to get insights. | Ask questions in plain English: "Show me all high-priority bugs in the current sprint." |
| Team Onboarding | New hires need to be trained on your team's specific Jira workflows and board setups. | The interface is intuitive from day one: just tell the AI what you need. |
| Overall Impact | A constant, low-level drain on energy that slows down the entire development cycle. | Frees up cognitive bandwidth for what matters: solving problems and building software. |

Building Bridges, Not Boilerplate: The Smart Way to Integrate with MCP

So, you're sold on the idea. How do you actually connect an LLM to Jira?

The obvious first thought is often the most painful: diving straight into Jira's REST API. This path is a minefield of one-off scripts, tangled dependencies, and late nights spent "spelunking" through API documentation. You'd be responsible for wrestling with Jira's specific OAuth 2.0 implementation, writing brittle code to format every request, and parsing every unique response. It’s a huge time sink, and the moment you want to connect another tool or try a new AI model, you have to start the whole painful process over again.

This is the old way. It's a recipe for technical debt.

The smart way is to use the Model Context Protocol (MCP).

Think of MCP as a universal adapter for AI. It’s an open standard that sits between your AI and your tools, providing a clean, consistent way for them to communicate. Instead of a messy point-to-point connection, you get a simple, elegant architecture:

- Your AI Application (The MCP Client): This is your code, which tells an LLM what to do. It doesn't need to know anything about Jira's API.

- The MCP Server: This is the translator. It takes a simple command from your AI (like create_issue) and handles all the complex logic of turning that into a secure, properly formatted Jira API call.

This approach decouples your agent's logic from the tool's implementation. It’s a more robust, scalable, and secure way to build. You handle the tool integration once, and then any AI can use it.
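
To make that division of labor concrete, here is a minimal Python sketch. The client side wraps a tool invocation in an MCP `tools/call` request (a JSON-RPC 2.0 message), and the server side maps the tool arguments onto the body that Jira's REST API expects when creating an issue. The argument names (`project_key`, `summary`, `issue_type`), the helper function names, and the sample values are assumptions for illustration, not the interface of any particular server.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Client side: wrap a tool invocation in an MCP `tools/call` request."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def to_jira_issue_payload(arguments):
    """Server side: map tool arguments to a Jira 'create issue' request body."""
    return {
        "fields": {
            "project": {"key": arguments["project_key"]},
            "summary": arguments["summary"],
            # Hypothetical default: fall back to "Task" if no type is given.
            "issuetype": {"name": arguments.get("issue_type", "Task")},
        }
    }


# Hypothetical arguments an LLM might produce for the create_issue tool.
request = build_tool_call(
    1,
    "create_issue",
    {
        "project_key": "AUTH",
        "summary": "Login fails with expired token",
        "issue_type": "Bug",
    },
)
payload = to_jira_issue_payload(request["params"]["arguments"])
print(json.dumps(payload, indent=2))
```

A real server would then send this body to Jira's issue-creation endpoint using the user's OAuth token and return the new issue key as the tool result. That network step is left out here so the sketch stays self-contained.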

Your AI Agent's Toolkit

To build our Jira assistant, we only need three things:

- A Large Language Model (LLM): The brain. Any modern LLM with function-calling capabilities will work (GPT-5, Claude 4, Gemini, etc.).

- An MCP Client: The interface through which you interact with the LLM; it also connects to the MCP server. Several commonly used MCP clients are available.

- A Jira MCP Server: The bridge to Jira.

The server is the key component. It needs to expose all the common Jira actions as simple "tools" the LLM can understand.

The "Build vs. Use" Decision

This is where you have a choice. You can build a Jira MCP server from scratch, which means spending significant time on infrastructure, security, and wrestling with Jira's OAuth 2.0 flow.

Or, you can use a pre-built, managed solution and get started in minutes. This is what we focus on at Klavis AI. We provide an open-source Jira MCP server that's production-ready, secure, and handles all the authentication headaches. This lets you skip the plumbing and get straight to building your agent.

A production-ready Jira server should offer a comprehensive toolkit like this:

| Tool Name | What It Does |
|---|---|
| jira_search | Find issues using Jira Query Language (JQL). |
| jira_get_issue | Pull the full details for a specific ticket. |
| jira_create_issue | Create a new ticket, story, or bug. |
| jira_update_issue | Change a ticket's status, assignee, or other fields. |
| jira_add_comment | Add a comment to an issue. |
| jira_get_sprints_from_board | See what's in the current or upcoming sprint. |
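
As a rough illustration of what "exposing a tool" means, the Python sketch below describes two of the tools above the way MCP servers advertise them: a name, a description, and a JSON Schema for the arguments. The argument names (`jql`, `issue_key`, `comment`) and the two helper functions are assumptions made for this example; a real server's schemas may differ.

```python
# Tool catalog in the shape MCP uses: name, description, inputSchema.
# Argument names are hypothetical, chosen for this sketch.
TOOLS = [
    {
        "name": "jira_search",
        "description": "Find issues using Jira Query Language (JQL).",
        "inputSchema": {
            "type": "object",
            "properties": {"jql": {"type": "string"}},
            "required": ["jql"],
        },
    },
    {
        "name": "jira_add_comment",
        "description": "Add a comment to an issue.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "issue_key": {"type": "string"},
                "comment": {"type": "string"},
            },
            "required": ["issue_key", "comment"],
        },
    },
]


def list_tools():
    """Shape of a `tools/list` result: the catalog the LLM chooses from."""
    return {"tools": TOOLS}


def missing_arguments(tool_name, arguments):
    """Return the required argument names the caller did not supply."""
    schema = next(t["inputSchema"] for t in TOOLS if t["name"] == tool_name)
    return [name for name in schema["required"] if name not in arguments]


print([tool["name"] for tool in list_tools()["tools"]])
print(missing_arguments("jira_add_comment", {"issue_key": "BUG-1234"}))
```

Because every tool carries its own schema, the server can reject an incomplete call before anything reaches Jira, and the LLM can read the same schema to learn which arguments it must provide.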

Klavis AI also offers the Strata MCP, a unified MCP server that provides many more tools than those listed above. It also supports progressive discovery, which guides the LLM to the right tool step by step. This helps complex workflows avoid context overload and improves the task success rate.

Get MCP Server With a Few Clicks

As mentioned, using a pre-built MCP server is a good choice. Here is a step-by-step guide to setting up the Klavis AI MCP server with a few clicks.

Step 1: Sign Up with Klavis AI

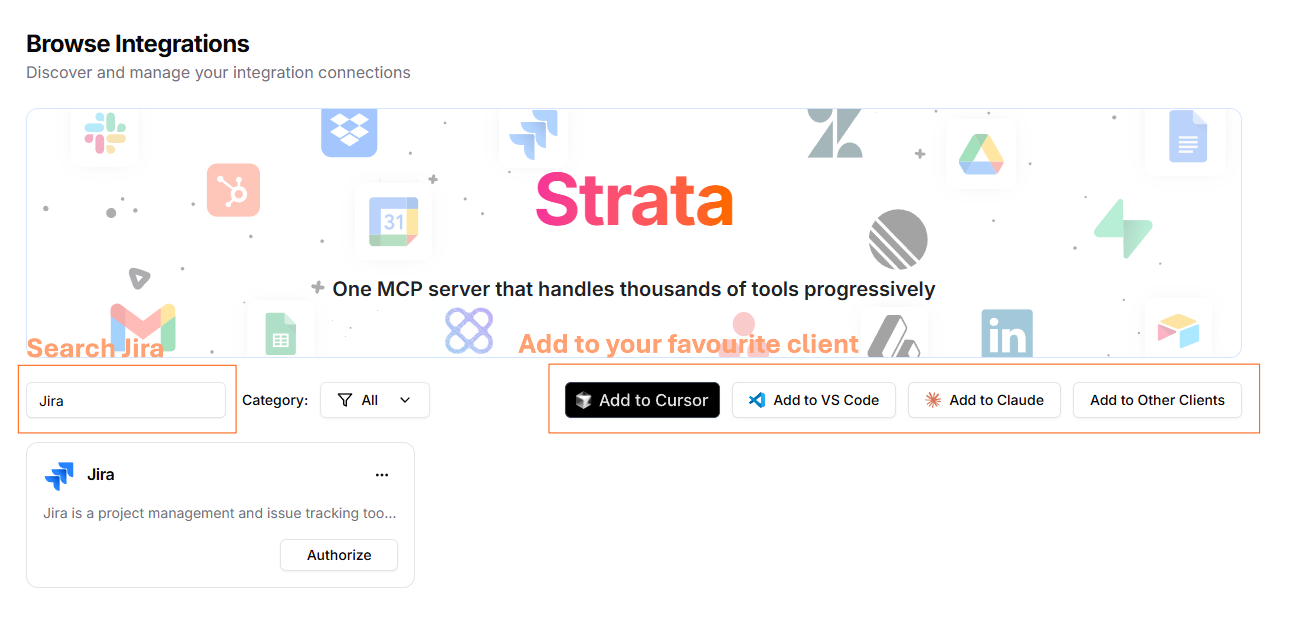

Sign up with Klavis AI, and you will be taken to your home page, where multiple MCP integrations are available.

Step 2: Find the Jira MCP

Type Jira in the search box to find the Jira MCP.

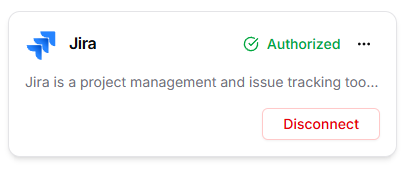

Step 3: Authorize MCP with OAuth 2.0

Click Authorize, then log in with your Jira account. Klavis AI handles the authorization for you with OAuth 2.0. After you log in to Jira, you will see that your Jira MCP is authorized.

Step 4: Integrate the MCP with Your Favorite MCP Client

Click to integrate the MCP with Cursor, VS Code, Claude, or another client. Then enjoy your powerful Jira agent!

Beyond the Ticket: High-Impact Use Cases for Your Jira AI Agent

Now that you have the foundation—an LLM connected to Jira via a secure MCP server—you can move beyond simple commands. You're ready to build a powerful AI agent that automates entire workflows, saving your team time and mental energy.

Here are a few high-impact use cases to show you what's possible.

1. Automated Sprint Planner

This agent analyzes your backlog and team velocity to automatically draft a well-formed sprint, saving hours of manual planning.

"Draft a new two-week sprint for the 'Phoenix Project' based on our 28-point velocity, prioritizing all P0 bugs before filling the rest with top-ranked stories from the 'Q4 Features' epic."

"Find all stories in the backlog with the 'needs-grooming' label that are missing story point estimates and list them for review."

2. Intelligent Triage Engineer

This agent acts as a first responder, automatically investigating, enriching, and routing new bug reports to the correct developer.

"A critical bug was just reported for a '500 error on payment confirmation'. Search for duplicates, then create a P0 ticket, label it 'payments' and 'critical-outage', and assign it to the on-call payments engineer."

"Find the developer who last committed to the

PaymentProcessor.jsfile and suggest them as the assignee for ticket BUG-1234."
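
The "search for duplicates" step in the first prompt usually comes down to a JQL query. The Python sketch below builds one using a phrase match on Jira's `text` field and excludes issues that are already done. The function name, the project key `PAY`, and the `jql` argument name on the `jira_search` tool are assumptions for illustration.

```python
def build_duplicate_search_jql(project_key, phrase):
    """Build a JQL query that looks for open bugs mentioning an exact phrase."""
    # Drop quotes and backslashes so the phrase cannot break the JQL string.
    cleaned = phrase.replace("\\", " ").replace('"', " ").strip()
    return (
        f'project = "{project_key}" AND issuetype = Bug '
        f'AND text ~ "\\"{cleaned}\\"" AND statusCategory != Done '
        "ORDER BY created DESC"
    )


jql = build_duplicate_search_jql("PAY", "500 error on payment confirmation")

# Hypothetical tool call the agent would send to the MCP server.
search_call = {"name": "jira_search", "arguments": {"jql": jql}}
print(search_call["arguments"]["jql"])
```

If the search comes back empty, the agent can go on to create the new P0 ticket; if not, it can link or comment on the existing issue instead of filing a duplicate.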

3. On-Demand Reporting Analyst

This agent provides a natural language interface to your Jira data, allowing anyone to get immediate project status updates without writing JQL.

"Generate a progress summary for the 'New User Onboarding' epic, listing all completed stories, what's in progress, and any blocked tickets."

"Show me any tickets on the Core-API board that have been 'In Review' for more than 48 hours and @-mention the assignee in a comment on each one."

Frequently Asked Questions

1. What's a Jira MCP Server, really? It's a small, dedicated web service that acts as a secure translator. It takes simple, standardized requests from an AI (like "create an issue") and converts them into the specific, complex API calls that Jira understands.

2. Can I use this with ChatGPT, Claude, or other LLMs? Yes. That's the point of MCP: it's an open standard. As long as your AI application includes an MCP client, it can talk to any MCP server, regardless of the underlying LLM.

3. Is this secure? I don't want an AI going rogue in my Jira. Absolutely. Security is central to MCP. A well-built server uses OAuth 2.0, meaning the AI agent authenticates as you. It inherits all your existing permissions in Jira and can't see or do anything you're not already allowed to.

4. Why is this better than just calling the Jira API directly? It's about abstraction and future-proofing. With MCP, you build your tool integration once. Then you can plug in any AI, now or in the future. It saves you from writing mountains of boilerplate code and keeps you from getting locked into vendor-specific ecosystems.