The AI development landscape shifted dramatically on March 1, 2025, when LangChain released the MCP Adapters package, solving one of the most persistent challenges in building AI agents: connecting them to external tools without writing custom integration code for every single API.

If you've built AI agents that need to check Gmail, query GitHub, update Salesforce, and post to Slack—all in one workflow—you understand the pain. Each integration demands custom authentication logic, unique request formats, and endless debugging sessions. The Model Context Protocol (MCP) eliminates this friction by providing a universal interface, and LangChain's MCP adapters make it accessible to the entire Python AI ecosystem.

Understanding LangChain MCP Adapters: The Bridge to External Tools

LangChain MCP Adapters convert MCP tools into LangChain- and LangGraph-compatible tools, enable interaction with tools across multiple MCP servers, and seamlessly integrate the hundreds of tool servers already published into LangGraph agents. Think of it as the translation layer that lets your LangChain agents speak the universal language of MCP.

How It Works

The architecture is straightforward:

- Connection: Your LangChain agent connects to MCP servers using `MultiServerMCPClient`.
- Discovery: The adapter automatically fetches tool definitions from each server.
- Registration: Tools are converted into LangChain-compatible tools.
- Execution: Your agent calls these tools like any native LangChain tool.

The adapter allows agents to pull from multiple MCP servers at once, making it easier to combine different tools for more powerful applications.
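To make the four steps concrete, here is a toy, stdlib-only model of what the adapter does conceptually. It is not the library's implementation: `FakeMCPServer`, `ToolDefinition`, `AgentTool`, and `load_tools` are hypothetical names, and real MCP servers are reached over stdio or HTTP rather than as in-process objects.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ToolDefinition:
    """Shape of a tool as an MCP server advertises it: name, description, JSON Schema."""
    name: str
    description: str
    input_schema: dict[str, Any]


class FakeMCPServer:
    """Hypothetical in-process stand-in for an MCP server."""

    def __init__(self, handlers: dict[str, tuple[ToolDefinition, Callable[..., str]]]):
        self._handlers = handlers

    def list_tools(self) -> list[ToolDefinition]:
        # Discovery: the server reports which tools it offers
        return [definition for definition, _ in self._handlers.values()]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> str:
        # Execution: the server runs the named tool with JSON-style arguments
        _, handler = self._handlers[name]
        return handler(**arguments)


@dataclass
class AgentTool:
    """Framework-side wrapper the agent sees, analogous to a LangChain tool."""
    name: str
    description: str
    args_schema: dict[str, Any]
    func: Callable[[dict[str, Any]], str]


def load_tools(servers: dict[str, FakeMCPServer]) -> list[AgentTool]:
    """Connect to every server, discover its tools, and wrap each one (registration)."""
    tools = []
    for server in servers.values():
        for definition in server.list_tools():
            tools.append(AgentTool(
                name=definition.name,
                description=definition.description,
                args_schema=definition.input_schema,
                # Default arguments bind this server and tool name now, so each
                # wrapper routes to its own server instead of the last one in the loop
                func=lambda args, s=server, n=definition.name: s.call_tool(n, args),
            ))
    return tools


# Two hypothetical servers, each exposing one tool
gmail = FakeMCPServer({
    "list_emails": (
        ToolDefinition("list_emails", "List recent emails",
                       {"type": "object", "properties": {"limit": {"type": "integer"}}}),
        lambda limit: f"fetched {limit} emails",
    ),
})
slack = FakeMCPServer({
    "post_message": (
        ToolDefinition("post_message", "Post to a Slack channel",
                       {"type": "object", "properties": {"channel": {"type": "string"},
                                                         "text": {"type": "string"}}}),
        lambda channel, text: f"posted to {channel}",
    ),
})

tools = load_tools({"gmail": gmail, "slack": slack})
print([t.name for t in tools])       # ['list_emails', 'post_message']
print(tools[0].func({"limit": 5}))   # fetched 5 emails
```

The point of the sketch is the shape of the pipeline: tools from several servers end up in one flat list, and each wrapper remembers which server to route its call to.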

Quick Start: Building Your First Agent with Klavis AI

Let's build a practical agent using Klavis AI's MCP infrastructure, which provides production-ready MCP servers with built-in OAuth support. Klavis AI eliminates authentication headaches, letting you focus on agent logic rather than OAuth flows.

Installation and Setup

First, install the necessary packages:

```bash
pip install langchain-mcp-adapters langgraph langchain-openai klavis
```

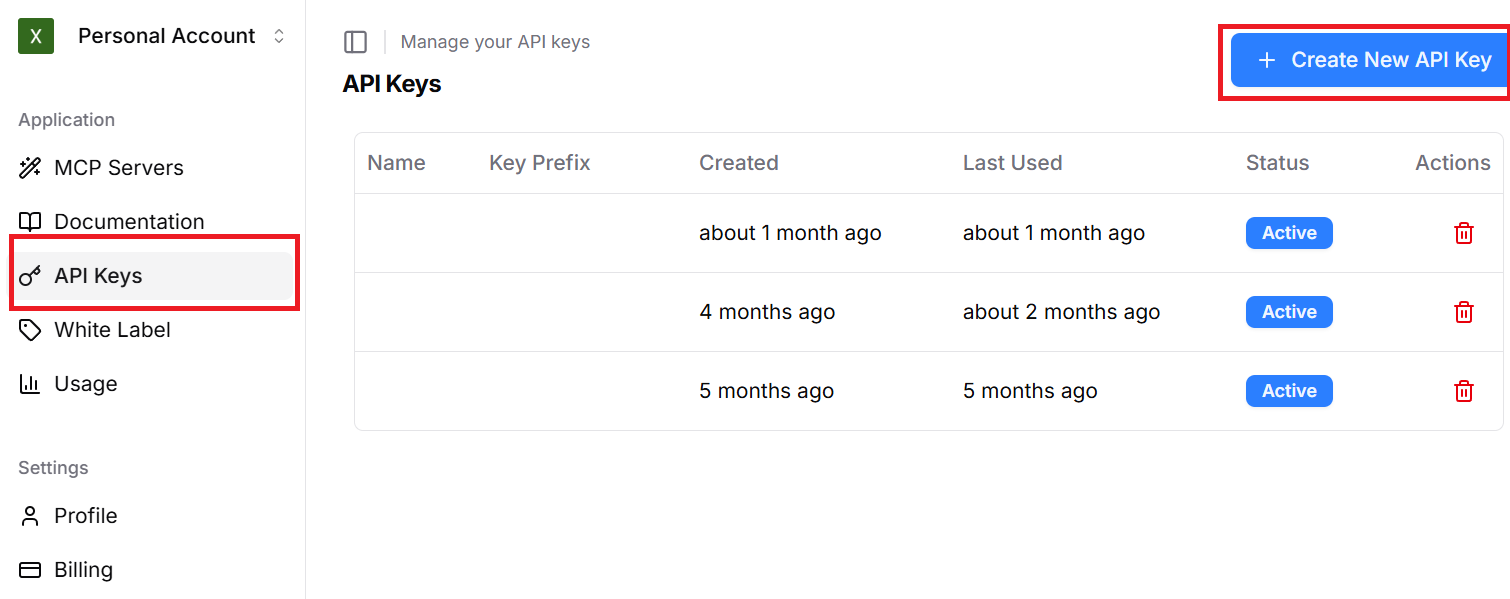

Next, get an API key from your Klavis AI account, then set both API keys as environment variables:

```python
import os

os.environ["OPENAI_API_KEY"] = "your_openai_api_key"
os.environ["KLAVIS_API_KEY"] = "your_klavis_api_key"
```
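Hard-coding keys as above is fine for a quick notebook demo, but it makes them easy to commit by accident. A common alternative is to export the keys in your shell (or load them from a secrets manager) and check for them at startup. A minimal sketch; `require_env` is a hypothetical helper, not part of either SDK:

```python
import os


def require_env(*names: str) -> dict[str, str]:
    """Return the requested environment variables, failing fast if any are missing or empty."""
    missing = [name for name in names if not os.environ.get(name)]
    if missing:
        raise RuntimeError(f"Missing required environment variables: {', '.join(missing)}")
    return {name: os.environ[name] for name in names}


# Keys are expected to be exported beforehand, e.g. `export OPENAI_API_KEY=...`,
# rather than written into the source file.
```

Calling `require_env("OPENAI_API_KEY", "KLAVIS_API_KEY")` at the top of a script surfaces a missing key immediately, instead of as an authentication error partway through an agent run.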

Creating a Strata MCP Server

Klavis AI's flagship product, Strata, addresses the "tool overload" problem through progressive discovery. Instead of overwhelming an agent with hundreds of tools at once, Strata guides it through categories step by step. According to Klavis AI's own benchmarks, this approach achieves a 13.4% higher pass@1 rate than the official Notion MCP server and a 15.2% higher rate than the official GitHub MCP server.
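The idea behind progressive discovery can be illustrated without any Klavis code. The sketch below is a conceptual model only, not Strata's implementation or API: a hypothetical `ToolCatalog` exposes a few navigation calls (list categories, list the actions in one category, fetch one action's schema) instead of handing the model every tool schema at once.

```python
from typing import Any


class ToolCatalog:
    """Hypothetical catalog that reveals tools one level at a time."""

    def __init__(self, tree: dict[str, dict[str, dict[str, Any]]]):
        # tree maps category -> action name -> JSON Schema for that action's arguments
        self._tree = tree

    def list_categories(self) -> list[str]:
        # Level 1: only category names enter the model's context
        return sorted(self._tree)

    def list_actions(self, category: str) -> list[str]:
        # Level 2: action names within the one category the model chose
        return sorted(self._tree[category])

    def get_action_schema(self, category: str, action: str) -> dict[str, Any]:
        # Level 3: the full argument schema for a single action
        return self._tree[category][action]


catalog = ToolCatalog({
    "gmail": {
        "search_emails": {"type": "object", "properties": {"query": {"type": "string"}}},
        "send_email": {"type": "object", "properties": {"to": {"type": "string"}}},
    },
    "slack": {
        "post_message": {"type": "object", "properties": {"channel": {"type": "string"}}},
    },
})

# The agent drills down instead of receiving every schema up front
print(catalog.list_categories())       # ['gmail', 'slack']
print(catalog.list_actions("gmail"))   # ['search_emails', 'send_email']
print(catalog.get_action_schema("slack", "post_message")["properties"])  # {'channel': {'type': 'string'}}
```

With two services the savings are trivial; the benefit of this pattern shows up when dozens of integrations expose hundreds of actions and the model only ever holds the names and the single schema on its current path.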

Here's how to create a Strata MCP server with Gmail and Slack integrations:

```python
import os
import webbrowser

from klavis import Klavis
from klavis.types import McpServerName

klavis_client = Klavis(api_key=os.getenv("KLAVIS_API_KEY"))

# Create one Strata server that bundles Gmail and Slack for this user
response = klavis_client.mcp_server.create_strata_server(
    servers=[McpServerName.GMAIL, McpServerName.SLACK],
    user_id="demo_user_123",
)

# Klavis generates the OAuth URLs; open each one so the user can grant access.
# Finish authorizing in the browser before running the agent.
if response.oauth_urls:
    for server_name, oauth_url in response.oauth_urls.items():
        webbrowser.open(oauth_url)
```

Building the Agent

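Below is a complete example of an agent that reads your five most recent emails and posts a summary to a Slack channel, using the LangChain MCP adapter. It continues from the previous snippet and connects to `response.strata_server_url`. For more details, see the official langchain-mcp-adapters documentation.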

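```python
import asyncio

from langchain_mcp_adapters.client import MultiServerMCPClient
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent

llm = ChatOpenAI(model="gpt-5")

# Connect to the Strata server created in the previous step
mcp_client = MultiServerMCPClient({
    "strata": {
        "transport": "streamable_http",
        "url": response.strata_server_url,
    }
})


async def main():
    # Fetch tool definitions from the MCP server as LangChain tools
    tools = await mcp_client.get_tools()
    agent = create_react_agent(llm, tools)

    # Execute a multi-step workflow in a single invocation
    result = await agent.ainvoke({
        "messages": [{
            "role": "user",
            "content": "Check my latest 5 emails and summarize them in Slack #general",
        }]
    })
    # The last message holds the agent's final answer
    print(result["messages"][-1].content)


# In a Jupyter notebook, use `await main()` instead
asyncio.run(main())
```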

This single invocation chains multiple actions: retrieves emails from Gmail, analyzes content, and posts a summary to Slack—all without custom API code.
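Conceptually, the prebuilt ReAct agent runs a loop: the model either requests a tool call or returns a final answer, and each tool result is appended to the message history before the model is called again. The stdlib-only sketch below mimics that loop with a scripted stand-in for the LLM. `scripted_model`, `fetch_latest_emails`, and `post_to_slack` are hypothetical, and the real `create_react_agent` is a LangGraph graph, not this function.

```python
from typing import Any, Callable


# Hypothetical tools standing in for the Gmail and Slack MCP tools
def fetch_latest_emails(limit: int) -> str:
    return f"{limit} emails: invoice due, team lunch, deploy notice"


def post_to_slack(channel: str, text: str) -> str:
    return f"posted to {channel}: {text}"


TOOLS: dict[str, Callable[..., str]] = {
    "fetch_latest_emails": fetch_latest_emails,
    "post_to_slack": post_to_slack,
}


def scripted_model(messages: list[dict[str, Any]]) -> dict[str, Any]:
    """Stand-in for the LLM: picks the next step based on the tool results seen so far."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"tool": "fetch_latest_emails", "args": {"limit": 5}}
    if len(tool_results) == 1:
        summary = "Summary of " + tool_results[0]["content"]
        return {"tool": "post_to_slack", "args": {"channel": "#general", "text": summary}}
    return {"final": "Posted the email summary to #general."}


def run_react_loop(user_message: str, max_steps: int = 5) -> list[dict[str, Any]]:
    messages = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        decision = scripted_model(messages)
        if "final" in decision:
            # No tool requested: the model's answer ends the loop
            messages.append({"role": "assistant", "content": decision["final"]})
            break
        # Run the requested tool and feed its result back to the model.
        # (A real agent also records the assistant's tool-call message; omitted here.)
        result = TOOLS[decision["tool"]](**decision["args"])
        messages.append({"role": "tool", "name": decision["tool"], "content": result})
    return messages


history = run_react_loop("Check my latest 5 emails and summarize them in Slack #general")
for message in history:
    print(message["role"], "->", message["content"])
```

The `max_steps` cap plays the same role as LangGraph's recursion limit: it stops a model that keeps requesting tools from looping forever.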

Klavis AI MCP Integration Ecosystem

Klavis AI provides production-ready MCP servers with enterprise OAuth support:

| Category | Integrations | Primary Use Cases |
|---|---|---|
| Development Tools | GitHub, GitLab, Linear, Jira | Issue tracking, PR management, sprint planning |
| Communication | Slack, Discord, Gmail, Outlook | Team notifications, email automation, message routing |
| Business Applications | Salesforce, HubSpot, Notion, Airtable | CRM updates, contact management, knowledge bases |
| Cloud & Storage | Google Drive, Dropbox, Box, Supabase | File operations, document retrieval, database queries |
| Analytics & Monitoring | PostHog, Mixpanel, PagerDuty | Event tracking, user behavior analysis, incident management |

Each integration includes built-in OAuth, so you don't have to build and maintain an authentication flow for every service yourself.

Real-World Use Cases: Where LangChain MCP Adapters Excel

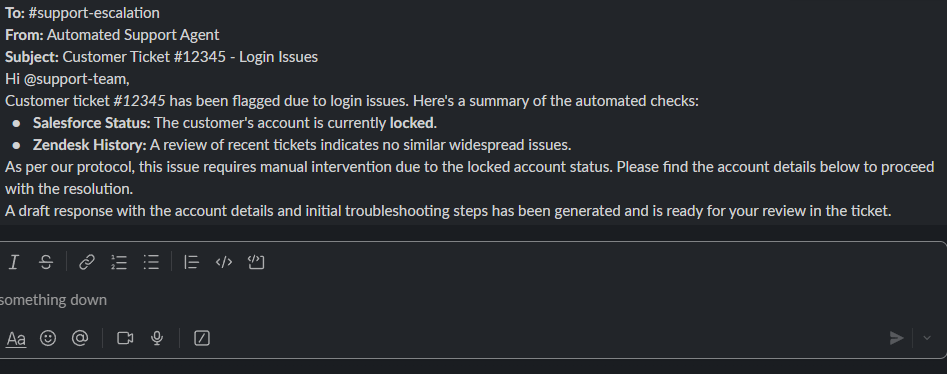

Use Case 1: Automated Customer Support Escalation System

Challenge: A SaaS company receives hundreds of support tickets daily. Support engineers need to check multiple systems (CRM, product database, billing system) to resolve issues, causing delays and inconsistent responses.

Solution with Klavis AI MCP:

```python
# Create a Strata MCP server with customer support tools
klavis_client = Klavis(api_key=os.getenv("KLAVIS_API_KEY"))

response = klavis_client.mcp_server.create_strata_server(
    servers=[
        McpServerName.SALESFORCE,  # Customer records
        McpServerName.ZENDESK,     # Ticket system
        McpServerName.SLACK,       # Team notifications
    ],
    user_id="support_agent_001",
)

# Authorize any integrations that still need OAuth
for oauth_url in (response.oauth_urls or {}).values():
    webbrowser.open(oauth_url)

# Load this server's tools (run inside an async function or a notebook cell)
mcp_client = MultiServerMCPClient({
    "strata": {"transport": "streamable_http", "url": response.strata_server_url}
})
tools = await mcp_client.get_tools()

# Build the support agent
agent = create_react_agent(llm, tools)

# Agent workflow
ticket_response = await agent.ainvoke({
    "messages": [{
        "role": "user",
        "content": """
        Customer ticket #12345 reports login issues.
        1. Check Salesforce for customer account status
        2. Review recent tickets in Zendesk for similar issues
        3. If account is locked or has billing issues, notify #support-escalation on Slack
        4. Generate response with account details and resolution steps
        """,
    }]
})
```

Results:

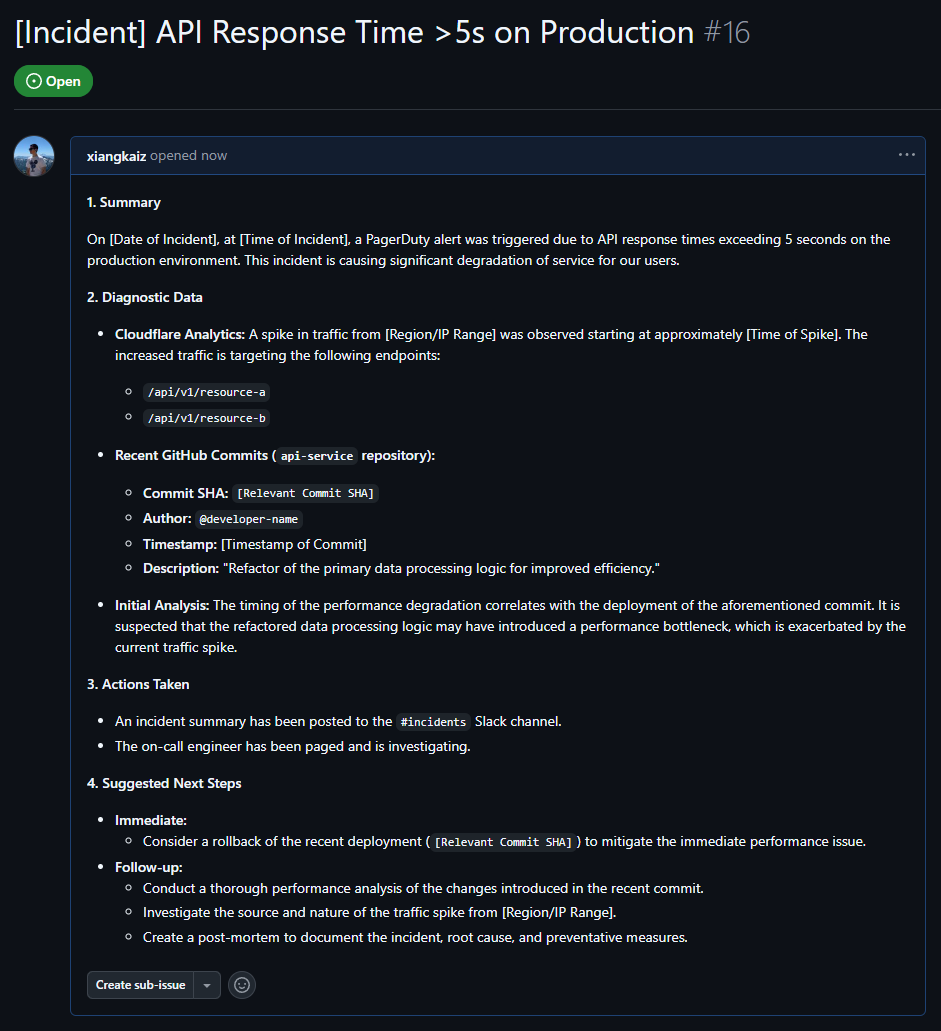
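Step 3 of the prompt leaves the escalation decision to the model. When a rule like this must hold every time, one option is to encode it as a deterministic check that your application (or a small custom tool) applies to the account data the agent retrieves. A hypothetical sketch; the `AccountStatus` fields are illustrative and do not reflect Salesforce's actual schema:

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AccountStatus:
    """Illustrative subset of account data an agent might pull from a CRM."""
    account_id: str
    is_locked: bool
    has_billing_issue: bool


def escalation_reason(status: AccountStatus) -> Optional[str]:
    """Return why a ticket should go to #support-escalation, or None if it should not."""
    reasons = []
    if status.is_locked:
        reasons.append("account locked")
    if status.has_billing_issue:
        reasons.append("billing issue")
    return ", ".join(reasons) if reasons else None


print(escalation_reason(AccountStatus("ACME-1", is_locked=True, has_billing_issue=False)))   # account locked
print(escalation_reason(AccountStatus("ACME-2", is_locked=False, has_billing_issue=False)))  # None
```

Keeping the rule in code makes escalations testable and auditable, while the agent still handles the open-ended parts such as reading ticket history and drafting the reply.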

Use Case 2: DevOps Incident Management and Resolution

Challenge: When production incidents occur, DevOps teams scramble across GitHub (code), PagerDuty (alerts), Slack (coordination), and cloud platforms (infrastructure). Manual context-switching wastes critical minutes.

Solution with Klavis AI MCP:

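```python
# Multi-service DevOps agent
response = klavis_client.mcp_server.create_strata_server(
    servers=[
        McpServerName.GITHUB,
        McpServerName.PAGERDUTY,
        McpServerName.SLACK,
        McpServerName.CLOUDFLARE,
    ],
    user_id="devops_team",
)

# Authorize any integrations that still need OAuth
for oauth_url in (response.oauth_urls or {}).values():
    webbrowser.open(oauth_url)

# Load this server's tools (run inside an async function or a notebook cell)
mcp_client = MultiServerMCPClient({
    "strata": {"transport": "streamable_http", "url": response.strata_server_url}
})
tools = await mcp_client.get_tools()
agent = create_react_agent(llm, tools)

# Automated incident response
incident_response = await agent.ainvoke({
    "messages": [{
        "role": "user",
        "content": """
        PagerDuty alert: API response time >5s on production.
        1. Check recent GitHub commits to api-service repository
        2. Review Cloudflare analytics for traffic patterns
        3. Post incident summary to #incidents Slack channel
        4. Create GitHub issue with diagnostic data
        5. Suggest rollback if recent deployment detected
        """,
    }]
})
```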
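Step 5 asks the model to judge whether a change is recent enough to suspect. If you want that judgment to be consistent, you could compute it in code from the commit timestamps the GitHub tool returns, using commits as a proxy for deployments. A hypothetical sketch; the `Commit` shape and the 60-minute window are assumptions, not values from GitHub or PagerDuty:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Commit:
    sha: str
    committed_at: datetime  # timezone-aware timestamp


def rollback_candidates(commits: list[Commit], alert_time: datetime,
                        window: timedelta = timedelta(minutes=60)) -> list[str]:
    """Return SHAs of commits that landed within `window` before the alert fired."""
    return [
        c.sha for c in commits
        if alert_time - window <= c.committed_at <= alert_time
    ]


alert = datetime(2025, 6, 1, 12, 0, tzinfo=timezone.utc)
commits = [
    Commit("a1b2c3", alert - timedelta(minutes=20)),  # inside the window
    Commit("d4e5f6", alert - timedelta(hours=5)),     # too old to suspect
]
print(rollback_candidates(commits, alert))  # ['a1b2c3']
```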

Results:

Use Case 3: Sales Intelligence and Lead Enrichment Pipeline

Challenge: Sales teams manually research leads across LinkedIn, company websites, CRM systems, and email threads—spending 3-4 hours per qualified lead on data gathering rather than actual selling.

Solution with Klavis AI MCP:

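```python
# Sales intelligence agent
response = klavis_client.mcp_server.create_strata_server(
    servers=[
        McpServerName.SALESFORCE,            # CRM
        McpServerName.GMAIL,                 # Email history
        McpServerName.HUBSPOT,               # Marketing data
        McpServerName.FIRECRAWL_WEB_SEARCH,  # Web research
    ],
    user_id="sales_rep_042",
)

# Authorize any integrations that still need OAuth
for oauth_url in (response.oauth_urls or {}).values():
    webbrowser.open(oauth_url)

# Load this server's tools (run inside an async function or a notebook cell)
mcp_client = MultiServerMCPClient({
    "strata": {"transport": "streamable_http", "url": response.strata_server_url}
})
tools = await mcp_client.get_tools()
agent = create_react_agent(llm, tools)

# Automated lead enrichment
lead_analysis = await agent.ainvoke({
    "messages": [{
        "role": "user",
        "content": """
        New lead: xxxxxx@techcorp.com from TechCorp
        1. Search web for TechCorp recent news and funding
        2. Check Gmail for any previous communication history
        3. Query Salesforce for existing TechCorp contacts
        4. Check HubSpot for TechCorp website activity
        5. Draft personalized outreach email based on findings
        """,
    }]
})
```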

Results:

Hi John,

I hope this email finds you well.

I saw the recent news about TechCorp's Series B funding – congratulations to you and the team on that significant milestone. It sounds like you're in a period of exciting growth, especially with your expansion into the APAC market.

I'm reaching out because I noticed someone from TechCorp spent some time on our pricing and integration pages on HubSpot yesterday. My understanding from your LinkedIn profile is that you oversee the new market expansion initiatives, and I imagine that scaling your team's operational efficiency is a top priority for you right now.

I also saw in our system that my colleague, Jane Doe, had a brief conversation with Sarah Jenkins from your product team a few months ago.

Given your focus on expansion, I thought you might be interested in how we've helped other SaaS companies like [Similar Customer 1] and [Similar Customer 2] streamline their workflows by up to 30% during periods of rapid growth.

Would you be open to a brief 15-minute chat next week to explore how [Your Company Name] might be able to support TechCorp's goals?

Best regards,

[Your Name]

[Your Title]

Comparing Approaches

| Approach | Development Time | Maintenance Burden | Flexibility | Ecosystem Size |
|---|---|---|---|---|
| LangChain MCP Adapters | Low | Low | High | 100+ servers |
| Direct API Integration | High | High | High | Unlimited |
| Native Function Calling | Medium | Medium | Medium | Provider-limited |
| Custom Frameworks | Medium | High | Medium | Limited |

MCP connects AI apps to external context by building on top of function calling, the primary way LLMs call APIs, rather than replacing it. What the protocol adds is a standard way for tools to be described, discovered, and invoked, which makes development simpler and more consistent across providers and servers.
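To see what "building on top of function calling" means in practice, compare the two formats. An MCP server describes each tool with a `name`, a `description`, and an `inputSchema` (JSON Schema); OpenAI-style function calling expects essentially the same information under different keys. The sketch below converts one into the other. The `search_emails` tool is hypothetical, and in the LangChain stack the adapter and the chat-model integration handle this translation for you rather than a function like this one.

```python
import json
from typing import Any


def mcp_tool_to_openai_function(tool: dict[str, Any]) -> dict[str, Any]:
    """Map an MCP tool definition onto an OpenAI Chat Completions `tools` entry."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # Both formats describe arguments with JSON Schema, so it carries over unchanged
            "parameters": tool["inputSchema"],
        },
    }


# A tool as an MCP server might list it in a tools/list response (hypothetical tool)
mcp_tool = {
    "name": "search_emails",
    "description": "Search the user's mailbox",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}, "limit": {"type": "integer"}},
        "required": ["query"],
    },
}

print(json.dumps(mcp_tool_to_openai_function(mcp_tool), indent=2))
```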

Frequently Asked Questions

How does LangChain MCP differ from native function calling?

MCP complements frameworks like LangChain by standardizing the model-facing side of tool use: how tools are described and invoked. With MCP, an agent can discover and use any tool that an MCP server provides, even if the agent's code didn't explicitly include that tool ahead of time. With native function calling, you define each tool's schema in your application code up front; with MCP, the server publishes those schemas and the client discovers them at runtime.
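Dynamic discovery runs over JSON-RPC 2.0: a client sends `tools/list` to learn what a server offers, then `tools/call` with a tool name and arguments. The helpers below only build and parse those messages, with no transport, so the shapes are visible. It is a sketch: the tool name and arguments are hypothetical, and the initialization handshake that precedes these requests is omitted. In practice, the MCP SDK that `langchain-mcp-adapters` builds on handles this exchange.

```python
import itertools
import json
from typing import Any, Optional

_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict[str, Any]] = None) -> dict[str, Any]:
    """Build a JSON-RPC 2.0 request object with a fresh id."""
    request: dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


def tool_names(list_response: dict[str, Any]) -> list[str]:
    """Extract tool names from a tools/list response."""
    return [tool["name"] for tool in list_response["result"]["tools"]]


# 1. Ask the server what it offers
list_request = jsonrpc_request("tools/list")

# 2. A response in the shape an MCP server returns (contents are hypothetical)
list_response = {
    "jsonrpc": "2.0",
    "id": list_request["id"],
    "result": {"tools": [
        {"name": "search_emails", "description": "Search the mailbox",
         "inputSchema": {"type": "object", "properties": {"query": {"type": "string"}}}},
    ]},
}

# 3. Call a tool that was discovered at runtime, with arguments matching its inputSchema
discovered = tool_names(list_response)
call_request = jsonrpc_request("tools/call", {"name": discovered[0], "arguments": {"query": "invoice"}})
print(json.dumps(call_request))
```

Nothing in the client code names `search_emails` ahead of time; the name comes from the server's response, which is the practical difference from predefined function-calling schemas.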

Can I use LangChain MCP adapters with non-OpenAI models?

Yes. The adapters work with any LangChain chat model that supports tool calling, such as Anthropic's Claude, Google's Gemini, or tool-calling-capable open-source models served via Ollama. The protocol is model-agnostic by design.

What's the performance overhead compared to direct APIs?

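Usually small relative to the rest of the workflow. A remote MCP server adds a network hop (JSON-RPC over HTTP) in front of the underlying API, so each call costs roughly one extra round trip plus the server's own processing time. That is typically dwarfed by the upstream API's latency and the LLM's generation time, but the exact figure depends on where the server runs relative to your agent and to the upstream service.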
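If latency matters for your use case, it is worth measuring the overhead directly. A minimal, stdlib-only sketch: `median_latency_ms` and `overhead_ms` are hypothetical helpers, and `direct_call` and `mcp_call` are stand-ins you would replace with a direct API call and the same operation through an MCP tool. LangChain's MCP tools are async, so a real measurement would wrap `tool.ainvoke(...)` accordingly (for example with an async variant of these helpers).

```python
import statistics
import time
from typing import Callable


def median_latency_ms(call: Callable[[], object], runs: int = 20, warmup: int = 2) -> float:
    """Time `call` repeatedly and return the median wall-clock latency in milliseconds."""
    for _ in range(warmup):
        call()  # warm up connections and caches so they don't skew the first samples
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)


def overhead_ms(direct: Callable[[], object], via_mcp: Callable[[], object], runs: int = 20) -> float:
    """Median extra latency of the MCP path over the direct path (may be negative due to noise)."""
    return median_latency_ms(via_mcp, runs) - median_latency_ms(direct, runs)


# Hypothetical stand-ins that simply sleep; replace with real calls to measure your setup
def direct_call() -> None:
    time.sleep(0.010)


def mcp_call() -> None:
    time.sleep(0.012)


print(f"median overhead: {overhead_ms(direct_call, mcp_call):.1f} ms")
```

Using the median rather than the mean keeps a few slow outliers, such as a cold connection, from dominating the result.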