Your customer support team is likely drowning in a sea of tickets, context-switching between a dozen tabs to resolve a single issue. The result? Slow response times and frustrated customers. Standard chatbots barely scratch the surface. The real breakthrough comes from connecting Zendesk directly to a Large Language Model (LLM), creating an AI agent that doesn't just answer questions but takes action. This guide walks you through why this integration is critical, how to architect it using the Model Context Protocol (MCP), and which practical, verifiable use cases you can build.

The High Cost of Context Switching: Why Manual Support Fails at Scale

In customer support, speed and efficiency are everything. According to Zendesk's 2024 CX Trends report, 73% of customers say that quick resolution time is their top priority. Yet, the reality for most support agents is a frantic scramble for information. A single ticket about a billing error might require them to:

- Open Zendesk to read the customer's complaint.

- Switch to Stripe or another payment processor to verify transaction history.

- Check an internal admin panel to see subscription status.

- Potentially open Jira to see if it’s a known bug.

- Finally, return to Zendesk to craft a response.

This manual process is slow, error-prone, and doesn't scale. The financial impact is staggering. Research shows that bad customer experiences put a potential $3.7 trillion in revenue at risk globally. The core issue isn't a lack of effort from agents; it's a broken workflow.

As a Harvard Business Review study famously pointed out, customers value effort reduction far more than being "delighted". By that measure, an LLM-powered agent that resolves an issue in 30 seconds serves the customer better than a human agent who takes 30 minutes, no matter how friendly they are.

| Metric | Manual Support Workflow | LLM-Augmented Workflow | Improvement |
|---|---|---|---|
| Avg. Resolution Time | 24-48 hours (or more) | < 5 minutes for common issues | >95% Reduction |
| Agent Context Switches | 5-10 apps per ticket | 0 (LLM accesses tools directly) | 100% Reduction |
| First Response Time | Hours | Seconds | Near-Instant |
| Operational Hours | 8-12 hours/day | 24/7/365 | 2-3x Coverage |
| Cost per Interaction | High (agent salary + tools) | Low (API calls + infrastructure) | Significant |

This data makes the case clear: augmenting your support stack with intelligent, action-oriented AI is no longer a luxury—it’s a competitive necessity.

The Bridge to Intelligence: Integrating Zendesk with an LLM Core

So, how do you give an LLM the tools it needs to interact with Zendesk? A common first thought is to simply call the Zendesk REST API from a Python script. While feasible for simple tasks, this approach quickly becomes brittle. You end up writing tons of glue code, managing authentication complexity, and facing a massive challenge: how do you teach the LLM what tools are available and when to use them?
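
To make the direct approach concrete, here is a minimal sketch of the glue code involved in fetching a single ticket. It only builds the request and does not send it. The subdomain, email address, token, and ticket ID are placeholders, and the helper name `build_ticket_request` is ours, not part of any SDK.

```python
import base64
import urllib.request


def build_ticket_request(
    subdomain: str, email: str, api_token: str, ticket_id: int
) -> urllib.request.Request:
    """Build (but do not send) a GET request for one Zendesk ticket."""
    url = f"https://{subdomain}.zendesk.com/api/v2/tickets/{ticket_id}.json"
    # Zendesk API-token auth is HTTP Basic with "<email>/token" as the username.
    credentials = f"{email}/token:{api_token}".encode("utf-8")
    auth_header = "Basic " + base64.b64encode(credentials).decode("ascii")
    return urllib.request.Request(
        url,
        headers={"Authorization": auth_header, "Accept": "application/json"},
    )


# Placeholder values for illustration only.
request = build_ticket_request("example", "agent@example.com", "PLACEHOLDER_TOKEN", 123)
print(request.get_method(), request.full_url)
```

Every additional endpoint needs similar hand-written plumbing, and none of it tells the model which operations exist or when to call them.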

This is where the Model Context Protocol (MCP) becomes essential. MCP is an open standard designed to let LLMs securely and reliably connect to external tools and data sources. Think of it as a universal adapter for AI. Instead of just exposing an API endpoint, an MCP server provides the LLM with a structured, discoverable set of capabilities.
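
As a rough illustration of what "structured and discoverable" means, the sketch below shows a tool description in the general shape MCP uses when a server lists its tools: a name, a human-readable description, and a JSON Schema for the inputs. The tool name `zendesk_create_ticket` and its fields are hypothetical and are not taken from any real server.

```python
# Hypothetical tool descriptor; the name and properties are illustrative only.
ZENDESK_CREATE_TICKET_TOOL = {
    "name": "zendesk_create_ticket",
    "description": "Create a Zendesk ticket on behalf of a requester.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "subject": {"type": "string"},
            "body": {"type": "string"},
            "requester_email": {"type": "string"},
        },
        "required": ["subject", "body"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the required argument names that a proposed call leaves out."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


# A call that forgot the ticket body is caught before anything is executed.
print(missing_arguments(ZENDESK_CREATE_TICKET_TOOL, {"subject": "API outage"}))
```

Because the schema travels with the tool, the model can read what a tool expects instead of relying on documentation baked into a prompt.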

However, even with MCP, a problem arises when you connect many tools. Presenting an LLM with hundreds of possible actions at once (from Zendesk, Jira, Stripe, etc.) can lead to "tool overload," causing confusion and incorrect actions.

This is the problem Klavis Strata is designed to solve. Strata is a unified MCP server that guides the AI agent through a progressive discovery process:

- Intent Recognition: The LLM first identifies the user's high-level goal (e.g., "billing issue").

- Tool Navigation: Strata guides the model to the relevant category of tools (e.g., "Payment Tools").

- Action Selection: The model is then presented with specific actions within that category (e.g., `find_charge`, `process_refund`).

- Execution: Only then is the full API detail revealed for execution.

This tiered approach dramatically improves accuracy and allows developers to build sophisticated, multi-app agents without overwhelming the model. Instead of building and managing dozens of individual connections and authentication flows, you can use a single, secure, and intelligent gateway.
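
The tiered idea can be sketched with a toy in-memory catalog. This is not Strata's API or implementation; it only illustrates revealing categories first, then action names, then parameter details. The action names `find_charge` and `process_refund` come from the example above, while `create_ticket`, the category "Ticketing Tools", and all parameter names are assumptions.

```python
# Toy catalog: category -> action -> details. Illustrative data only.
CATALOG = {
    "Payment Tools": {
        "find_charge": {"params": ["customer_email"]},
        "process_refund": {"params": ["charge_id", "amount"]},
    },
    "Ticketing Tools": {
        "create_ticket": {"params": ["subject", "body"]},
    },
}


def list_categories(catalog: dict) -> list[str]:
    """Step 1: expose only the category names."""
    return sorted(catalog)


def list_actions(catalog: dict, category: str) -> list[str]:
    """Step 2: expose action names inside one chosen category."""
    return sorted(catalog[category])


def describe_action(catalog: dict, category: str, action: str) -> dict:
    """Step 3: reveal full details for the single action about to run."""
    return catalog[category][action]


print(list_categories(CATALOG))
print(list_actions(CATALOG, "Payment Tools"))
print(describe_action(CATALOG, "Payment Tools", "process_refund"))
```

At each step the model sees a short list rather than every parameter of every tool, which is the point of progressive discovery.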

Your Step-by-Step Integration Guide

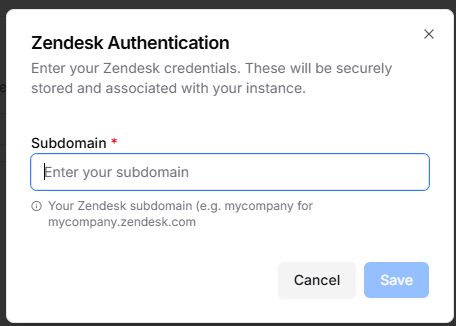

- Log in to Klavis AI and go to your account.

- Find the Zendesk integration, and then click Authorize.

- Enter your Zendesk subdomain and follow the OAuth flow to connect your account. Once authorized, the integration shows an "Authorized" status.

- Click the button on the page to connect to the agent of your choice. You can also get the MCP URL and connection token by clicking Add to other clients.
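
If you wire the MCP URL into your own client rather than a prebuilt agent, the first thing that client typically asks a server for is its tool list. The sketch below builds such a JSON-RPC `tools/list` request without sending it. The URL is a placeholder, and passing the connection token as a Bearer header is an assumption; check the platform's documentation for how the token is actually supplied. A real MCP session also begins with an `initialize` handshake, which is omitted here.

```python
import json
import urllib.request


def build_tools_list_request(
    mcp_url: str, token: str, request_id: int = 1
) -> urllib.request.Request:
    """Build (but do not send) a JSON-RPC 2.0 request asking for the tool list."""
    payload = {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}
    return urllib.request.Request(
        mcp_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json, text/event-stream",
            # Assumption: the connection token is sent as a Bearer token.
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


# Placeholder URL and token for illustration only.
tools_request = build_tools_list_request("https://mcp.example.invalid/mcp", "PLACEHOLDER_TOKEN")
print(tools_request.get_method(), json.loads(tools_request.data)["method"])
```

In practice an MCP client library handles the handshake and transport for you; the sketch only shows what travels over the wire.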

From Theory to Practice: Actionable LLM Agent Use cases for Zendesk

Let's move beyond the abstract and look at what your intelligent Zendesk agent can actually do. These are not simple Q&A bots; these are autonomous agents that execute workflows across different applications. The key is to start with clear, simple commands that trigger powerful, behind-the-scenes actions.

Use Case 1: Capture Actionable Requests from Team Chat

Important customer feedback and urgent requests often originate in internal chat applications like Slack or Microsoft Teams. These valuable insights can easily get lost in a sea of conversation. An LLM agent can act as a bridge, ensuring every important request is formally tracked.

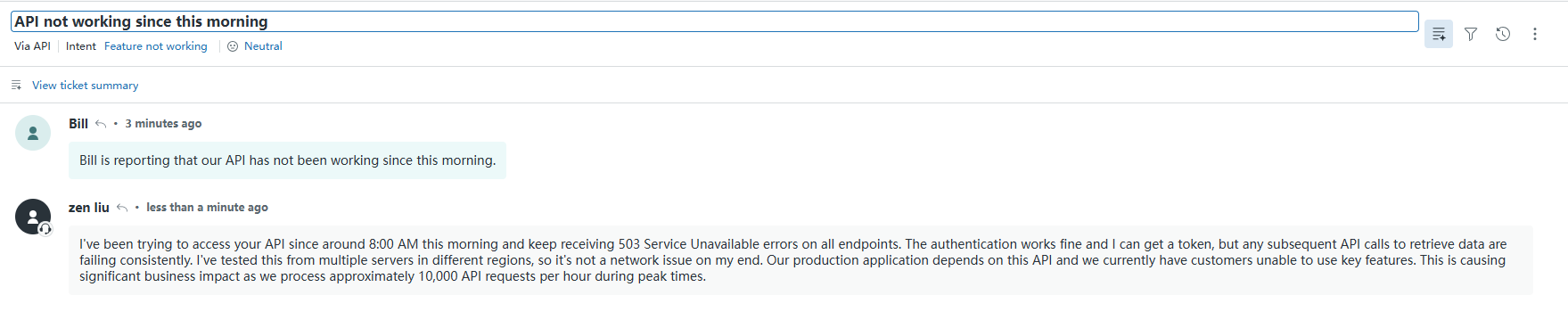

Scenario: A sales team member is talking to a high-value client in a shared Slack channel. The client mentions a bug impacting them. With an LLM agent, a support agent in the channel can flag it instantly.

Prompt:

Create a new customer Bill in Zendesk. Create a ticket for Bill. Bill is complaining our API is not working since this morning. Also summarize the Slack chat and add it to the ticket.

Result: A Zendesk ticket is created for Bill, and the detailed problem description is automatically summarized from the Slack chat.
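
Behind a prompt like this, the agent ultimately has to produce something like a Zendesk ticket-creation payload. The sketch below shows the general shape of a body for `POST /api/v2/tickets.json`, where an inline `requester` lets Zendesk create the user if they do not exist yet. The email address, subject, and summary text are made-up placeholders, and this is not a description of what the MCP server sends internally.

```python
import json


def build_ticket_payload(
    requester_name: str, requester_email: str, subject: str, chat_summary: str
) -> dict:
    """Build the JSON body for creating a ticket with an inline requester."""
    return {
        "ticket": {
            "subject": subject,
            # The first comment becomes the ticket description.
            "comment": {"body": chat_summary},
            "requester": {"name": requester_name, "email": requester_email},
        }
    }


# Placeholder values standing in for what the agent extracts from the chat.
payload = build_ticket_payload(
    "Bill",
    "bill@example.com",
    "API not working since this morning",
    "Summary of Slack thread: customer reports API failures since this morning.",
)
print(json.dumps(payload, indent=2))
```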

Use Case 2: Escalate Customer Bugs to Engineering in Seconds

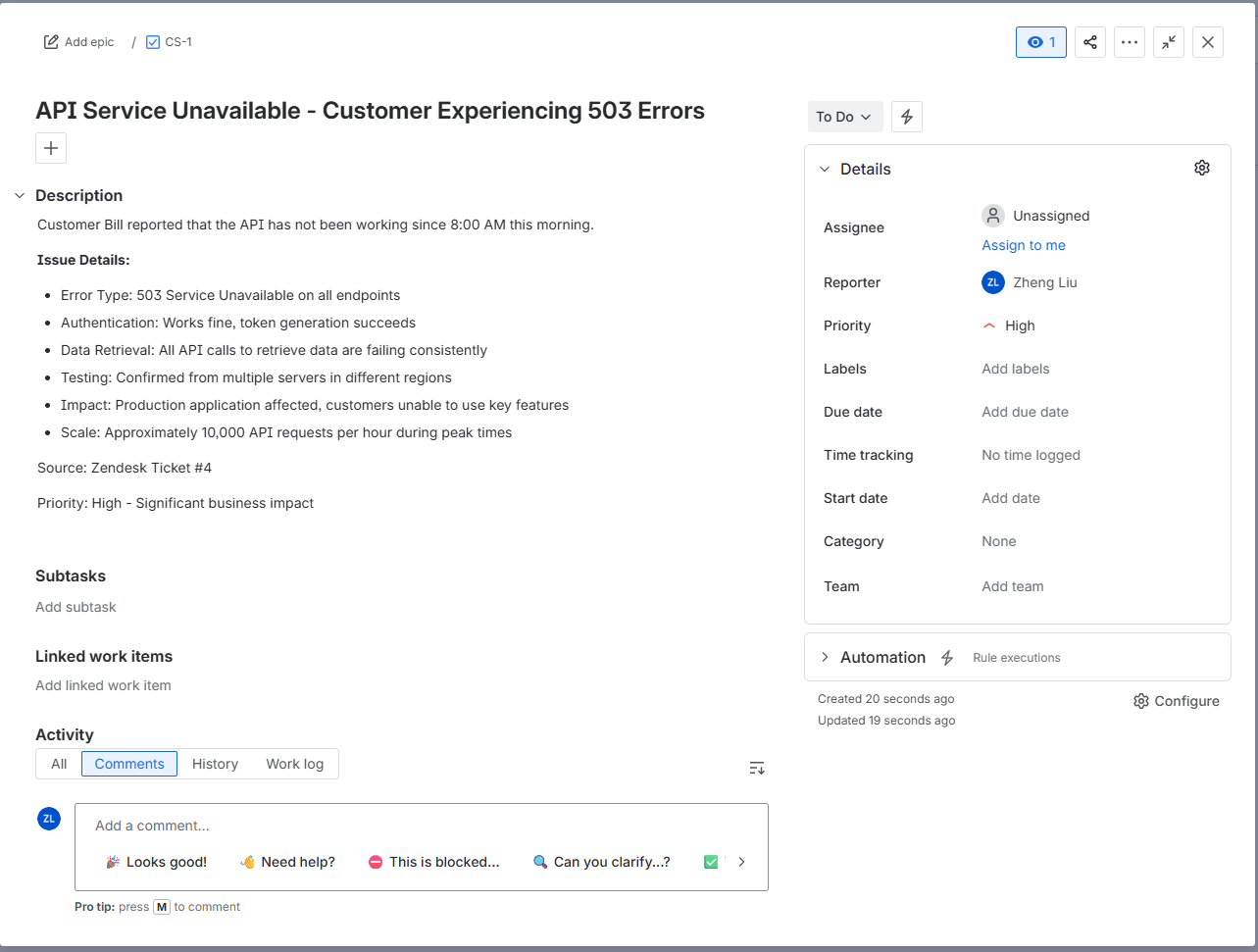

One of the most common—and manually intensive—workflows is escalating a customer-reported bug from the support team to the engineering team. This often involves tedious copy-pasting, which can lead to lost context and delays. This is a textbook problem solved by LLM-powered automation.

Scenario: A support agent confirms that a customer's issue is a genuine product bug. They need to create a Jira ticket so the engineering team can investigate.

Prompt:

Summarize the zendesk tickets for Bill, and create a ticket in Jira for Bill's requests.

Result: A summary of Bill's Zendesk tickets is generated, and a Jira ticket is created with all relevant details.
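
For a sense of what the Jira side of this handoff involves, here is a minimal sketch of an issue-creation body in the shape accepted by Jira's `POST /rest/api/2/issue` endpoint. The project key `SUP`, the issue type, and the ticket IDs are assumptions for illustration; your Jira instance may require additional fields.

```python
def build_jira_issue(
    project_key: str, summary: str, description: str, zendesk_ticket_ids: list[int]
) -> dict:
    """Build a Jira issue body that links back to the source Zendesk tickets."""
    references = ", ".join(f"#{ticket_id}" for ticket_id in zendesk_ticket_ids)
    return {
        "fields": {
            "project": {"key": project_key},
            "summary": summary,
            # Keep the support context with the bug so nothing is lost in the handoff.
            "description": f"{description}\n\nZendesk tickets: {references}",
            "issuetype": {"name": "Bug"},
        }
    }


# Hypothetical project key and ticket IDs.
issue = build_jira_issue(
    "SUP",
    "API not working for customer Bill",
    "Customer reports API failures since this morning.",
    [101, 102],
)
print(issue["fields"]["description"])
```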

Use Case 3: Generate On-Demand Intelligence Reports

Support managers and product leads need to stay on top of trends. While dashboards are useful, they are often rigid. An LLM agent can act as a dynamic data analyst, answering natural language questions about your support operations by fetching and summarizing live data.

Scenario: A product manager wants to understand the most pressing issues that customers faced over the past week to prepare for a planning meeting.

Prompt:

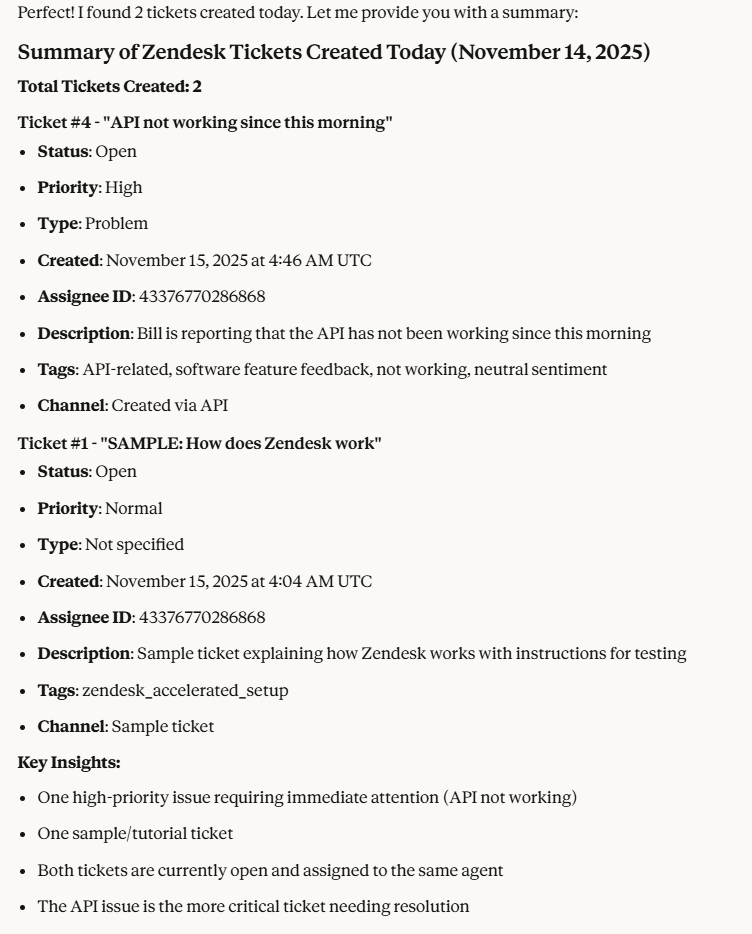

Summarize the tickets we received today.

Result: The LLM agent generates a summary report highlighting the key issues.
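
To answer a question like this, the agent first needs the relevant tickets. One plausible route is Zendesk's search endpoint with a date filter; the sketch below only builds the URL. The subdomain and date are placeholders, and whether an MCP server uses search or another endpoint for this is an assumption.

```python
from datetime import date
from urllib.parse import urlencode


def build_ticket_search_url(subdomain: str, since: date) -> str:
    """Build a Zendesk search URL for tickets created on or after a date."""
    query = f"type:ticket created>={since.isoformat()}"
    base = f"https://{subdomain}.zendesk.com/api/v2/search.json"
    return f"{base}?{urlencode({'query': query})}"


# Placeholder subdomain and date for illustration only.
search_url = build_ticket_search_url("example", date(2024, 5, 1))
print(search_url)
```

Widening the date passed as `since` is all it takes to turn a daily digest into a weekly one.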

Frequently Asked Questions (FAQs)

1. How is this different from a standard Zendesk chatbot?

Standard chatbots are primarily conversational. They follow scripts or use basic NLP to answer questions from a knowledge base. An LLM agent connected via MCP is transactional. It has access to tools and can perform complex, multi-step actions across different applications (like processing a refund or creating a Jira ticket), not just provide information.

2. Is it secure to give an LLM access to our internal tools?

Security is paramount. This is another area where using a managed MCP server infrastructure like Klavis AI provides a major advantage. Instead of managing API keys and credentials in your agent's code, you use a secure, multi-tenant system with built-in OAuth support and user-based access controls. The LLM agent operates within a sandboxed environment, inheriting only the permissions of the authenticated user, ensuring it can't access data or perform actions it isn't authorized for.
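
The idea of inheriting only the authenticated user's permissions can be pictured as a filter applied before the model ever sees a tool. The sketch below is a conceptual illustration, not Klavis AI's mechanism; the tool names and permission strings are hypothetical.

```python
def allowed_tools(user_permissions: set[str], tool_requirements: dict[str, str]) -> list[str]:
    """Return only the tools whose required permission the user actually holds."""
    return sorted(
        name
        for name, needed in tool_requirements.items()
        if needed in user_permissions
    )


# Hypothetical mapping of tool name -> permission it requires.
TOOL_REQUIREMENTS = {
    "create_ticket": "tickets:write",
    "read_ticket": "tickets:read",
    "process_refund": "payments:write",
}

# A user who can only work with tickets never gets offered the refund tool.
print(allowed_tools({"tickets:read", "tickets:write"}, TOOL_REQUIREMENTS))
```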

3. What's the learning curve for implementing this?

While the underlying technology is advanced, the goal of platforms like Klavis AI is to abstract away the complexity. For a developer, the process involves selecting pre-built MCP server integrations (like Zendesk or Jira), configuring them through a simple UI or SDK, and then focusing on what matters: writing clear, effective prompts to guide your AI agent. The heavy lifting of authentication, protocol management, and progressive tool discovery is handled for you.