Last time we talked about Workflow Automation Platforms for LLM Developers. This time, we're diving into the world of AI agent builders—frameworks that let you create intelligent agents capable of autonomous decision-making, multi-step reasoning, and tool use.

What Makes a Good AI Agent Builder?

Before we jump into specific platforms, let's talk about what separates genuinely useful agent builders from glorified chatbot wrappers.

The fundamentals every platform needs to nail:

- Memory and context management - Your agent needs to remember past interactions and maintain state across conversations

- Tool integration - Access to external APIs, databases, and services where your actual business logic lives

- Multi-step reasoning - The ability to break complex tasks into sequences of actions

- Error handling and recovery - Because things will go wrong, and your agent needs to handle it gracefully

- Observability - You need to see what your agent is thinking and why it made specific decisions

The best platforms give you these capabilities without forcing you to reinvent the wheel every time.
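
To make those five fundamentals concrete, here's a minimal, framework-free sketch in plain Python. Everything in it is hypothetical (the `Agent` class, the `flaky_lookup` and `shout` tools, the hard-coded plan), and in a real agent the plan would come from an LLM rather than a list. The point is only to show where memory, tools, multi-step execution, retries, and a trace fit together.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Toy agent: a tool registry, a memory list, and a trace for observability."""
    tools: dict[str, Callable[[str], str]]
    memory: list[str] = field(default_factory=list)  # state kept across steps
    trace: list[str] = field(default_factory=list)   # record of what happened

    def run_step(self, tool_name: str, arg: str, max_retries: int = 2) -> str:
        """Call one tool, retrying on failure and logging every attempt."""
        for attempt in range(1, max_retries + 2):
            try:
                result = self.tools[tool_name](arg)
            except Exception as exc:
                self.trace.append(f"{tool_name}({arg!r}) failed on attempt {attempt}: {exc}")
                continue
            self.trace.append(f"{tool_name}({arg!r}) ok on attempt {attempt}")
            self.memory.append(result)
            return result
        self.trace.append(f"{tool_name}({arg!r}) abandoned")
        return f"gave up on {tool_name}"

    def run_plan(self, plan: list[tuple[str, str]]) -> list[str]:
        """Multi-step execution: run each (tool, argument) pair in order."""
        return [self.run_step(name, arg) for name, arg in plan]


# Hypothetical tools: one fails on its first call, one always works.
_calls = {"n": 0}


def flaky_lookup(query: str) -> str:
    _calls["n"] += 1
    if _calls["n"] == 1:
        raise TimeoutError("upstream timed out")
    return f"result for {query}"


def shout(text: str) -> str:
    return text.upper()


agent = Agent(tools={"lookup": flaky_lookup, "shout": shout})
results = agent.run_plan([("lookup", "pricing"), ("shout", "done")])
```

After this runs, `agent.trace` holds one failed attempt and two successful ones, which is the kind of record you want from a platform's observability layer.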

Platform Comparison: What You're Actually Getting

Here's a breakdown of the seven platforms we tested, organized by their core strengths:

| Platform | Best For | Free Tier | Learning Curve | Production Ready |
|---|---|---|---|---|
| CrewAI | Multi-agent coordination | Open source | Medium | Yes |
| LangChain | Custom agent workflows | Open source | High | Yes |
| Dify | Rapid prototyping | Open source | Low | Yes |
| AutoGPT | Autonomous research tasks | Open source | High | Experimental |
| Flowise | Visual LLM app building | Open source | Low | Yes |
| Botpress | Conversational agents | 500 LLM credits/mo | Low | Yes |
| LlamaIndex | Data-intensive applications | Open source | Medium | Yes |

The AI agent market is projected to reach $47 billion by 2030, which helps explain why every workflow tool suddenly has "agent capabilities." But not all implementations are created equal.

1. CrewAI: When Your Agents Need to Work as a Team

CrewAI takes a different approach from most frameworks. Instead of building one super-agent that tries to do everything, you create a team of specialized agents, each with a defined role and responsibilities, much like organizing a real team with a researcher, a writer, and an editor.

Pros:

- Natural workflow for complex, multi-step tasks that mirror how human teams actually work

- Framework is free and open source, with enterprise features and LLM costs paid separately

- Excellent documentation with AI-powered search and a DeepLearning.AI course

Cons:

- The multi-agent paradigm adds complexity—overkill for simple tasks

- Can get expensive quickly if you're not careful about LLM usage across multiple agents

- Less mature than LangChain in terms of edge-case handling

When to use it: Content pipelines, research workflows, or any task that naturally breaks into distinct roles. Not ideal for simple question-answering or single-purpose bots.
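
Here's a rough sketch of the role-based idea in plain Python. It is not CrewAI's API: `RoleAgent`, `run_crew`, and `fake_llm` are hypothetical names, and `fake_llm` is a stand-in that just tags its input so the hand-offs are visible. It also counts LLM calls, since every extra role is at least one extra call on your bill.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class RoleAgent:
    role: str          # e.g. "researcher"
    instruction: str   # what this role should do with its input


def run_crew(agents: list[RoleAgent], task: str,
             llm: Callable[[str], str]) -> tuple[str, int]:
    """Pass the task through each agent in order; each hand-off is one LLM call."""
    artifact = task
    calls = 0
    for agent in agents:
        prompt = f"You are the {agent.role}. {agent.instruction}\n\nInput:\n{artifact}"
        artifact = llm(prompt)
        calls += 1
    return artifact, calls


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call: prefixes the input with the acting role."""
    role = prompt.split("You are the ", 1)[1].split(".", 1)[0]
    body = prompt.split("Input:\n", 1)[1]
    return f"[{role}] {body}"


crew = [
    RoleAgent("researcher", "Collect the key facts"),
    RoleAgent("writer", "Turn the facts into a draft"),
    RoleAgent("editor", "Tighten the draft"),
]
output, llm_calls = run_crew(crew, "agent frameworks", fake_llm)
```

Three roles means three calls for a single task, before any retries or tool use. That multiplier is the reason the cost warning above matters.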

2. LangChain: Maximum Control, Maximum Complexity

LangChain is the Swiss Army knife of agent frameworks and one of the most widely adopted platforms for AI agents. It popularized a modular approach to AI development that many later frameworks have followed, giving you granular control over every aspect of your agent's behavior.

Pros:

- You can chain steps, switch memory types, and plug into APIs or vector databases with little friction

- Massive ecosystem of integrations and community-built components

- LangGraph extension provides precise control over complex, stateful workflows

- Free and open source with commercial backing from LangChain Inc.

Cons:

- Depth comes with complexity—with so many moving parts, it can take time to find the right abstraction for your use case

- Documentation can lag behind feature releases

- Significant learning curve before you're productive

When to use it: Production systems that need fine-grained control, or specific requirements that pre-built solutions can't handle. Not recommended if you just want to ship something quickly.

3. Dify: The No-Code Speed Demon

Dify is an open-source platform that prioritizes getting LLM applications deployed fast, making it accessible to non-technical users by combining Backend-as-a-Service and LLMOps concepts. It's particularly strong for teams that need to iterate quickly on agent behavior without diving into code.

Pros:

- Extremely fast to get started—minutes to first working agent

- No coding required for basic implementations

- Open source with cloud hosting options

- Good balance of simplicity and capability

Cons:

- Less customizable than code-first frameworks such as AutoGen, LangChain, or Pydantic AI

- Newer platform with smaller community than established frameworks

- May hit limitations as complexity grows

When to use it: Early-stage product validation, internal tools, or when non-technical team members need to build agents. Not for applications requiring deep customization or complex orchestration.

4. AutoGPT: Autonomous Agents That Keep Digging

AutoGPT is the wild child of AI agents—incredibly ambitious but still experimental for production use. Give it a goal, and it'll break it down into subtasks, execute them, learn from the results, and keep iterating until it succeeds (or runs out of tokens).

Pros:

- Truly autonomous—can work independently on complex, multi-step tasks

- Open source with active development community

- Great for research and experimentation with agent capabilities

Cons:

- Can burn through API credits extremely fast with its trial-and-error approach

- Prone to getting stuck in loops or going off track

- Requires significant prompt engineering and guardrails for reliable results

- Not recommended for production systems where predictability matters

When to use it: Research projects, exploring agent capabilities, or tasks where you have time and budget for extensive experimentation. Avoid for customer-facing applications or anything time-sensitive.
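
If you do experiment with a fully autonomous loop, the guardrails mentioned above can be as simple as the sketch below. This is not AutoGPT's code: `run_with_guardrails` and both planner functions are hypothetical, and the planner stands in for whatever decides the next action. It shows three cheap checks: a step limit, a token budget, and a repeated-action detector.

```python
from typing import Callable

Planner = Callable[[list[str]], tuple[str, int]]  # history -> (action, tokens used)


def run_with_guardrails(next_action: Planner, max_steps: int = 10,
                        token_budget: int = 5_000) -> tuple[str, list[str]]:
    """Run an autonomous loop until it finishes or a guardrail trips."""
    history: list[str] = []
    tokens_spent = 0
    for _ in range(max_steps):
        action, tokens = next_action(history)
        tokens_spent += tokens
        if tokens_spent > token_budget:
            return "stopped: token budget exceeded", history
        if action == "DONE":
            return "finished", history
        # Same action three times in a row is treated as a probable loop.
        if len(history) >= 2 and history[-1] == history[-2] == action:
            return "stopped: repeated action", history
        history.append(action)
    return "stopped: step limit reached", history


def stuck_planner(history: list[str]) -> tuple[str, int]:
    """Hypothetical planner that never makes progress."""
    return "search docs", 100


def healthy_planner(history: list[str]) -> tuple[str, int]:
    """Hypothetical planner that finishes after two actions."""
    steps = ["search docs", "summarize", "DONE"]
    return steps[len(history)], 100


stuck_status, stuck_history = run_with_guardrails(stuck_planner)
ok_status, ok_history = run_with_guardrails(healthy_planner)
```

The thresholds here are arbitrary. In practice you would tune them per task and log which guardrail fired.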

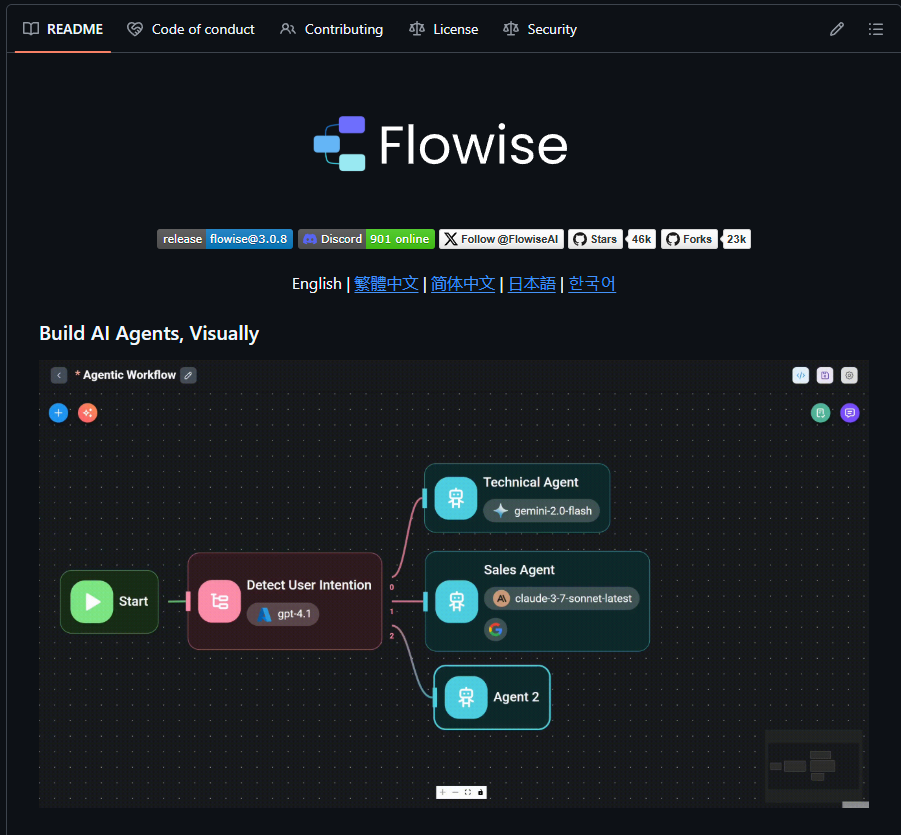

5. Flowise: The Fastest Way to Prototype LLM Apps

Flowise is an open-source visual builder for LLM chains and AI agents, offering a drag-and-drop interface that makes it the fastest path from idea to working prototype. It's best for teams experimenting with AI chatbots, retrieval workflows, and early-stage prototypes.

Pros:

- Intuitive drag-and-drop builder for chaining LLM steps, with strong community momentum

- Exceptional ease of use for AI-centric workflows with rapid prototyping and deployment

- Built on LangChain, so you get access to that ecosystem

- Self-hostable for data privacy—free if you host it yourself

Cons:

- Less suited to general automation, since it lacks broad SaaS connectors

- Reliability and governance features are light compared to enterprise tools

- The hosted free tier is limited, and cloud hosting costs grow as you scale

When to use it: Rapid prototyping, proof-of-concepts, or when your primary focus is LLM-based applications. Not ideal for production systems requiring enterprise-grade reliability.

6. Botpress: Conversational AI Without the Complexity

Botpress specializes in conversational AI and chatbots, offering a polished developer experience and a free tier to start on. It combines a visual flow editor with natural language understanding, custom actions, and integrations, and it is particularly strong for customer-facing applications.

Pros:

- Visual flow editor where each node handles focused tasks with memory, conditions, and tool connections

- Free tier includes a limited monthly allowance of LLM credits; paid plans differ by workspace limits and AI spend

Cons:

- Best suited for structured, conversational bots with clear user flows—less flexible for general agent tasks

- Can feel constraining if you need to go beyond conversational workflows

- Some advanced features require paid plans

When to use it: Customer support bots, internal help desk agents, or any conversational interface. Skip it for complex multi-step workflows that don't fit a chat paradigm.

7. LlamaIndex: Data-Intensive Agent Applications

LlamaIndex specializes in data integration and retrieval, making it ideal for applications needing robust indexing solutions. It's particularly strong when your agent needs to work with large amounts of unstructured data or implement sophisticated retrieval patterns.

Pros:

- Excellent for RAG applications and document search

- Supports numerous data sources and formats out of the box

- Open source with strong community

- Works well with other frameworks like LangChain

Cons:

- Learning curve for advanced indexing strategies

- May be overkill if you don't have complex data retrieval needs

- Requires understanding of vector databases and embeddings

When to use it: Building agents that need to search through large knowledge bases, handle document-heavy workflows, or implement sophisticated RAG patterns. Not necessary for simple conversational agents without data retrieval needs.
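
If embeddings and retrieval are new to you, the toy below shows the basic retrieve-then-prompt pattern that RAG builds on. It does not use LlamaIndex, and the "embedding" here is just a word-count vector, which is an assumption made to keep the example dependency-free. Real systems use learned dense vectors and a vector database, but the ranking step has the same shape.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (real systems use dense vectors)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str]) -> str:
    """Put the retrieved context in front of the question for the LLM."""
    context = "\n".join(retrieve(query, docs))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical knowledge base.
docs = [
    "refund policy for annual plans",
    "how to reset your password",
    "shipping times for international orders",
]
top = retrieve("reset password", docs)
prompt = build_prompt("reset password", docs)
```

Word overlap breaks down quickly on synonyms and paraphrases, which is exactly the gap that learned embeddings and the more advanced indexing strategies are meant to close.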

Choosing the Right Platform: A Decision Framework

After building dozens of agents across these platforms, here's how to actually make the choice:

Start with these questions:

What's your team's technical depth?

- Junior developers or non-technical team → Dify, Botpress, or Flowise

- Experienced developers comfortable with code → LangChain, CrewAI

- Mix of both → Platforms with visual builders and code flexibility

What's your use case complexity?

- Simple conversational agent → Botpress

- Multi-step workflows with branching logic → LangChain, Dify

- Collaborative multi-agent systems → CrewAI

- Highly autonomous research tasks → AutoGPT (with caution)

- Data-intensive retrieval → LlamaIndex

How important is data control?

- Critical (healthcare, finance, etc.) → Self-hosted options: LangChain, Flowise, Dify

- Less critical → Cloud-hosted options acceptable

What's your integration landscape?

- Need to connect to many business tools → Any framework + Klavis AI for MCP-based connectivity

- Primarily LLM-focused → Flowise, Dify

- Custom internal systems → LangChain for maximum flexibility

What's your timeline?

- Proof of concept in days → Dify, Flowise, Botpress

- Production system in weeks → CrewAI, LlamaIndex

- Complex custom solution over months → LangChain

The Integration Challenge: Connecting Agents to Real Data

Here's the dirty secret: most agent builders focus on the agent itself but leave you scrambling to connect to your actual business systems. You end up writing custom integrations for Slack, Gmail, your CRM, your database—all the places where your real work happens.

This is where the Model Context Protocol (MCP) becomes game-changing. Introduced by Anthropic in November 2024 and adopted by OpenAI and Google DeepMind, MCP provides a standardized way to connect AI agents to external tools and data sources—think USB-C for AI integrations.

Instead of building separate connectors for GitHub, Slack, Salesforce, and dozens of other services, you write one integration against the MCP standard. MCP servers expose tools through a unified protocol, so the same server works with any agent framework that has an MCP client.

This is where Klavis AI comes in. They provide production-ready MCP servers for 70+ popular business tools with built-in OAuth support, so you don't have to build and maintain authentication for every service. Whether you're building with CrewAI, LangChain, or any other framework, you can plug into Klavis AI's hosted MCP infrastructure and immediately access integrations for:

- Productivity: GitHub, GitLab, Linear, Jira, Confluence, Notion, ClickUp, Asana

- Communication: Slack, Discord, Gmail, Microsoft Teams, WhatsApp

- Business: Salesforce, HubSpot, Airtable, Close, Pipedrive

- Cloud Storage: Google Drive, Dropbox, OneDrive, Box

- And many more services

Klavis AI has direct collaborations with platforms like CrewAI and LlamaIndex, making integration particularly seamless if you're building on those platforms. Instead of spending weeks on OAuth flows and API quirks, you can focus on building the agent logic that actually differentiates your product.

Frequently Asked Questions

Q: Can I switch agent frameworks later if my needs change?

Yes, but with caveats. Your agent logic (prompts, reasoning patterns) is usually transferable, but framework-specific features like memory implementations or tool calling patterns may require refactoring. Using MCP for external integrations helps—your connection layer stays consistent even if you change the underlying agent framework.

Q: Do I need to use OpenAI, or can I use open-source models?

All the platforms mentioned support multiple LLM providers. You can use OpenAI, Anthropic, local models via Ollama, or open-source models from Hugging Face. However, smaller models may struggle with complex agent behaviors that require strong reasoning capabilities.

Q: How much does it actually cost to run an AI agent in production?

It varies widely with usage. A conversational agent handling 1,000 interactions per day might cost roughly $50 to $200 per month in LLM API fees, depending on the model and how long your prompts are. Complex multi-agent systems with extensive tool use can easily reach thousands of dollars per month. Start with usage monitoring and cache aggressively.
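
A back-of-the-envelope estimate is easy to script. In the sketch below, the token counts and per-million-token prices are made-up placeholders, not any provider's actual rates, so plug in your own numbers. `monthly_llm_cost` is a hypothetical helper name.

```python
def monthly_llm_cost(interactions_per_day: int,
                     input_tokens: int,
                     output_tokens: int,
                     usd_per_million_input: float,
                     usd_per_million_output: float,
                     llm_calls_per_interaction: int = 1,
                     days: int = 30) -> float:
    """Estimate monthly LLM API spend in USD from average token usage."""
    calls = interactions_per_day * days * llm_calls_per_interaction
    # Cost of one call, scaled by one million to match the price units.
    scaled_cost_per_call = (input_tokens * usd_per_million_input
                            + output_tokens * usd_per_million_output)
    return calls * scaled_cost_per_call / 1_000_000


# Placeholder assumptions: 1,500 input and 300 output tokens per call,
# priced at $1.00 and $4.00 per million tokens respectively.
single_agent = monthly_llm_cost(1_000, 1_500, 300, 1.00, 4.00)
multi_agent = monthly_llm_cost(1_000, 1_500, 300, 1.00, 4.00,
                               llm_calls_per_interaction=5)
```

With these placeholder numbers the single-agent estimate comes to $81 per month, and five LLM calls per interaction pushes it to $405. Caching repeated prompts lowers the effective number of calls, which is why it pays off quickly.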

Q: Are these platforms secure enough for enterprise use?

It depends on your deployment model. Self-hosted options like LangChain, Flowise, and Dify give you control over where your application data lives, though prompts still go to whichever LLM provider you call unless you also run the model yourself. For hosted solutions, check SOC 2 compliance and data residency options. For highly regulated industries, self-hosting with proper security hardening is generally recommended.