Something's breaking in the IT automation world. Consider the tools we've relied on for years:

- Chef for infrastructure as code

- Zapier for connecting apps

- UiPath for process automation

They still work great when humans are calling the shots. But throw an AI agent into the mix? Things fall apart fast.

It's not that these tools are bad. They're excellent at what they were designed for. The problem is simpler: they were built for people, not for machines that need to think and make decisions.

Traditional IT automation excels at running predefined workflows. Click this, then do that. If X happens, trigger Y. But AI agents don't work that way. They get natural language instructions and figure out which tools to use on the fly. They need to discover options, evaluate paths, and execute across dozens of services without a pre-programmed script.

That's where everything gets messy.

Enter the Model Context Protocol (MCP). Think of it less as an upgrade and more as a rethinking of how systems should connect when AI is involved. It's similar to how REST largely displaced SOAP: not incrementally better, just fundamentally different.

Let's dig into why this matters and what's actually changing.

Traditional IT Automation: Great for Humans, Awkward for Agents

For the last decade, IT automation has meant a few specific things:

| Category | Example Tools | What They Do | How They Connect | Who Uses Them |
|---|---|---|---|---|
| Infrastructure | Chef, Ansible | Provision and configure servers | SSH, agents, APIs | DevOps teams |
| Workflow | Zapier, Power Automate | Connect apps, automate processes | REST APIs, webhooks | Business analysts |
| Enterprise | UiPath, ServiceNow | End-to-end business automation | UI automation, native APIs | Enterprise IT |
| Job Scheduling | ActiveBatch, Airflow | Run batch jobs, ETL pipelines | Scripts, APIs | Data engineers |

All of these work brilliantly for their intended purpose. But none were designed for AI agents.

Why AI Agents Break Traditional Automation

AI agents operate differently than traditional automation. You give them a goal in plain English, and they figure out the steps. This creates three big problems with existing tools.

Problem 1: Integration Hell

Every platform needs its own authentication setup, API client, and error handling.

Want your AI agent to work with GitHub, Slack, Notion, and AWS? You're writing custom integration code for each one. Different SDKs. Different auth flows. Different rate limits and error responses.

Teams building AI coding assistants report spending 60-70% of their time on integration plumbing instead of actually building the agent logic. That's backwards.

Problem 2: Tool Overload

Traditional approaches dump everything into the AI's context window at once. The entire API spec for every connected service. Every endpoint, every parameter, every auth method.

This kills performance fast.

In one reported experiment, an LLM given access to 46 tools failed completely, even though the tool definitions fit well within its context window. When the researchers cut the set to 19 tools, it succeeded. The issue wasn't context length; it was context complexity.

Studies demonstrate that model correctness begins to fall significantly when tool counts exceed 30-40, with accuracy degrading by 30-40% as agents struggle to navigate irrelevant options.
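Size is only part of the problem, since the experiment above points at complexity. Still, the payload grows with every tool you add. The rough sketch below builds N hypothetical tool definitions in the shape MCP servers advertise (`name`, `description`, `inputSchema`). It then estimates their size with a crude 4-characters-per-token rule of thumb. The tool names and the heuristic are assumptions for illustration. Real counts depend on the model's tokenizer.

```python
import json


def make_tool(i: int) -> dict:
    """Build one hypothetical tool definition in the shape MCP servers list tools."""
    return {
        "name": f"service_action_{i}",
        "description": f"Hypothetical action number {i} exposed by some connected service.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "id": {"type": "string", "description": "Target resource ID"},
                "body": {"type": "string", "description": "Payload text"},
            },
            "required": ["id"],
        },
    }


def estimated_tokens(tool_count: int) -> int:
    """Serialize every tool definition and apply a ~4 characters/token heuristic."""
    payload = json.dumps([make_tool(i) for i in range(tool_count)])
    return len(payload) // 4


for n in (5, 19, 46):
    print(n, "tools ->", estimated_tokens(n), "tokens (rough estimate)")
```

Every one of those tokens is sent on every request when all tools are exposed at once. Every extra option is also one more thing the model has to rule out.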

Problem 3: Authentication Chaos

Traditional tools authenticate as a single user or service account. AI agents need to act on behalf of multiple users, each with different permissions across different services.

Building OAuth flows, managing token refresh, handling permission errors—it becomes a massive engineering project. And you have to do it separately for every service you integrate.
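Here is a minimal sketch of just one slice of that project: keeping a separate token for each user and service pair, and refreshing it shortly before it expires. Everything in it is hypothetical, including `PerUserTokenStore`, the `refresher` callback that would call a provider's OAuth token endpoint, and the 60-second refresh skew. It is not any particular library's API.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple


@dataclass
class Token:
    access_token: str
    refresh_token: str
    expires_at: float  # Unix timestamp


# Hypothetical refresher: (service, refresh_token) -> new Token.
# A real one would call that provider's OAuth token endpoint.
Refresher = Callable[[str, str], Token]


class PerUserTokenStore:
    """One token per (user, service) pair, refreshed on demand."""

    def __init__(self, refresher: Refresher, skew_seconds: float = 60.0):
        self._tokens: Dict[Tuple[str, str], Token] = {}
        self._refresher = refresher
        self._skew = skew_seconds  # refresh a little before the real expiry

    def save(self, user_id: str, service: str, token: Token) -> None:
        self._tokens[(user_id, service)] = token

    def access_token(self, user_id: str, service: str, now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        key = (user_id, service)
        if key not in self._tokens:
            raise PermissionError(f"{user_id} has not connected {service}")
        token = self._tokens[key]
        if token.expires_at - self._skew <= now:
            token = self._refresher(service, token.refresh_token)
            self._tokens[key] = token
        return token.access_token
```

Multiply this by consent screens, revoked grants, scope differences, and per-provider quirks for every service, and the engineering cost adds up quickly.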

Model Context Protocol: A Different Architecture

MCP started from a basic observation: AI models need a standardized way to talk to external systems.

Just like HTTP standardized web communication. Or JDBC standardized database access.

How MCP Works

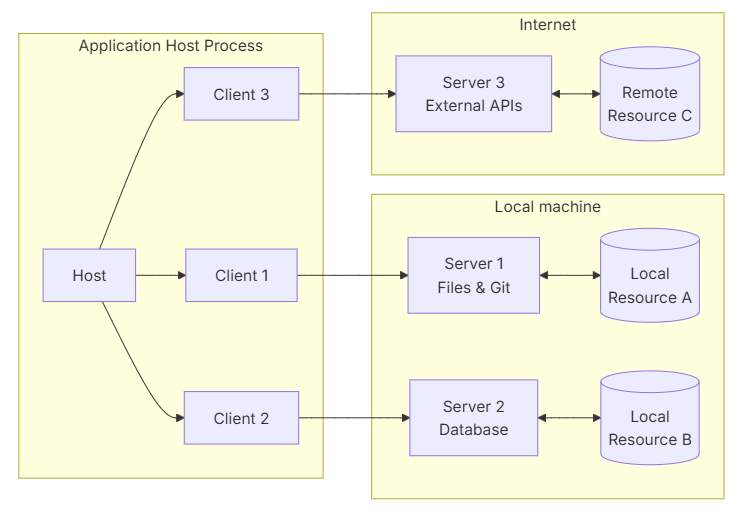

The architecture is straightforward. Client-server model built on JSON-RPC 2.0.

MCP Servers expose three things:

- Resources: Data sources (files, database records, API responses)

- Tools: Functions the AI can invoke

- Prompts: Reusable templates for common operations
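Concretely, a client reaches each of these through JSON-RPC 2.0 methods defined in the MCP specification, such as `tools/list`, `tools/call`, `resources/read`, and `prompts/get`. The sketch below only builds the request messages. The tool name, repository, resource URI, and prompt name are made up. A real session also starts with an `initialize` handshake, which is omitted here.

```python
import json
from typing import Any, Dict, Optional


def rpc_request(request_id: int, method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object; 'params' is omitted when not needed."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask a server which tools it offers.
list_tools = rpc_request(1, "tools/list")

# Invoke one tool by name with JSON arguments (tool and arguments are hypothetical).
call_tool = rpc_request(2, "tools/call", {
    "name": "create_issue",
    "arguments": {"repo": "acme/api", "title": "Flaky test in CI"},
})

# Read a resource by URI and fetch a prompt template by name (both hypothetical).
read_resource = rpc_request(3, "resources/read", {"uri": "file:///logs/deploy.log"})
get_prompt = rpc_request(4, "prompts/get", {"name": "summarize_incident", "arguments": {"id": "INC-42"}})

for msg in (list_tools, call_tool, read_resource, get_prompt):
    print(json.dumps(msg))
```

Because every server speaks this same small vocabulary, one client implementation can talk to any of them.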

MCP Clients live inside AI applications—chatbots, IDE extensions, autonomous agents. They talk to servers through a standard protocol.

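The transport layer supports both local connections (STDIO) and remote ones over HTTP. The original spec used HTTP with Server-Sent Events. The 2025-03-26 revision replaced that with Streamable HTTP, which can still use SSE to stream responses. This flexibility matters for different deployment scenarios.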
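On the STDIO transport, the client launches the server as a subprocess. They exchange JSON-RPC messages as newline-delimited JSON over stdin and stdout. The toy server below shows that framing. The `echo` tool is hypothetical. The sketch skips the `initialize` handshake, capability negotiation, and most error handling, which a production server would usually get from an official MCP SDK.

```python
import json
import sys
from typing import Any, Dict, Optional, TextIO

# One hypothetical tool, described the way a tools/list result reports it.
TOOLS = [{
    "name": "echo",
    "description": "Return the given text unchanged.",
    "inputSchema": {
        "type": "object",
        "properties": {"text": {"type": "string"}},
        "required": ["text"],
    },
}]


def _error(request_id: Any, code: int, message: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(message: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Return a JSON-RPC response, or None for notifications (messages without an id)."""
    if "id" not in message:
        return None
    request_id = message["id"]
    method = message.get("method")
    params = message.get("params", {})
    if method == "tools/list":
        return {"jsonrpc": "2.0", "id": request_id, "result": {"tools": TOOLS}}
    if method == "tools/call":
        if params.get("name") != "echo":
            return _error(request_id, -32602, f"Unknown tool: {params.get('name')}")
        text = params.get("arguments", {}).get("text", "")
        result = {"content": [{"type": "text", "text": text}], "isError": False}
        return {"jsonrpc": "2.0", "id": request_id, "result": result}
    return _error(request_id, -32601, f"Method not found: {method}")


def serve(in_stream: TextIO = sys.stdin, out_stream: TextIO = sys.stdout) -> None:
    """Read one JSON-RPC message per line; write one response per line."""
    for line in in_stream:
        if not line.strip():
            continue
        response = handle(json.loads(line))
        if response is not None:
            # json.dumps without indent keeps each message on a single line.
            out_stream.write(json.dumps(response) + "\n")
            out_stream.flush()
```

Calling `serve()` with its defaults runs the loop on the process's own stdin and stdout, which is what a client spawning the server expects. Passing other streams makes the same loop easy to test.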

Pretty simple. But the real magic happens in how AI agents discover and use tools.

Progressive Discovery: The Tool Overload Solution

One market estimate puts the MCP market at $10.3 billion in 2025, growing at a CAGR of 34.6%. Progressive discovery is one of the key innovations emerging from that ecosystem.

Instead of showing the AI every available function upfront, you reveal tools hierarchically:

- Intent Recognition: AI figures out what the user wants ("manage GitHub issues")

- Category Navigation: System shows relevant categories (Development → GitHub → Issues)

- Action Selection: Specific operations appear (create_issue, update_issue, search_issues)

- Execution: Full API details show up only when the AI commits to an action

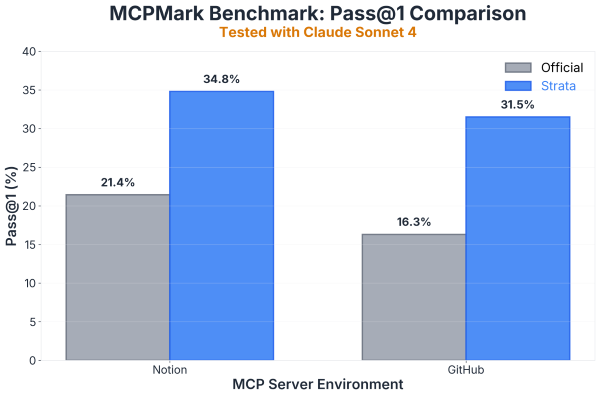
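Here is a minimal sketch of the idea, not Strata's implementation. It uses a hypothetical nested catalog (category, service, area, action). The agent sees only names at each level, and a full input schema appears only once it commits to a specific action. Intent recognition (step 1) is left to the model. All category, service, and action names here are made up.

```python
from typing import Any, Dict, List

# Hypothetical catalog: category -> service -> area -> action -> input schema.
CATALOG: Dict[str, Any] = {
    "Development": {
        "GitHub": {
            "Issues": {
                "create_issue": {"type": "object",
                                 "properties": {"repo": {"type": "string"}, "title": {"type": "string"}},
                                 "required": ["repo", "title"]},
                "update_issue": {"type": "object",
                                 "properties": {"repo": {"type": "string"}, "number": {"type": "integer"},
                                                "state": {"type": "string"}},
                                 "required": ["repo", "number"]},
                "search_issues": {"type": "object",
                                  "properties": {"query": {"type": "string"}},
                                  "required": ["query"]},
            },
        },
    },
    "Communication": {
        "Slack": {
            "Messages": {
                "send_message": {"type": "object",
                                 "properties": {"channel": {"type": "string"}, "text": {"type": "string"}},
                                 "required": ["channel", "text"]},
            },
        },
    },
}

ACTION_DEPTH = 4  # category / service / area / action


def _node(path: List[str]) -> Any:
    node: Any = CATALOG
    for part in path:
        node = node[part]
    return node


def list_options(path: List[str]) -> List[str]:
    """Steps 2-3: return only the names one level below `path`, never their schemas."""
    if len(path) >= ACTION_DEPTH:
        raise ValueError("path already names an action; use describe_action")
    return sorted(_node(path).keys())


def describe_action(path: List[str]) -> Dict[str, Any]:
    """Step 4: return the full input schema once the agent commits to an action."""
    if len(path) != ACTION_DEPTH:
        raise ValueError("expected category/service/area/action")
    return _node(path)
```

An agent handling "manage GitHub issues" might call `list_options([])`, then `list_options(["Development", "GitHub", "Issues"])`, and finally `describe_action` for the one action it picks. It sees a handful of names per step instead of every schema at once.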

This isn't theoretical. Klavis AI's Strata implemented progressive discovery and benchmarked it against standard MCP servers. Klavis reported success rates 15.2% higher than GitHub's official MCP server and 13.4% higher than Notion's official MCP server. On complex multi-app workflows, success rates exceeded 83%.

Those numbers matter when you're shipping production AI agents.

Multi-Tenancy That Actually Works

Unlike traditional automation built for single users, production MCP servers handle authentication for multiple users simultaneously:

```python
from klavis import Klavis

klavis = Klavis(api_key="your-api-key")

# Create isolated MCP server instance for specific user
user_server = klavis.mcp_server.create_strata_server(
    user_id="developer_123",
    servers=["github", "linear", "slack"],
)

# User's OAuth tokens are managed automatically
# AI agent inherits user's permissions across all connected services
```

When your AI agent creates a GitHub issue or sends a Slack message, it does so with the right user's credentials and permissions. There are no shared service accounts, and far less room for privilege escalation.

This is how Klavis AI handles multi-tenancy for their MCP servers, making it practical to deploy AI agents in real organizations where different people have different access levels.

Real-World Example: Before and After MCP

Let's look at an actual scenario. Engineering operations automation.

The Old Way

A company used Zapier to connect Jira, GitHub, and Slack for release management:

- Jira webhook triggers → Zapier creates GitHub branch

- GitHub PR merged → Zapier updates Jira ticket

- Status change → Zapier posts to Slack

They needed 12 separate Zaps with complex filtering logic. Every time an API changed, they updated multiple workflows. Maintenance was constant.

The MCP Way

An AI agent with MCP servers for Jira, GitHub, and Slack gets a natural language instruction:

"Create a new feature branch for JIRA-123, open a PR with the changes, and notify the #engineering channel when it's ready for review."

The agent handles it:

- Queries Jira MCP server for ticket details

- Calls GitHub MCP server to create branch and PR

- Invokes Slack MCP server to send notification
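In a real agent, the model decides each call from the tool descriptions. The hard-coded sequence below only shows the shape of the tool calls it might emit for this instruction. The server names, tool names, repository, and the `call_tool` helper are all hypothetical. In-memory fakes stand in for the three MCP servers.

```python
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical stand-ins for the Jira, GitHub, and Slack MCP servers.
FAKE_SERVERS: Dict[str, Dict[str, Callable[..., Dict[str, Any]]]] = {
    "jira": {"get_issue": lambda key: {"key": key, "summary": "Add CSV export"}},
    "github": {
        "create_branch": lambda repo, name: {"branch": name},
        "create_pull_request": lambda repo, head, title: {
            "number": 101, "url": f"https://github.com/{repo}/pull/101"},
    },
    "slack": {"post_message": lambda channel, text: {"ok": True, "channel": channel}},
}

call_log: List[Tuple[str, str]] = []


def call_tool(server: str, tool: str, **arguments: Any) -> Dict[str, Any]:
    """Route one tool call to the named (fake) server and record it."""
    call_log.append((server, tool))
    return FAKE_SERVERS[server][tool](**arguments)


# 1. Jira: fetch ticket details.
ticket = call_tool("jira", "get_issue", key="JIRA-123")

# 2. GitHub: create the branch and open the PR.
branch = f"feature/{ticket['key'].lower()}"
call_tool("github", "create_branch", repo="acme/app", name=branch)
pr = call_tool("github", "create_pull_request", repo="acme/app", head=branch,
               title=f"{ticket['key']}: {ticket['summary']}")

# 3. Slack: notify the channel.
call_tool("slack", "post_message", channel="#engineering", text=f"Ready for review: {pr['url']}")
```

Each step uses the output of the previous one (the ticket summary feeds the PR title, and the PR URL feeds the Slack message). A fixed Zap chain has to wire that up by hand.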

Authentication, error handling, retry logic—all handled by the MCP layer. When GitHub's API changes, only the MCP server needs updating. Not the agent logic.
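The retry piece of that layer might look something like this minimal sketch: exponential backoff with "full jitter" (a random wait below a doubling ceiling). `TransientError` and the default delays are assumptions for illustration, not the behavior of any particular MCP server.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (timeouts, HTTP 429 or 503)."""


def with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying TransientError with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # The ceiling doubles each time (0.5s, 1s, 2s, ...) up to max_delay;
            # sleeping a random amount below it spreads out retries from many clients.
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```

Because this wrapper lives in the server, every agent that calls the tool gets the same retry behavior without reimplementing it.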

The Comparison

| Dimension | Traditional Automation | MCP-Based Agent |
|---|---|---|
| Setup Time | 2-4 weeks per integration | 2-10 minutes per service |
| Authentication | Manual OAuth implementation | Built-in OAuth support |
| Maintenance | Update each workflow when APIs change | Update MCP server only |
| Flexibility | Fixed workflows need reconfiguration | Adapts to natural language |
| Error Handling | Manual retry and fallback logic | Automated exponential backoff |
| Multi-User Support | Complex token management | Native multi-tenancy |
| Typical Tool Count | 3-8 connected services | 20-50+ available services |

Efficiency Numbers

Organizations implementing MCP-based automation report:

- 60-70% reduction in time spent on integration code

- 80% fewer integration-related support tickets

- Time to add new service: From 2-3 weeks down to 30 minutes

- 30% reduction in context window usage through progressive discovery

Over 70% of companies implementing standardized AI infrastructure have adopted MCP. Organizations report an average 25% performance increase in their AI application capabilities.

When to Use Which Approach

Not everything needs to be AI-powered. Here's a practical decision framework:

Stick with Traditional Automation When:

- Workflows are completely deterministic (no decision-making needed)

- You're connecting fewer than 5 services

- Human operators manually trigger all automations

- You're in heavily regulated environments requiring complete explainability

Move to MCP-Based Automation When:

- Building AI agents that decide which tools to use

- Connecting more than 5-10 external services

- Need authentication on behalf of multiple end users

- Automation logic changes frequently based on context

- Want to reduce integration maintenance overhead
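If you want to apply the checklist consistently across many workflows, one possible encoding of most of it looks like this. The precedence (explainability requirements win outright) and the default threshold of 10 services, picked from the "5-10" range above, are assumptions. It is a reading of the framework, not a rule from it.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    deterministic: bool              # no decision-making needed at runtime
    service_count: int               # number of external services involved
    human_triggered: bool            # operators start every run by hand
    needs_full_explainability: bool  # e.g. heavily regulated environments
    agent_chooses_tools: bool        # an AI agent decides which tools to call
    acts_for_many_users: bool        # needs auth on behalf of multiple end users
    logic_changes_often: bool        # automation logic shifts with context


def recommend(w: Workload, mcp_threshold: int = 10) -> str:
    """One possible reading of the checklist; thresholds and precedence are assumptions."""
    # Assumed: workflows that must be fully explainable stay traditional.
    if w.needs_full_explainability:
        return "traditional"
    if w.agent_chooses_tools or w.acts_for_many_users or w.logic_changes_often:
        return "mcp"
    if w.service_count > mcp_threshold:
        return "mcp"
    if w.deterministic and w.human_triggered and w.service_count < 5:
        return "traditional"
    return "either; many teams run both"
```

Anything that lands in the last branch is a judgment call, which matches the "run both" advice.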

Many teams run both. Traditional automation for infrastructure provisioning and job scheduling. MCP for AI agent functionality and dynamic workflows.

It's not either-or. It's picking the right tool for the job.

Getting Started with MCP

Here's a minimal example of connecting an AI agent to multiple services:

```python
from anthropic import Anthropic
from klavis import Klavis

# Initialize MCP infrastructure and the model client
klavis = Klavis(api_key="your-api-key")
client = Anthropic(api_key="your-anthropic-key")

# Create MCP server with multiple integrations for one user
mcp = klavis.mcp_server.create_strata_server(
    user_id="engineer_001",
    servers=["github", "linear", "slack", "notion"],
)

# The task for the agent, in plain language
messages = [{
    "role": "user",
    "content": "When PR #247 is merged, create a Linear ticket for QA testing and document the changes in Notion",
}]

response = client.messages.create(
    model="claude-sonnet-4-5",
    max_tokens=1024,        # required by the Messages API
    tools=mcp.get_tools(),  # assumed helper returning the server's tools; progressive discovery keeps this list small
    messages=messages,
)

# The response may contain tool_use blocks. An agent loop executes them
# through the MCP server (with the user's authentication), sends the
# results back, and repeats until the model stops requesting tools.
```
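That loop is worth seeing, because `messages.create` returns after one model turn. The sketch below handles one step: it runs every `tool_use` block and builds the `tool_result` blocks for the next user message. It works on plain dicts. The SDK returns typed objects, but its response types are Pydantic models, so `block.model_dump()` produces this shape. The `execute` callback is hypothetical and stands in for forwarding the call to the MCP server.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical executor: (tool name, tool input) -> result; would forward to the MCP server.
Executor = Callable[[str, Dict[str, Any]], Any]


def tool_results(content_blocks: List[Dict[str, Any]], execute: Executor) -> List[Dict[str, Any]]:
    """Run every tool_use block and build tool_result blocks for the next user turn."""
    results = []
    for block in content_blocks:
        if block.get("type") != "tool_use":
            continue  # skip text and other non-tool blocks
        try:
            output = execute(block["name"], block["input"])
            results.append({"type": "tool_result", "tool_use_id": block["id"],
                            "content": json.dumps(output)})
        except Exception as exc:  # report the failure to the model instead of crashing
            results.append({"type": "tool_result", "tool_use_id": block["id"],
                            "content": str(exc), "is_error": True})
    return results
```

In the loop, append `{"role": "assistant", "content": response.content}` and then `{"role": "user", "content": tool_results(...)}` to `messages`. Call `messages.create` again, and stop once `response.stop_reason` is no longer `"tool_use"`.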

Over 50 production-ready MCP servers are available as open-source implementations. GitHub, GitLab, Linear, Jira, Slack, Discord, Gmail, Notion, Google Drive, and more. Most take under 5 minutes to integrate.

Common Questions

Can MCP replace traditional IT automation tools completely?

No, and it shouldn't. Ansible and Chef are still the right choice for infrastructure provisioning where workflows are deterministic. MCP shines when AI agents need to dynamically choose tools based on context. Most teams run both—traditional automation for core infrastructure, MCP for AI agent functionality.

What happens when a service changes its API?

MCP servers abstract the underlying API. When GitHub updates their REST API, only the MCP server implementation needs updating. All AI agents using that server automatically get the update without code changes. Same concept as database drivers handling database engine updates.

Isn't running AI agents with multiple MCP connections expensive?

Progressive discovery cuts costs significantly. Instead of sending documentation for 50 tools on every request, agents only get relevant tool subsets. Teams report 30-50% reductions in token usage compared to dumping all tools at once. Hosted MCP services typically charge per API call executed, not per tool available.

Is this secure enough for enterprises?

MCP supports OAuth 2.0 authentication, user-level permission enforcement, and audit logging of all tool invocations. You can also run MCP servers within your own infrastructure for sensitive operations. The protocol is transport-agnostic, so you can implement it over encrypted channels. Block rolled out MCP company-wide with enterprise-grade security patterns.
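As an illustration of the audit-logging point, a server could wrap each tool so that every invocation records who called it, with what arguments, and how it ended. This is a minimal sketch. `audited`, `AUDIT_LOG`, and the `send_message` tool are hypothetical, and an in-memory list stands in for a real append-only audit store.

```python
import functools
import json
import time
from typing import Any, Callable, Dict, List

AUDIT_LOG: List[str] = []  # stand-in for an append-only audit store


def audited(tool_name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Record user, tool, arguments, and outcome for every call to the wrapped tool."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
        @functools.wraps(fn)
        def wrapper(user_id: str, **arguments: Any) -> Any:
            entry: Dict[str, Any] = {"ts": time.time(), "user": user_id,
                                     "tool": tool_name, "arguments": arguments}
            try:
                result = fn(user_id, **arguments)
                entry["status"] = "ok"
                return result
            except Exception as exc:
                entry["status"] = f"error: {exc}"
                raise
            finally:
                # Written on success and failure alike.
                AUDIT_LOG.append(json.dumps(entry, default=str))
        return wrapper
    return decorator


@audited("send_message")
def send_message(user_id: str, channel: str, text: str) -> Dict[str, Any]:
    # Hypothetical tool body; a real server would call Slack with this user's token.
    return {"ok": True, "channel": channel}
```

In practice you would redact sensitive arguments before logging them and ship entries to storage the agent itself cannot modify.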

Want to experiment with MCP-based automation? Talk to Klavis AI founders.