For years, platforms like Pipedream and Zapier have been the backbone of automation, stitching together disparate APIs with linear, event-driven workflows. However, the integration landscape shifted dramatically yesterday with the announcement that Workday has signed a definitive agreement to acquire Pipedream.

While this acquisition validates the critical importance of programmable infrastructure, it inevitably raises questions for the broader developer community regarding future roadmap priorities, ecosystem openness, and the longevity of developer-centric tiers. As Pipedream moves toward the enterprise ERP ecosystem, developers building the next generation of software are looking for independent, specialized alternatives.

Simultaneously, the rise of agentic AI has exposed a technical limitation in traditional iPaaS (Integration Platform as a Service) models: context. AI agents don't just need to trigger a linear sequence of events; they need to understand the tools available to them, query data dynamically, and reason about inputs and outputs in real time.

This is where the Model Context Protocol (MCP) shifts the paradigm. While Pipedream has released an MCP adapter for its workflows, developers building robust, scalable AI applications are increasingly turning to native MCP servers. These platforms are designed specifically for Large Language Model (LLM) consumption, optimizing for token usage, security, and "agentic" reasoning rather than just static automation.

This guide explores the top Pipedream MCP alternatives, focusing on platforms that provide the infrastructure, security, and context optimization required for production-grade AI agents in this new era.

1. Klavis AI

Best For: Enterprise-grade infrastructure, token optimization, and complex agentic workflows.

Klavis AI stands out as the premier infrastructure platform for deploying and managing MCP servers. Unlike traditional integration platforms that simply wrap APIs, Klavis is engineered to solve the "context window explosion" problem that plagues agentic development.

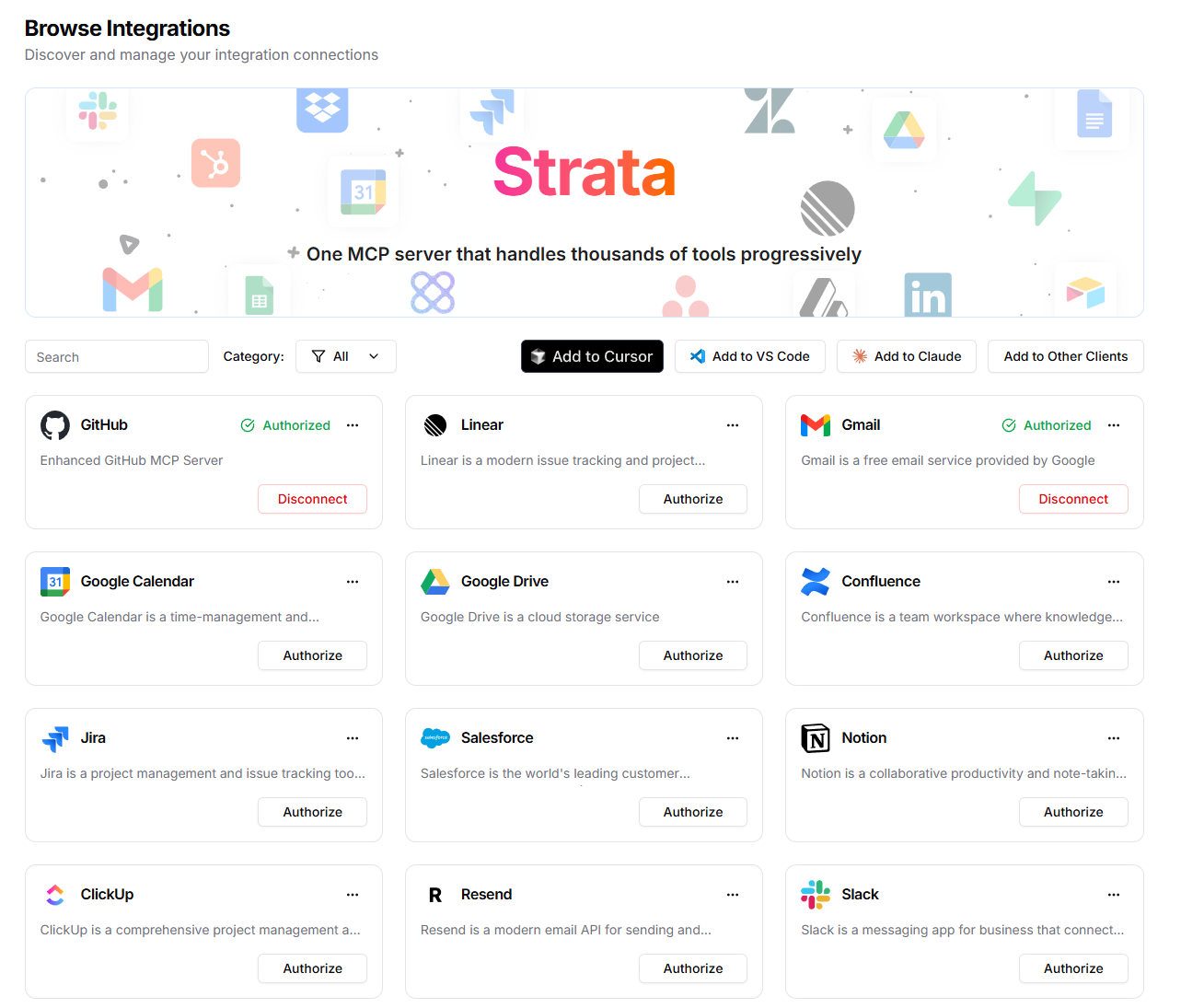

Through its flagship product, Strata, Klavis introduces a concept called Progressive Discovery. Instead of dumping thousands of tool definitions into an LLM's context window (which increases both cost and the likelihood of hallucinations), Strata intelligently guides the agent from high-level intent to specific actions.
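To make the idea concrete, here is a minimal Python sketch of progressive discovery. It is not Strata's actual API: the `CATALOG` contents, the helper names (`list_categories`, `list_actions`, `get_schema`), and the use of serialized character count as a rough proxy for token cost are all assumptions made for illustration.

```python
import json

# Hypothetical tool catalog: category -> action -> simplified definition.
CATALOG = {
    "github": {
        "create_issue": {
            "description": "Open a new issue",
            "parameters": {"repo": "string", "title": "string", "body": "string"},
        },
        "list_pull_requests": {
            "description": "List pull requests for a repository",
            "parameters": {"repo": "string", "state": "string"},
        },
    },
    "notion": {
        "create_page": {
            "description": "Create a page under a parent",
            "parameters": {"parent_id": "string", "title": "string"},
        },
        "search": {
            "description": "Search pages and databases",
            "parameters": {"query": "string"},
        },
    },
}


def full_dump(catalog):
    """Naive approach: every tool definition goes into the prompt at once."""
    return json.dumps(catalog)


def list_categories(catalog):
    """Step 1: the agent only sees the high-level categories."""
    return sorted(catalog)


def list_actions(catalog, category):
    """Step 2: the agent sees action names for the category it picked."""
    return sorted(catalog[category])


def get_schema(catalog, category, action):
    """Step 3: the full definition is revealed for a single action."""
    return catalog[category][action]


def progressive_context(catalog, category, action):
    """Everything the agent has seen after drilling down to one action."""
    steps = [
        list_categories(catalog),
        list_actions(catalog, category),
        get_schema(catalog, category, action),
    ]
    return json.dumps(steps)


naive = full_dump(CATALOG)
staged = progressive_context(CATALOG, "github", "create_issue")
print(f"naive context: {len(naive)} chars, staged context: {len(staged)} chars")
```

With only four tools the saving is modest, but the staged context grows with the depth of the drill-down rather than with the size of the whole catalog, which is the property that matters once a catalog holds thousands of definitions.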

Key Advantages

- Hybrid Deployment (Hosted & On-Prem): Klavis AI offers unmatched flexibility by providing both fully managed hosted solutions and on-premise deployment options. Whether you need a zero-setup cloud endpoint for rapid integration or a secure, self-hosted instance for air-gapped enterprise environments, Klavis ensures seamless connectivity with any MCP client (including Claude Desktop, Cursor, and custom agents).

- Context Efficiency & Progressive Discovery: By revealing API details only when needed, Klavis significantly reduces token usage. This "just-in-time" context delivery prevents the agent from being overwhelmed by irrelevant tool schemas.

- Superior Success Rates: Benchmarks are critical in AI engineering. Klavis AI has demonstrated a 15.2% higher Pass@1 rate than the official GitHub MCP server and a 13.4% higher Pass@1 rate than the Notion MCP server.

- Enterprise Security: Security is often the biggest blocker for AI adoption. Klavis provides SOC 2 and GDPR compliance out of the box, along with built-in Role-Based Access Control (RBAC). This ensures that an AI agent can only access the data and tools it is explicitly authorized to use.

- Managed Authentication: Developers often struggle with OAuth token management for agents. Klavis includes built-in OAuth support, handling the complex "dance" of refreshing tokens and managing secure credentials so your agent doesn't have to.
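The RBAC point above boils down to checking every tool call against an allowlist before it runs. The following Python sketch shows that pattern in its simplest form; the role names, tool names, and the `call_tool` wrapper are hypothetical and do not reflect how Klavis implements access control.

```python
class ToolAccessDenied(Exception):
    """Raised when an agent requests a tool outside its role's allowlist."""


# Hypothetical mapping of agent roles to the tools they may call.
ROLE_PERMISSIONS = {
    "support_agent": {"crm.read_contact", "email.send"},
    "analyst_agent": {"crm.read_contact", "db.run_readonly_query"},
}


def is_allowed(role, tool_name):
    """Unknown roles get an empty allowlist, so the default is deny."""
    return tool_name in ROLE_PERMISSIONS.get(role, set())


def call_tool(role, tool_name, handler, **arguments):
    """Run the handler only if the role is explicitly authorized for the tool."""
    if not is_allowed(role, tool_name):
        raise ToolAccessDenied(f"role {role!r} may not call {tool_name!r}")
    return handler(**arguments)


def read_contact(contact_id):
    # Stand-in for a real CRM lookup.
    return {"id": contact_id, "name": "Ada"}


print(call_tool("support_agent", "crm.read_contact", read_contact, contact_id=7))
print(is_allowed("support_agent", "db.run_readonly_query"))
```

Placing the check in the server-side wrapper, rather than in the prompt, means a misbehaving or manipulated agent still cannot reach tools outside its role.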

Verdict: For developers building agents that need to reliably navigate complex tools without breaking the bank (or the context window), Klavis AI is the superior choice.

Website: https://klavis.ai/

2. Smithery.ai

Best For: Discovery and rapid deployment of community-built MCP servers.

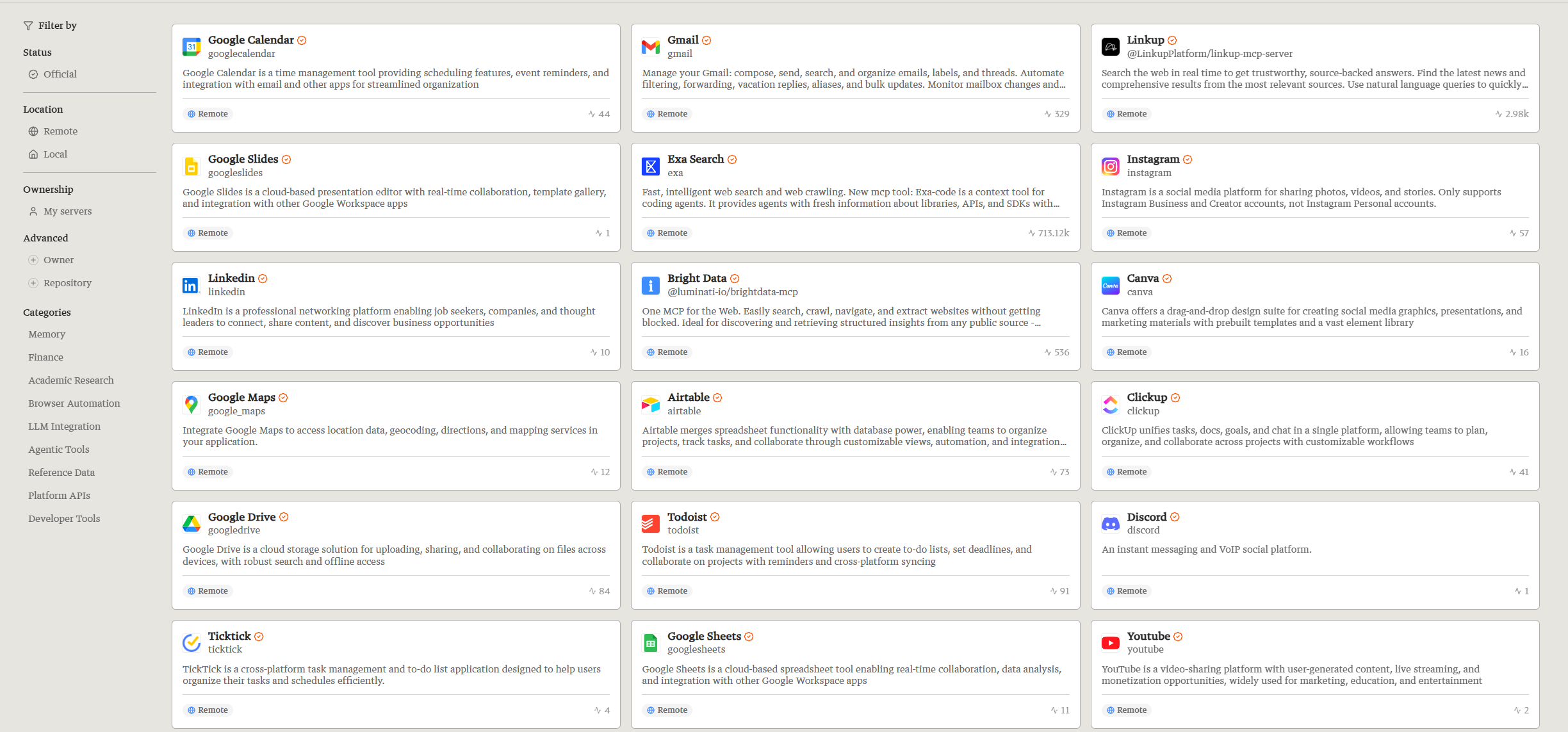

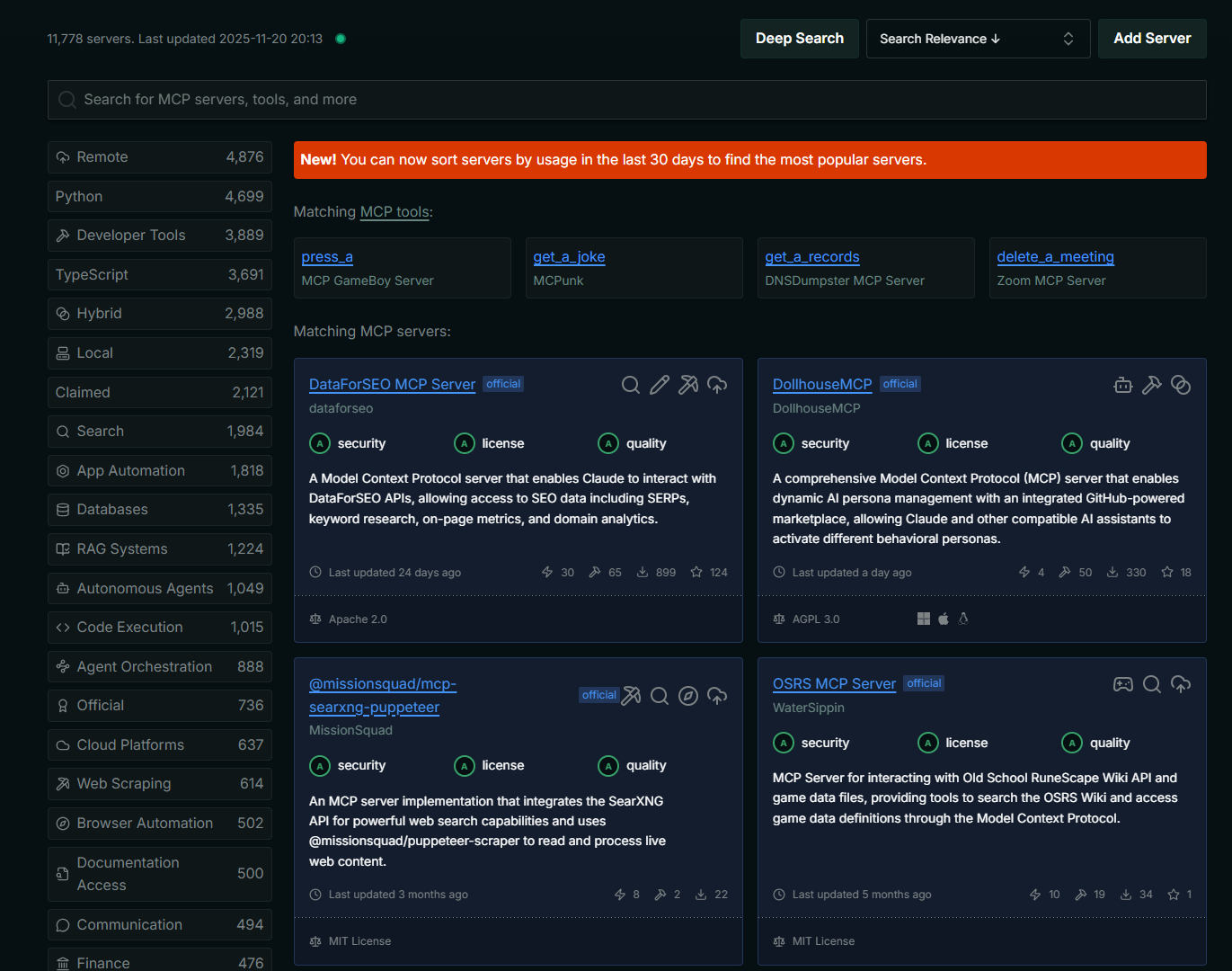

Smithery positions itself as the "App Store" for the Model Context Protocol. While Pipedream focuses on connecting APIs via workflows, Smithery focuses on a registry of ready-to-use MCP servers that can be deployed with a single command.

Key Advantages

- Massive Registry: Smithery hosts a vast collection of pre-built MCP servers for popular tools (Linear, Stripe, Postgres), allowing developers to "plug and play" capabilities into their agents like Claude or customized LLMs.

- Flexible Deployment: It supports both local hosting (great for testing with tools like Cursor) and remote hosting, offering flexibility depending on your infrastructure needs.

- Community Driven: As a hub for open-source contributions, Smithery often has the newest tool integrations available faster than proprietary platforms.

Verdict: A strong alternative for developers who want to quickly experiment with a wide variety of tools without building custom integrations from scratch.

Website: https://smithery.ai/

3. Composio

Best For: Broad integration libraries and managed authentication (Direct Pipedream Competitor).

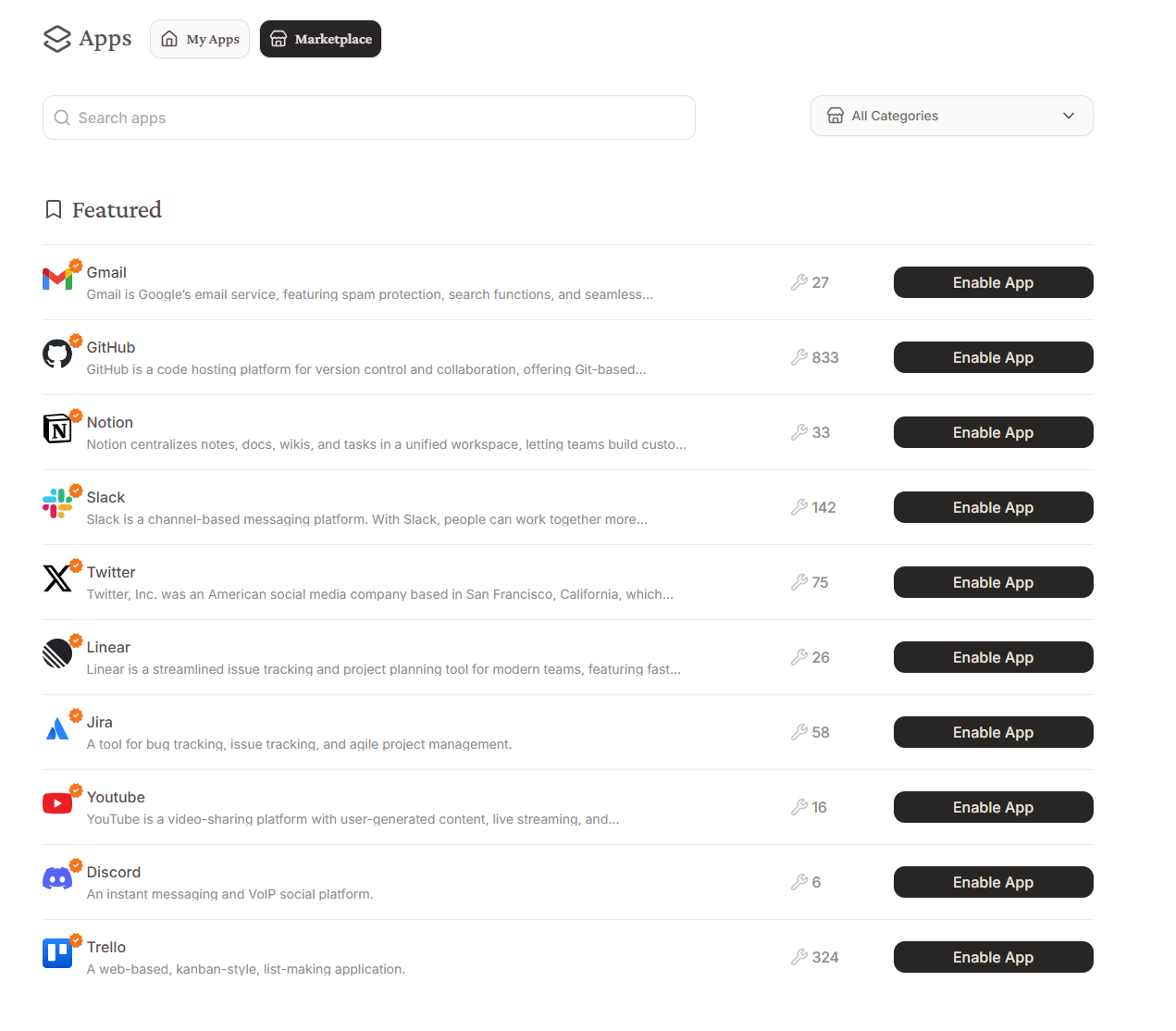

Composio is perhaps the closest direct functional alternative to Pipedream for the AI era. It focuses heavily on the "integration" layer, providing a massive library of connectors that are optimized for AI agents rather than static scripts.

Key Advantages

- Extensive Tooling: Composio boasts support for more than 100 tools, ranging from CRM systems like Salesforce to development tools like GitHub.

- Managed Auth (Auth0 for Agents): Similar to Klavis, Composio abstracts away the authentication layer. It handles the OAuth flows for individual users, allowing your agent to perform actions on behalf of a specific user securely.

- Agentic Focus: Unlike Pipedream's linear "Trigger -> Action" model, Composio exposes tools as functions that an LLM can reason about and call iteratively.
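The difference between a fixed "Trigger -> Action" pipeline and iterative tool calling is easiest to see in a loop. The sketch below is a generic illustration, not Composio's SDK: `scripted_model` is a deterministic stand-in for an LLM, and the tool functions and message format are assumptions.

```python
def get_user(email):
    # Stand-in for a real user lookup.
    return {"email": email, "plan": "pro"}


def create_ticket(email, subject):
    # Stand-in for a real ticketing API call.
    return {"ticket_id": 101, "email": email, "subject": subject}


# Tools are exposed to the model as named functions.
TOOLS = {"get_user": get_user, "create_ticket": create_ticket}


def scripted_model(history):
    """Stand-in for an LLM: chooses the next step from the results seen so far."""
    results = [m for m in history if m["role"] == "tool"]
    if len(results) == 0:
        return {"tool": "get_user", "arguments": {"email": "ada@example.com"}}
    if len(results) == 1:
        plan = results[0]["content"]["plan"]
        return {
            "tool": "create_ticket",
            "arguments": {
                "email": "ada@example.com",
                "subject": f"Billing question ({plan} plan)",
            },
        }
    return {"final": f"Opened ticket {results[1]['content']['ticket_id']}"}


def run_agent(model, tools, user_message, max_steps=5):
    """Call the model repeatedly, executing each requested tool until it finishes."""
    history = [{"role": "user", "content": user_message}]
    for _ in range(max_steps):
        decision = model(history)
        if "final" in decision:
            return decision["final"], history
        result = tools[decision["tool"]](**decision["arguments"])
        history.append({"role": "tool", "name": decision["tool"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")


answer, history = run_agent(scripted_model, TOOLS, "I have a billing question")
print(answer)
```

Note that the second call depends on the output of the first (the ticket subject includes the plan returned by `get_user`), which is exactly what a static workflow cannot decide at design time. The `max_steps` cap is a common safeguard against a model that never produces a final answer.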

Verdict: If your primary pain point is simply "I need my agent to connect to X, Y, and Z immediately," Composio provides a comprehensive catalog similar to Pipedream but formatted for LLMs.

Website: https://composio.dev/

4. Glama

Best For: Documentation-first integration and client-side tooling.

Glama takes a unique approach by focusing on the interface between the developer's documentation and the AI's understanding. It is often used to turn technical documentation into actionable MCP servers.

Key Advantages

- Documentation as Code: Glama excels at parsing documentation and structured data to create MCP-compliant interfaces. This is particularly useful for internal APIs that may not have public integration libraries.

- Client Ecosystem: Glama provides a robust client interface that helps developers debug and visualize how their agents are interacting with MCP servers.

- Sandboxed Execution: It offers secure environments for testing tool use, ensuring that an agent doesn't accidentally execute destructive commands during the development phase.
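As a rough illustration of the "Documentation as Code" idea, the Python sketch below converts a simplified, OpenAPI-style description of an internal API into MCP-style tool definitions (`name`, `description`, `inputSchema`). This is not Glama's implementation; the `API_DOC` structure and the `doc_to_tools` helper are assumptions, and a real converter would need to handle request bodies, authentication, and nested schemas.

```python
# Hypothetical, heavily simplified OpenAPI-style document for an internal API.
API_DOC = {
    "paths": {
        "/invoices/{invoice_id}": {
            "get": {
                "operationId": "get_invoice",
                "summary": "Fetch a single invoice",
                "parameters": [
                    {"name": "invoice_id", "schema": {"type": "string"}, "required": True}
                ],
            }
        },
        "/invoices": {
            "get": {
                "operationId": "list_invoices",
                "summary": "List invoices",
                "parameters": [
                    {"name": "status", "schema": {"type": "string"}, "required": False}
                ],
            }
        },
    }
}


def doc_to_tools(doc):
    """Turn each documented operation into a tool definition with a JSON Schema input."""
    tools = []
    for path, methods in doc["paths"].items():
        for method, operation in methods.items():
            params = operation.get("parameters", [])
            tools.append(
                {
                    "name": operation["operationId"],
                    "description": f"{operation['summary']} ({method.upper()} {path})",
                    "inputSchema": {
                        "type": "object",
                        "properties": {p["name"]: p["schema"] for p in params},
                        "required": [p["name"] for p in params if p.get("required")],
                    },
                }
            )
    return tools


tools = doc_to_tools(API_DOC)
for tool in tools:
    print(tool["name"], "->", tool["description"])
```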

Verdict: Ideal for teams that have custom internal APIs and comprehensive documentation that they want to expose to their AI workforce rapidly.

Website: https://glama.ai/

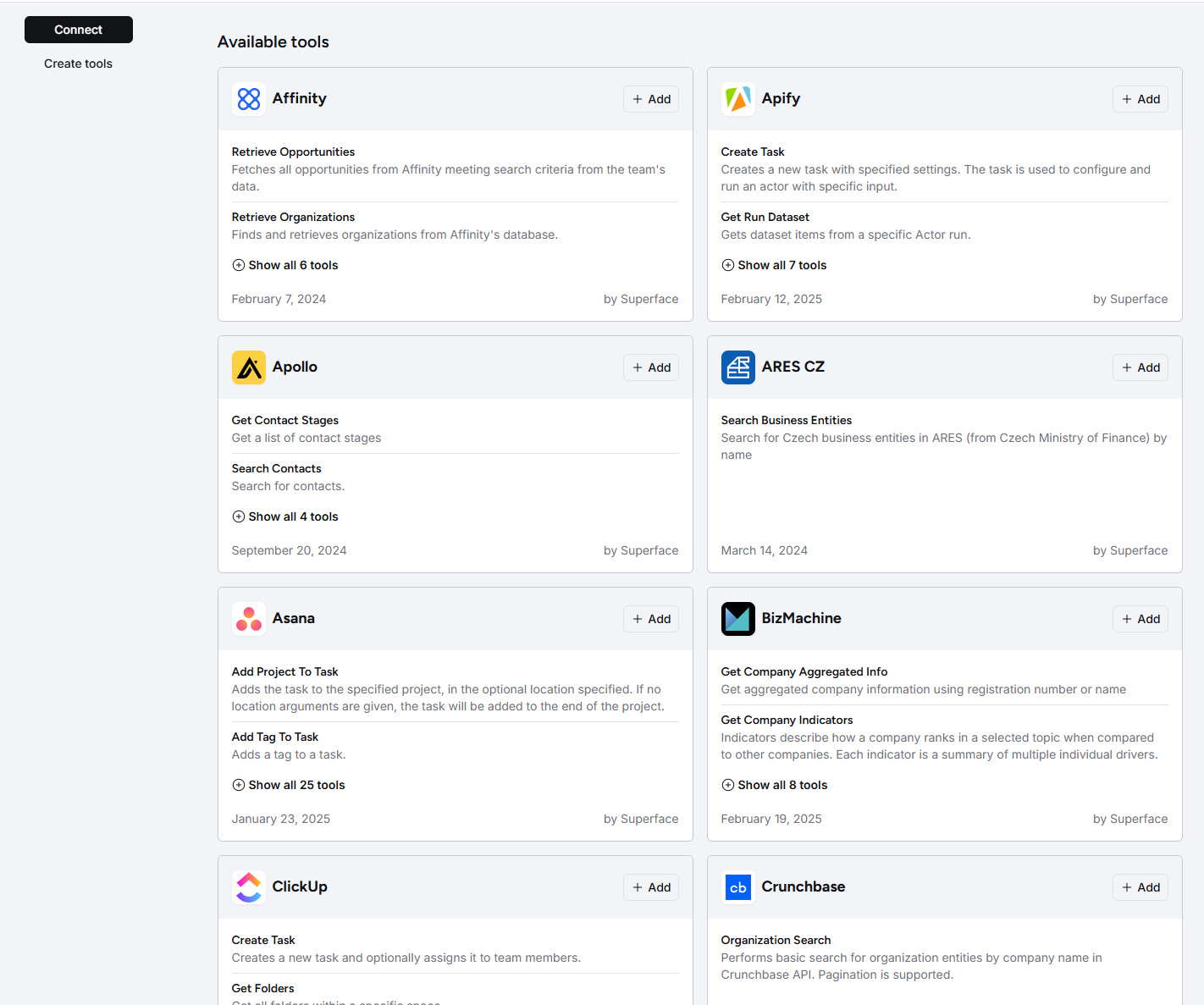

5. Superface

Best For: Resilient, self-healing integrations.

Superface predates the MCP hype but has aligned strongly with the protocol's goals. Its "One SDK" approach is designed to be resilient: if an underlying API changes, Superface aims to handle the adaptation without breaking the agent's workflow.

Key Advantages

- Decoupled Integrations: Superface separates the intent (what the agent wants to do) from the implementation (the specific API call). This abstraction layer makes agents more robust to API updates.

- Composer (AI-Driven Integration): Superface uses AI to automatically map intents to API calls, speeding up the process of creating new MCP capabilities.
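The intent/implementation split described above can be sketched in a few lines of Python. This is a generic illustration of the pattern rather than Superface's SDK; the `send_message` intent, the two provider functions, and the endpoint paths are all hypothetical.

```python
from typing import Callable, Dict


def send_via_provider_v1(recipient: str, text: str) -> dict:
    """Original API shape (hypothetical)."""
    return {"endpoint": "/v1/messages", "payload": {"to": recipient, "body": text}}


def send_via_provider_v2(recipient: str, text: str) -> dict:
    """The provider later changes its endpoint and payload layout (hypothetical)."""
    return {
        "endpoint": "/v2/messages",
        "payload": {"recipient": recipient, "content": {"text": text}},
    }


# The agent only knows intent names; the mapping to API calls lives here.
IMPLEMENTATIONS: Dict[str, Callable[..., dict]] = {"send_message": send_via_provider_v1}


def perform(intent: str, **inputs) -> dict:
    """Resolve an intent to whichever implementation is currently registered."""
    return IMPLEMENTATIONS[intent](**inputs)


before = perform("send_message", recipient="ada", text="hi")

# The API changes: only the mapping is updated, the agent-facing call is untouched.
IMPLEMENTATIONS["send_message"] = send_via_provider_v2
after = perform("send_message", recipient="ada", text="hi")

print(before["endpoint"], "->", after["endpoint"])
```

The agent-facing call `perform("send_message", ...)` is identical before and after the change, which is the robustness the abstraction layer is meant to buy.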

Verdict: A smart choice for mission-critical agents where API stability and maintenance overhead are major concerns.

Website: https://superface.ai/

FAQs

Does Pipedream support MCP?

Yes, Pipedream has released an MCP adapter that allows you to expose Pipedream workflows as tools to an LLM. However, dedicated MCP platforms like Klavis AI or Smithery are often preferred for production agents because they offer better latency, token optimization (context management), and security controls specifically designed for the unpredictable nature of LLM tool use.

Is the Model Context Protocol limited to specific AI models like Claude?

No, MCP is an open standard that is completely model-agnostic. While it was initially championed by Anthropic, it works with any Large Language Model (including OpenAI's GPT-4, Meta's Llama, or Mistral) as long as the "client" application (the software hosting the model) implements the protocol. This allows developers to build a single integration (the MCP server) that works across many different AI tools and agents.
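Model-agnosticism follows from the wire format: MCP clients and servers exchange JSON-RPC 2.0 messages such as `tools/list` and `tools/call`, and nothing in those messages names a model. The Python sketch below handles both methods in-process. It is deliberately incomplete (no transport, no `initialize` handshake), and the `get_weather` tool and its canned reply are assumptions for illustration.

```python
import json

# One hypothetical tool, described with a JSON Schema for its input.
TOOLS = [
    {
        "name": "get_weather",
        "description": "Return a canned forecast for a city",
        "inputSchema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
]


def handle(raw_request: str) -> str:
    """Answer a JSON-RPC 2.0 request for the two tool-related MCP methods."""
    request = json.loads(raw_request)
    if request["method"] == "tools/list":
        result = {"tools": TOOLS}
    elif request["method"] == "tools/call":
        city = request["params"]["arguments"]["city"]
        result = {"content": [{"type": "text", "text": f"Sunny in {city}"}]}
    else:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


list_request = json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call_request = json.dumps(
    {
        "jsonrpc": "2.0",
        "id": 2,
        "method": "tools/call",
        "params": {"name": "get_weather", "arguments": {"city": "Lisbon"}},
    }
)

print(handle(list_request))
print(handle(call_request))
```

Any client that can send these two requests can use the server, regardless of which model sits behind it.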

Is SOC 2 compliance necessary for an MCP server?

If your AI agent interacts with customer data (CRMs, databases, emails), security is non-negotiable. An MCP server acts as a gateway to your infrastructure. Using a compliant provider like Klavis AI ensures that this gateway meets enterprise standards for data privacy and security controls, which is often a requirement for deploying agents in corporate environments.

How does on-premise MCP deployment benefit my workflow?

Deploying an MCP server on-premise (a feature supported by Klavis AI) allows you to keep sensitive data entirely within your private infrastructure. This is crucial for industries like finance or healthcare where regulatory compliance prohibits data from transiting through public cloud servers, even for simple tool calls.