Here's a problem every AI engineer runs into: you've built a smart agent that can analyze problems and suggest solutions, but when it comes to actually doing something useful—like posting updates in Slack or checking message history—you're stuck writing integration code, handling OAuth, and debugging API quirks.

The Model Context Protocol solves this. Instead of building Slack integration from scratch, you connect your AI agent to an MCP server that handles all the messy parts. Your agent gets access to 18+ Slack operations through a standardized interface, and you skip weeks of integration work.

Let's walk through exactly how to set this up, then look at three real-world use cases where this actually matters.

Getting Your AI Agent Connected to Slack

There are two ways to get started: through the web dashboard (fastest) or via API (more control). I'll show you both.

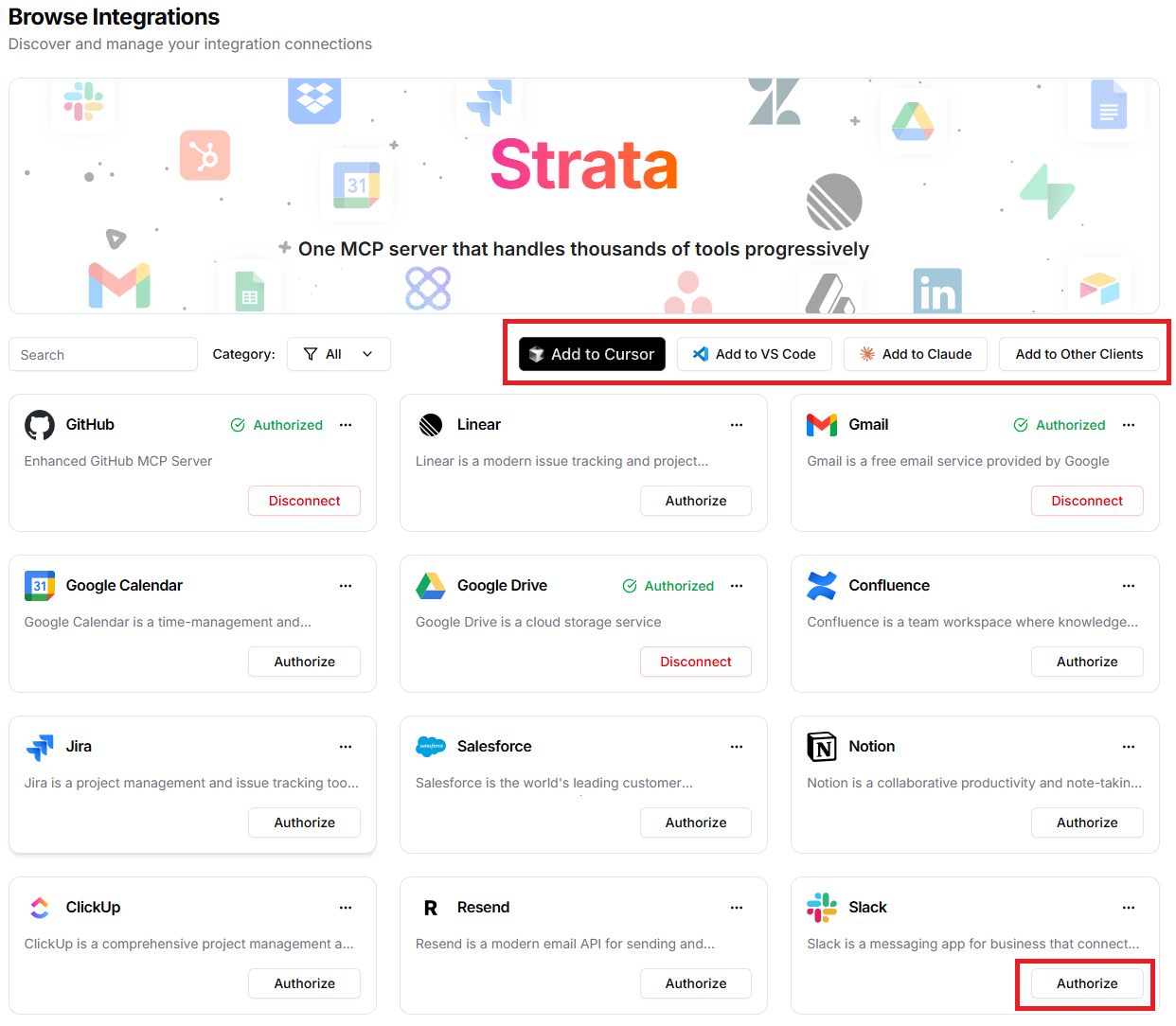

Option 1: Using the Dashboard (2 Minutes)

This is the quickest path if you just want to test things out or you're building a personal project.

- Sign up to Klavis AI

Create an account if you haven't already. It takes about 30 seconds.

- Select Slack from the integrations list

You'll see a bunch of available services. Click on Slack.

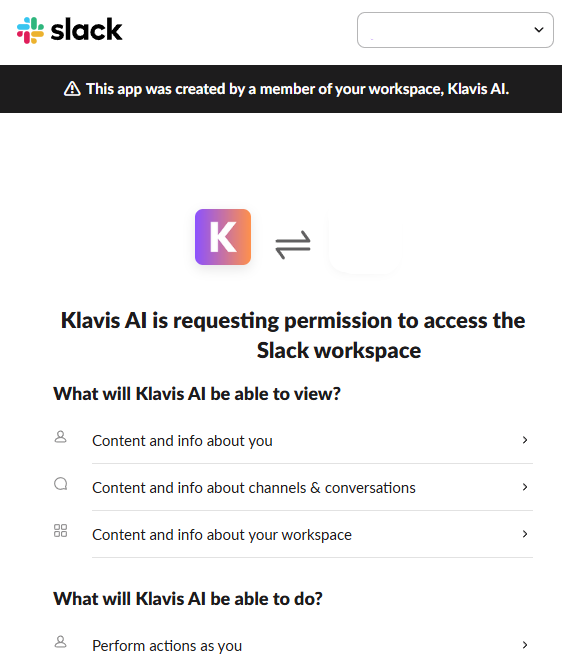

- Authorize your workspace

This opens a standard OAuth flow where you grant permissions. Your Slack admin settings determine what you can authorize, so if something's blocked, that's where to look.

- Add to your client or your AI agent

That's it. Your MCP server is running, authenticated, and ready. You can now add this endpoint to Claude Desktop, Cursor, VS Code, or your custom application.

Option 2: Programmatic Setup (API)

If you're building a product where users need to connect their own Slack workspaces, you'll want the API approach. This gives you control over the flow and lets you embed it in your application.

First, install the SDK:

    pip install klavis

Then create an MCP server instance:

    from klavis import Klavis
    from klavis.types import McpServerName

    client = Klavis(api_key="your_api_key")

    response = client.mcp_server.create_strata_server(
        servers=[McpServerName.SLACK],
        user_id="user_123"
    )

    # Get the OAuth URL for this user
    oauth_url = response.oauth_urls[McpServerName.SLACK]

Send your user to that oauth_url for authorization. Once they complete it, your MCP server is ready. The user_id parameter is important—it ties the Slack connection to a specific user in your system, so you can support multiple users each with their own workspace access.

The response includes your MCP endpoint URL. Your AI agent connects to this endpoint and can immediately start using Slack tools. For more details, check out the Klavis API docs.

What You Get: Available Tools

Your MCP server exposes 18 tools for Slack operations, including the following:

| Tool | What It Does |
|---|---|
| slack_send_message | Post messages to channels |
| slack_list_messages | Pull message history from a channel |
| slack_get_message | Retrieve a specific message by timestamp |
| slack_update_message | Edit an existing message |
| slack_delete_message | Remove a message |
| slack_list_channels | Get all channels in the workspace |
| slack_get_channel_info | Details about a specific channel |
| slack_create_channel | Make a new channel |
| slack_archive_channel | Archive a channel |
| slack_set_channel_topic | Update channel topic |
| slack_invite_user_to_channel | Add someone to a channel |
| slack_kick_user_from_channel | Remove someone from a channel |
| slack_list_users | Get all workspace members |
| slack_get_user_info | Details about a specific user |
| slack_add_reaction | React to a message with an emoji |
| slack_remove_reaction | Remove an emoji reaction |
| slack_upload_file | Upload files to Slack |

Your AI agent can call any of these through MCP. The LLM decides which tool to use based on what it's trying to accomplish, and the MCP server handles execution.
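To make that concrete, here's a rough sketch of what a tool call looks like on the wire. MCP messages are JSON-RPC 2.0, and tools are invoked with the tools/call method. The argument names (channel_id, text) and the channel ID are assumptions for illustration; the real names come from the tool's input schema as reported by the server.

```python
import json
from itertools import count

_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# `channel_id` and `text` are assumed argument names, not confirmed ones.
request = build_tool_call(
    "slack_send_message",
    {"channel_id": "C0123456789", "text": "Deploy finished."},
)
print(json.dumps(request, indent=2))
```

In practice an MCP client library builds and sends this for you; the point is that the LLM only has to produce a tool name and a JSON object of arguments.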

Real-World Use Cases

Incident Response That Doesn't Require Humans to Coordinate

Production incidents are chaos. Someone notices an error spike, creates a Slack channel, tries to figure out who should be there, posts an update, realizes they forgot to invite the database person, sends another message...

An AI agent connected to Slack MCP can handle the entire coordination layer.

Here's what it looks like in practice:

Your monitoring system detects API latency crossing a threshold. Instead of just posting "ALERT: API Latency High" to #incidents, your AI agent:

- Creates a new channel: #incident-2025-10-14-api-latency

- Invites the on-call backend and infrastructure engineers

- Posts a structured initial report with graphs and metrics

- Sets the channel topic with incident ID and severity

- Monitors for acknowledgments—if no one responds in 5 minutes, escalates

- Continues posting updates as it gathers information from other systems

- Archives the channel when resolved and generates a postmortem template

The engineers focus on fixing the problem. The agent handles everything else.
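The first four steps of that list can be sketched as an ordered plan of tool calls. This is a minimal illustration, not the agent's actual logic: the ToolCall type, the plan_incident_kickoff helper, and every argument name are hypothetical, and a real run would use the channel ID returned by slack_create_channel rather than the channel name.

```python
from dataclasses import dataclass, field


@dataclass
class ToolCall:
    tool: str
    arguments: dict = field(default_factory=dict)


def plan_incident_kickoff(date, slug, severity, on_call_user_ids, report):
    """Return the kickoff tool calls in the order they should run."""
    channel = f"incident-{date}-{slug}"
    calls = [ToolCall("slack_create_channel", {"name": channel})]
    # One invite per on-call engineer (argument names are assumptions).
    calls += [
        ToolCall("slack_invite_user_to_channel",
                 {"channel": channel, "user_id": uid})
        for uid in on_call_user_ids
    ]
    calls.append(ToolCall("slack_send_message",
                          {"channel": channel, "text": report}))
    calls.append(ToolCall("slack_set_channel_topic",
                          {"channel": channel,
                           "topic": f"{channel} | severity: {severity}"}))
    return calls


plan = plan_incident_kickoff(
    "2025-10-14", "api-latency", "SEV2",
    ["U_BACKEND", "U_INFRA"], "p95 API latency crossed the alert threshold.",
)
for call in plan:
    print(call.tool, call.arguments)
```

Keeping the plan as plain data makes it easy to log, review, or dry-run before anything touches Slack.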

One team running this setup told me their mean time to resolution dropped by 40% because they eliminated the "who's supposed to be in this channel?" and "did anyone see this?" delays. The agent doesn't get confused, doesn't forget to update stakeholders, and doesn't need to context-switch between tools.

Key tools for this use case:

- slack_create_channel - Spin up incident rooms instantly

- slack_invite_user_to_channel - Assemble the right team

- slack_send_message - Post updates throughout the incident

- slack_set_channel_topic - Keep everyone oriented

- slack_list_messages - Check for acknowledgments

- slack_upload_file - Share logs and diagnostic data
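The acknowledgment check is the piece people most often ask about, so here's one way it might look. It assumes slack_list_messages returns a list of dicts with user and ts fields (Slack timestamps are strings like "1728900000.000100"); the needs_escalation helper and the five-minute default are illustrative.

```python
def needs_escalation(messages, posted_ts, agent_user_id, now, timeout_s=300):
    """True if no human has replied since the alert and the timeout passed.

    `messages` is assumed to be channel history as dicts with "user" and
    "ts" keys; `posted_ts` and `now` are Unix timestamps in seconds.
    """
    acknowledged = any(
        m["user"] != agent_user_id and float(m["ts"]) > posted_ts
        for m in messages
    )
    return not acknowledged and (now - posted_ts) >= timeout_s


history = [{"user": "U_AGENT", "ts": "1000.000100"}]
print(needs_escalation(history, 1000.0, "U_AGENT", now=1301.0))
```

A reply from anyone other than the agent counts as an acknowledgment here; a stricter version could require a reply from someone on the on-call list.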

The agent becomes your incident commander. Not deciding technical solutions, but making sure communication happens correctly and everyone has the information they need.

Customer Support That Actually Has Context

Most support chatbots suck because they don't know anything beyond a fixed knowledge base. A customer asks "what happened with my bug report from last month?" and the bot responds with generic troubleshooting steps.

With Slack MCP access, your AI agent can pull actual conversation history and provide real answers.

Practical example:

You have a #customer-support channel where requests come in (maybe piped from Intercom, email, or directly). When a customer asks about a previous issue, your agent:

- Uses slack_list_messages to search through recent channel history

- Finds the original conversation about their bug report

- Extracts the ticket number that was referenced

- Checks the ticket status in your issue tracker (through another MCP server)

- Responds: "Your report about the Android crash (ticket #3847) was fixed in version 2.3.1, released on October 10th."

The customer gets a real answer in seconds. Your support team doesn't have to play detective through message history.
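The "find the conversation, extract the ticket number" steps could be as simple as the sketch below. The message shape (dicts with text and ts), the "ticket #1234" pattern, and the find_ticket_number helper are all assumptions; in a real agent the LLM might do this matching itself.

```python
import re

# Assumed convention: tickets are referenced as "ticket #<digits>".
TICKET_RE = re.compile(r"ticket\s*#(\d+)", re.IGNORECASE)


def find_ticket_number(messages, keyword):
    """Return the ticket number from the newest message mentioning keyword."""
    matches = []
    for m in messages:
        text = m["text"]
        if keyword.lower() in text.lower():
            hit = TICKET_RE.search(text)
            if hit:
                matches.append((float(m["ts"]), int(hit.group(1))))
    # max() picks the entry with the latest timestamp.
    return max(matches)[1] if matches else None


support_history = [
    {"ts": "100.0", "text": "Android crash on login, filed as ticket #3847"},
    {"ts": "200.0", "text": "Billing question, see ticket #3901"},
]
print(find_ticket_number(support_history, "android crash"))
```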

But here's the critical part: the agent knows when it's out of its depth. For questions that need human judgment, it uses slack_invite_user_to_channel to bring in a specialist, provides them with the full conversation context, and steps back.

One support team using this approach cut their first-response time by 60% while actually increasing customer satisfaction scores because the responses were more accurate and contextual than what stressed human agents were managing to provide during busy periods.

Essential tools:

- slack_list_messages - Pull conversation history

- slack_get_message - Retrieve specific messages for context

- slack_send_message - Respond to customers

- slack_add_reaction - Signal processing status with reactions (👀 = reviewing, ✅ = handled)

- slack_invite_user_to_channel - Escalate to specialists when needed

Making Your Team's Knowledge Actually Accessible

Every company accumulates knowledge in Slack threads. Someone asks "how do we handle database migrations?" and a senior engineer writes a detailed answer. Three months later, someone asks the same question because they can't find that thread in 50,000 messages.

An AI agent with Slack MCP access becomes your institutional memory.

How this works:

You set up an agent monitoring #engineering-questions. When someone asks a question, the agent:

- Searches relevant channels (#devops, #backend, #database) using slack_list_messages

- Finds previous discussions about this topic

- Synthesizes the information

- Responds with the answer plus links to the original threads

"Based on previous discussions, here's our current approach: [synthesized answer]. This was covered in detail [here](link to March thread), with additional context about rollback procedures [here](link to July discussion)."

The person asking gets their answer immediately. The team avoids re-explaining the same thing repeatedly. And the answers reflect your team's actual practices, not generic documentation.
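Here's a deliberately naive sketch of the "find previous discussions and link to them" step, using keyword overlap in place of whatever retrieval your agent really uses. The message shape and the rank_messages helper are assumptions. The permalink helper follows the usual shape of Slack message links (timestamp with the dot removed, prefixed with p), but Slack's chat.getPermalink API method is the authoritative way to get one.

```python
import re


def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rank_messages(question, messages, top_k=3):
    """Rank messages by how many of the question's longer words they share."""
    terms = {t for t in tokens(question) if len(t) > 3}
    scored = [(len(terms & tokens(m["text"])), m) for m in messages]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [m for _, m in scored[:top_k]]


def permalink(workspace, channel_id, ts):
    """Build a message link; `workspace` is your Slack subdomain."""
    return f"https://{workspace}.slack.com/archives/{channel_id}/p{ts.replace('.', '')}"


threads = [
    {"channel": "C024BE91L", "ts": "1710000000.000200",
     "text": "For database migrations we run Alembic and keep a rollback script."},
    {"channel": "C024BE91M", "ts": "1710000500.000100",
     "text": "Lunch is at noon today."},
    {"channel": "C024BE91N", "ts": "1720000000.000300",
     "text": "Migrations are frozen on Fridays."},
]
for m in rank_messages("How do we handle database migrations?", threads):
    print(permalink("acme", m["channel"], m["ts"]), m["text"])
```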

You can take this further with async standup automation. Instead of everyone joining a call, team members post updates naturally throughout the day. The agent collects these, compiles a summary, and posts it each morning. Everyone gets visibility without scheduling overhead.
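The standup compilation could look something like this. It assumes updates arrive as dicts with user, ts, and text fields, and that display names were looked up separately (for example via slack_get_user_info); compile_standup is a hypothetical helper, and a real agent would likely have the LLM write the summary prose.

```python
from collections import defaultdict


def compile_standup(messages, display_names):
    """Group updates by author, in chronological order, into one summary."""
    by_user = defaultdict(list)
    for m in sorted(messages, key=lambda m: float(m["ts"])):
        by_user[m["user"]].append(m["text"])
    lines = ["Daily standup summary"]
    for user_id, updates in by_user.items():
        # Fall back to the raw user ID if no display name is known.
        name = display_names.get(user_id, user_id)
        lines.append(f"- {name}: " + "; ".join(updates))
    return "\n".join(lines)


updates = [
    {"user": "U2", "ts": "20.0", "text": "Reviewing PR"},
    {"user": "U1", "ts": "10.0", "text": "Shipped login fix"},
    {"user": "U1", "ts": "30.0", "text": "Starting cache work"},
]
print(compile_standup(updates, {"U1": "Ana"}))
```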

Key tools:

- slack_list_messages - Search across channels for information

- slack_send_message - Deliver synthesized answers

- slack_get_user_info - Identify subject matter experts for complex topics

- slack_list_channels - Discover where different topics are discussed

This isn't revolutionary technology—it's just making the knowledge you already have actually findable.

What About Security and Permissions?

Three things to think about before you give an AI agent Slack access:

1. Permissions are inherited from the authorizing user

When someone authorizes your application, the AI agent gets their Slack permissions. It can only see channels they can see, and only perform actions they're allowed to perform. If you need broader access, use a service account with appropriate permissions.

2. Multi-tenant isolation matters

If you're building a product with multiple users, make sure each user's agent is isolated. Use the user_id parameter when creating MCP servers so User A's agent can't access User B's workspace. This is built into the Klavis platform but worth understanding.
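On your side of the integration, the same rule applies: look up the MCP endpoint by user_id and fail closed if there isn't one. The ServerRegistry class and the example.invalid URLs below are hypothetical; the point of the sketch is that there's no shared fallback endpoint.

```python
class ServerRegistry:
    """Maps each application user to their own MCP endpoint."""

    def __init__(self):
        self._endpoints = {}

    def register(self, user_id, endpoint_url):
        self._endpoints[user_id] = endpoint_url

    def endpoint_for(self, user_id):
        # Fail closed: never fall back to another user's connection.
        try:
            return self._endpoints[user_id]
        except KeyError:
            raise PermissionError(
                f"no Slack connection for user {user_id!r}"
            ) from None


registry = ServerRegistry()
registry.register("user_a", "https://example.invalid/mcp/user_a")
print(registry.endpoint_for("user_a"))
```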

3. Log everything

Every action your agent takes should be logged with user context, timestamp, and result. You need this for debugging (when something goes wrong) and compliance (when someone asks "who deleted that message?").
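A thin wrapper around whatever function actually sends tool calls is usually enough. In this sketch, call_tool stands in for your MCP client's call function and log is any object with an append method; both are assumptions, as is the record layout.

```python
import json
import time


def audited(call_tool, log, user_id, clock=time.time):
    """Wrap `call_tool` so every call is recorded, whether it succeeds or not."""
    def wrapper(tool, arguments):
        record = {"ts": clock(), "user_id": user_id,
                  "tool": tool, "arguments": arguments}
        try:
            result = call_tool(tool, arguments)
            record["status"] = "ok"
            return result
        except Exception as exc:
            record["status"] = "error"
            record["error"] = str(exc)
            raise
        finally:
            # Runs on both success and failure, so nothing goes unlogged.
            log.append(json.dumps(record))
    return wrapper


audit_log = []
fake_call = lambda tool, arguments: {"ok": True}  # stand-in for a real client
call = audited(fake_call, audit_log, "user_123", clock=lambda: 0.0)
call("slack_delete_message", {"ts": "1728900000.000100"})
print(audit_log[0])
```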

Most production deployments start with read-only agents for knowledge retrieval, then gradually enable write permissions as they gain confidence in the agent's behavior.
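One simple way to start read-only is to filter the tool list before the LLM ever sees it. The tool names below come from the table earlier in this post; the allowed_tools helper and the write_enabled flag are illustrative.

```python
READ_ONLY_TOOLS = frozenset({
    "slack_list_messages", "slack_get_message",
    "slack_list_channels", "slack_get_channel_info",
    "slack_list_users", "slack_get_user_info",
})


def allowed_tools(all_tools, write_enabled=False):
    """Return the tool names the agent is allowed to see."""
    if write_enabled:
        return list(all_tools)
    return [t for t in all_tools if t in READ_ONLY_TOOLS]


exposed = ["slack_send_message", "slack_list_messages", "slack_delete_message"]
print(allowed_tools(exposed))
```

A tool the model never sees is a tool it can't call by mistake, which is a stronger guarantee than asking it nicely in the system prompt.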

Frequently Asked Questions

Can I use this with GPT-4, or does it only work with Claude?

MCP is model-agnostic. Any LLM that supports function calling or tool use works fine: GPT-4, Claude, Llama, Mistral, whatever. The key is that your application layer (whether you're using LangChain, LlamaIndex, or custom code) needs to speak MCP. The LLM just sees tool definitions and makes calls.
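As an example of what the application layer does, here's a small sketch that converts an MCP tool definition (name, description, inputSchema) into the function-calling format used by OpenAI-style chat APIs. The sample schema for slack_send_message is an assumption; a real one comes from the server's tool listing.

```python
def mcp_tool_to_openai(tool: dict) -> dict:
    """Convert an MCP tool definition to an OpenAI-style function tool."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            # MCP's inputSchema is already JSON Schema, so it passes through.
            "parameters": tool["inputSchema"],
        },
    }


# Hypothetical definition; field names inside the schema are assumed.
sample_tool = {
    "name": "slack_send_message",
    "description": "Post messages to channels",
    "inputSchema": {
        "type": "object",
        "properties": {
            "channel_id": {"type": "string"},
            "text": {"type": "string"},
        },
        "required": ["channel_id", "text"],
    },
}
print(mcp_tool_to_openai(sample_tool)["function"]["name"])
```

Frameworks with MCP adapters do this translation for you; it's shown here only to demystify it.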

What happens if my agent screws up and posts something wrong?

Design for this. Start with read-only permissions if you're nervous. Implement approval workflows for high-impact actions like inviting users or archiving channels. Log everything so you can audit behavior. Many teams use a staging Slack workspace for testing before going to production. You can also use slack_update_message to correct errors and slack_add_reaction to flag low-confidence posts for human review (for example, a ⚠️ reaction on anything the agent is unsure about).
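An approval workflow can start as a single gate in front of the tool call. In this sketch, the HIGH_IMPACT set is my own pick from the tool table, and approve and call_tool are placeholders for however you ask a human and however you reach the MCP server.

```python
HIGH_IMPACT = frozenset({
    "slack_invite_user_to_channel", "slack_kick_user_from_channel",
    "slack_archive_channel", "slack_delete_message",
})


def execute(tool, arguments, call_tool, approve):
    """Run low-impact tools directly; ask for approval on high-impact ones."""
    if tool in HIGH_IMPACT and not approve(tool, arguments):
        return {"status": "rejected", "tool": tool}
    return {"status": "done", "result": call_tool(tool, arguments)}


fake_call = lambda tool, arguments: "ok"   # stand-in for a real MCP client
deny_all = lambda tool, arguments: False   # stand-in for a human reviewer
print(execute("slack_archive_channel", {"channel": "C1"}, fake_call, deny_all))
print(execute("slack_list_channels", {}, fake_call, deny_all))
```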

How do I handle rate limits?

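Slack enforces rate limits per method and per workspace, and the allowance depends on the method's tier: the most restricted methods allow roughly one request per minute, while posting messages is generally limited to about one per second per channel. Production MCP servers handle this for you: they queue requests, implement backoff, and return clear errors if limits are hit. This is one of the big advantages over direct API integration, where you have to build this yourself.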
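For a sense of what "build this yourself" means, here's a minimal retry loop. Slack signals a rate limit with an HTTP 429 response and a Retry-After header giving the wait in seconds; the RateLimited exception and call_with_backoff helper are hypothetical stand-ins for however your HTTP layer surfaces that.

```python
import time


class RateLimited(Exception):
    """Raised by the (hypothetical) transport when Slack answers HTTP 429."""

    def __init__(self, retry_after: float):
        super().__init__(f"rate limited, retry after {retry_after}s")
        self.retry_after = retry_after


def call_with_backoff(call, max_attempts=5, sleep=time.sleep):
    """Retry `call`, waiting as long as Slack asked, up to max_attempts."""
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except RateLimited as exc:
            if attempt == max_attempts:
                raise
            sleep(exc.retry_after)


# Simulated call that is rate limited twice before succeeding.
attempts, waits = [], []

def flaky_send():
    attempts.append(1)
    if len(attempts) < 3:
        raise RateLimited(retry_after=2)
    return "sent"

print(call_with_backoff(flaky_send, sleep=waits.append))
```

Passing sleep in as a parameter keeps the loop testable without real waiting. A production version would also need queuing across concurrent callers, which is where a managed server earns its keep.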

Ready to Build?

The gap between "impressive LLM demo" and "useful production tool" usually comes down to integration. Your model might be brilliant, but if it can't interact with the tools your team uses, it's just an expensive chatbot.

Slack MCP servers solve this for one of the most critical platforms in modern development. Whether you're automating incident response, building intelligent support systems, or making your team's knowledge accessible, giving your AI agent structured access to Slack changes what's possible.

The setup takes minutes. The impact is immediate. Teams report 3-4x faster response times, 40-50% reduction in repetitive work, and significantly better information access.

Check out Klavis AI's Slack MCP integration to get your AI agents connected to Slack—production-ready servers with built-in OAuth, all 18 tools, and APIs that work with any LLM. You can be testing in under 5 minutes.