The Short Version: Give Your AI Access to Your Live Data

Large Language Models (LLMs) are powerful reasoning engines, but they are isolated from the context of your actual business operations. To build truly intelligent, autonomous features, you must connect your AI to your application's source of truth. For a rapidly growing number of developers, that source of truth is Supabase, the open-source, Postgres-based backend.

This guide explores why connecting natural language to your database infrastructure changes how teams build and operate applications, and walks through a secure, scalable way to implement it today.

Why Connect an LLM? Moving Beyond the Dashboard

Integrating Supabase with an LLM isn't just about creating a novel chatbot; it fundamentally changes how your team and your end-users interact with data and infrastructure. It shifts operations from manual, context-heavy tasks performed in a dashboard or CLI to intent-based automation.

By giving an AI agent secure access to Supabase tools, you democratize data access and accelerate workflows.

Here is a comparison of the traditional workflow versus an AI-augmented approach:

| Aspect | Manual Supabase Workflow | AI-Assisted Workflow |
|---|---|---|
| Data Retrieval | Requires knowing SQL, understanding the specific schema, and manually executing queries. | Users ask questions in plain English. The AI handles schema discovery and SQL generation. |
| Accessibility | Limited to developers, DBAs, or users with access to BI tools. | Accessible to non-technical stakeholders (Support, Marketing, PMs) via chat interfaces. |
| Infrastructure Ops | Navigating the Supabase dashboard or using the CLI to branch, migrate, or manage settings. | Executed via natural language commands (e.g., "Create a staging branch for PR #42"). |
| Complex Workflows | Human must chain tasks together (e.g., query data -> format it -> call another API). | AI agents can autonomously chain Supabase tools with other API calls to complete complex goals. |
| Vector Search (RAG) | Requires manually generating embeddings, writing complex pgvector queries, and managing context. | The AI handles the entire Retrieval-Augmented Generation (RAG) pipeline dynamically based on user intent. |

Secure Integration in Minutes

To bridge this gap securely, don't paste connection strings into prompts. Instead, use the Model Context Protocol (MCP), an open standard for connecting AI applications to external tools and data sources that builds on the same tool-calling pattern supported by leading model providers.

Using a managed provider like Klavis AI simplifies this dramatically. Through their unified Strata architecture, you get a production-ready Supabase MCP server that handles the heavy lifting of secure OAuth authentication and tool definition.

With just a few lines of Python, you can initialize a secure endpoint that grants your AI agent capability-based access to Supabase without ever exposing credentials. You can get started quickly using the SDK:

```python
from klavis import Klavis
from klavis.types import McpServerName

# Initialize the client with your Klavis API key
klavis_client = Klavis(api_key="YOUR_API_KEY")

# Create a unified Strata server that includes the Supabase integration
strata_server = klavis_client.mcp_server.create_strata_server(
    servers=[McpServerName.SUPABASE],
    user_id="app-user-12345",  # A unique identifier for your end-user
)

# If the user has not yet authorized Supabase, send them the OAuth URL(s)
if strata_server.oauth_urls:
    for server_name, auth_url in strata_server.oauth_urls.items():
        print(f"Please authorize {server_name}: {auth_url}")

# Once authorized, this single URL is all your AI agent needs to access tools
print(f"MCP Endpoint: {strata_server.strata_server_url}")
```
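Under the hood, an MCP client talks to that endpoint with JSON-RPC 2.0 messages, and tool invocations use the `tools/call` method defined in the MCP specification. The sketch below only builds such a request payload; it does not send it. The tool name `supabase_execute_sql` comes from the examples later in this guide, while the `query` argument name is an assumption: check the `inputSchema` the server returns from `tools/list` before relying on it.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical arguments: the tool's inputSchema from tools/list defines the real ones
message = build_tool_call(
    "supabase_execute_sql",
    {"query": "select count(*) from users;"},
)
print(message)
```

In practice an MCP client library or your agent framework builds and sends these messages for you; the sketch only shows what travels over the wire.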

Smarter Tool Use with Progressive Discovery

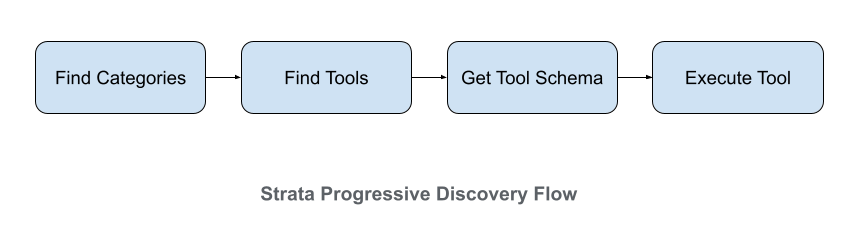

Why use a platform like Klavis? The answer lies in how the AI interacts with the tools. The common approach of exposing dozens of tools to an LLM at once often makes it harder for the model to pick the right tool and degrades overall performance.

Klavis's Strata server uses a "progressive discovery" design pattern. Instead of overwhelming the LLM with every single Supabase function, it first presents high-level categories like database, storage, and projects. Only after the AI selects a category does it reveal the specific tools within it. This guided, step-by-step process reduces noise and narrows each decision, which can substantially improve the AI's ability to complete complex tasks.
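The idea can be illustrated with a small, self-contained sketch. This is not Klavis's implementation: the two-level catalog below is hypothetical, with category names taken from the paragraph above and tool names from the examples in this guide. The model first sees only the category names, then only the tools of the category it picked.

```python
# Hypothetical two-level catalog; Strata's real categories and tools may differ.
CATALOG: dict = {
    "database": ["supabase_list_tables", "supabase_list_columns", "supabase_execute_sql"],
    "storage": ["supabase_download_storage_object"],
    "projects": [
        "supabase_list_projects",
        "supabase_get_project_health",
        "supabase_restore_project",
        "supabase_create_branch",
    ],
}


def discover_categories() -> list:
    """Step 1: expose only the high-level categories to the model."""
    return sorted(CATALOG)


def discover_tools(category: str) -> list:
    """Step 2: reveal the tools of the single category the model selected."""
    if category not in CATALOG:
        raise KeyError(f"unknown category: {category!r}")
    return CATALOG[category]


def flat_tool_count() -> int:
    """For comparison: how many tools a flat, all-at-once listing would expose."""
    return sum(len(tools) for tools in CATALOG.values())


print(discover_categories())       # what the model sees first
print(discover_tools("database"))  # what it sees after choosing "database"
print(flat_tool_count())
```

With three categories, the model chooses among three options and then among at most four tools, rather than among all eight at once; the gap widens as the catalog grows.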

Putting it to Work: 4 Real-World Examples

Once connected, what can you actually build? Here are four practical applications that directly leverage the rich toolset available for Supabase.

1. The Autonomous Customer Support Agent

An AI agent can provide instant, context-aware support by securely accessing customer and project data.

- User: "I can't access my project dashboard. Can you check the status of my main project?"

- AI Agent's Workflow:

  - The agent first identifies the user with `supabase_get_user`.

  - It then calls `supabase_list_projects` to find all projects associated with that user.

  - Finally, it uses `supabase_get_project_health` on the relevant project to check its status.

- Result: The agent responds, "I see your project 'WebApp-Prod' is currently paused. Would you like me to restore it using the `supabase_restore_project` tool?"
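The workflow above is a fixed chain of three tool calls. A minimal sketch, assuming a generic `call_tool(name, arguments)` function that forwards calls to the MCP server; the argument names (`email`, `user_id`, `project_id`) and response fields (`id`, `name`, `status`) are hypothetical placeholders, not the real tool schemas. The fake `call_tool` at the bottom stands in for the server so the chain runs offline.

```python
from typing import Any, Callable

ToolCaller = Callable[[str, dict], Any]


def check_main_project(call_tool: ToolCaller, email: str) -> str:
    """Chain user lookup -> project listing -> health check, then suggest a fix."""
    user = call_tool("supabase_get_user", {"email": email})  # hypothetical args
    projects = call_tool("supabase_list_projects", {"user_id": user["id"]})
    if not projects:
        return "I couldn't find any projects for your account."
    project = projects[0]  # Assumption: the first project is the user's "main" one
    health = call_tool("supabase_get_project_health", {"project_id": project["id"]})
    if health["status"] == "paused":
        return (
            f"I see your project '{project['name']}' is currently paused. "
            "Would you like me to restore it using the supabase_restore_project tool?"
        )
    return f"Your project '{project['name']}' reports status '{health['status']}'."


def fake_call_tool(name: str, arguments: dict) -> Any:
    """Offline stand-in for the MCP server, returning canned responses."""
    responses = {
        "supabase_get_user": {"id": "u-1"},
        "supabase_list_projects": [{"id": "p-1", "name": "WebApp-Prod"}],
        "supabase_get_project_health": {"status": "paused"},
    }
    return responses[name]


print(check_main_project(fake_call_tool, "ada@example.com"))
```

The sketch stops at suggesting `supabase_restore_project` rather than calling it: state-changing actions are a natural place to ask the user for confirmation first.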

2. The Natural Language Business Analyst

Empower your team to query data without writing SQL. An AI analyst can explore the database and generate insights on the fly.

- User: "How many new users from Germany signed up last month?"

- AI Agent's Workflow:

  - The agent doesn't know the schema, so it first calls `supabase_list_tables`.

  - Seeing a `users` table, it then calls `supabase_list_columns` for that table to discover the available fields.

  - With knowledge of the `country` and `signed_up_at` columns, it constructs and runs a query with `supabase_execute_sql` to get the final count.

- Result: The AI confidently answers, "There were 427 new users from Germany last month," having dynamically learned the schema to answer the question.
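To make the discover-then-query loop concrete, here is a runnable sketch that uses Python's built-in `sqlite3` as a stand-in for Postgres. On Supabase, the equivalent information would come from `supabase_list_tables` and `supabase_list_columns`; here SQLite's `sqlite_master` table and `PRAGMA table_info` play that role. The `users` table, its columns, and the sample rows are invented for illustration, and "last month" is hard-coded as a date range to keep the example deterministic.

```python
import sqlite3

# In-memory SQLite database standing in for Postgres; table and rows are invented
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE users (id INTEGER PRIMARY KEY, country TEXT, signed_up_at TEXT);
    INSERT INTO users (country, signed_up_at) VALUES
        ('Germany', '2025-09-03'), ('Germany', '2025-09-28'),
        ('France', '2025-09-10'), ('Germany', '2025-08-30');
    """
)


def list_tables(conn: sqlite3.Connection) -> list:
    """Stand-in for supabase_list_tables."""
    rows = conn.execute("SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")
    return [name for (name,) in rows]


def list_columns(conn: sqlite3.Connection, table: str) -> list:
    """Stand-in for supabase_list_columns; index 1 of each PRAGMA row is the column name."""
    # The table name comes from list_tables(), never from raw user input
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]


# Step 1: discover the schema instead of assuming it
tables = list_tables(conn)
columns = list_columns(conn, "users") if "users" in tables else []

# Step 2: build the query only once the needed columns are confirmed to exist
germany_count = None
if {"country", "signed_up_at"} <= set(columns):
    germany_count = conn.execute(
        "SELECT count(*) FROM users"
        " WHERE country = ? AND signed_up_at >= ? AND signed_up_at < ?",
        ("Germany", "2025-09-01", "2025-10-01"),  # "last month", hard-coded
    ).fetchone()[0]
    print(f"There were {germany_count} new users from Germany last month.")
```

In Postgres, the month bounds would more typically be computed in SQL, for example with `date_trunc('month', now()) - interval '1 month'` and `date_trunc('month', now())`, instead of hard-coded dates.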

3. The AI-Powered DevOps Assistant

Automate your development and deployment pipeline by letting an AI agent manage your Supabase infrastructure.

- Developer: "Create a staging branch for pull request #117 and apply the latest schema migration."

- AI Agent's Workflow:

  - The agent receives the request and calls `supabase_create_branch` with the name `pr-117`.

  - Once the branch is successfully created, it follows up by calling `supabase_apply_migration` to update the new branch's database schema.

  - As a final step, it could even call `supabase_generate_typescript_types` to create fresh types for the front-end team.

- Result: The agent reports back: "Branch `pr-117` has been created, the latest migration is applied, and new TypeScript types are available. The staging environment is ready for testing."
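Because each step depends on the previous one, the chain should stop at the first failure rather than applying a migration to a branch that was never created. A minimal sketch, again assuming a generic `call_tool` function; the argument names (`name`, `branch`) and the `{"ok": ...}` response shape are hypothetical. Type generation is marked optional, mirroring the "could even call" step above.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

ToolCaller = Callable[[str, dict], Any]


@dataclass
class Step:
    tool: str
    arguments: dict  # hypothetical argument names
    optional: bool = False  # optional steps may fail without aborting the run


@dataclass
class PipelineResult:
    completed: list = field(default_factory=list)
    failed: Optional[str] = None


def run_pipeline(call_tool: ToolCaller, steps: list) -> PipelineResult:
    """Run tool calls in order and stop at the first failed required step."""
    result = PipelineResult()
    for step in steps:
        response = call_tool(step.tool, step.arguments)
        if response.get("ok"):  # assumed response shape: {"ok": bool, ...}
            result.completed.append(step.tool)
        elif not step.optional:
            result.failed = step.tool
            break
    return result


branch = "pr-117"
steps = [
    Step("supabase_create_branch", {"name": branch}),
    Step("supabase_apply_migration", {"branch": branch}),
    Step("supabase_generate_typescript_types", {"branch": branch}, optional=True),
]

# Offline stand-in for the MCP server: every call succeeds
print(run_pipeline(lambda name, arguments: {"ok": True}, steps))
```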

4. The Smarter RAG Pipeline with Document Storage

Build a powerful Retrieval-Augmented Generation (RAG) system that combines vector search on metadata with full document retrieval from Supabase Storage.

- User: "Can you summarize the key findings from the Q3 2025 financial report?"

- AI Agent's Workflow:

  - First, the agent embeds the user's question (with the same model used to embed the stored summaries) and uses `supabase_execute_sql` to perform a vector search on a `documents` table containing metadata and summaries. This query identifies the document `q3-financials-2025.pdf`.

  - The SQL query returns the file's location in Supabase Storage.

  - The agent then uses `supabase_download_storage_object` with the correct bucket and file path to retrieve the full PDF.

  - Finally, it processes the document's contents to provide a detailed summary.

- Result: The agent delivers a concise summary of the report, having seamlessly moved from a database query to a file storage operation.
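The two hops (SQL vector search, then a Storage download) can be sketched as below. Several pieces are assumptions: the `documents` table with `bucket`, `path`, and `embedding` columns, the `query`/`bucket`/`path` tool arguments, and the result shapes. A made-up three-dimensional vector stands in for a real query embedding. `<=>` is pgvector's cosine-distance operator, so ordering by it ascending puts the closest match first.

```python
from typing import Any, Callable

ToolCaller = Callable[[str, dict], Any]


def to_vector_literal(embedding: list) -> str:
    """Format numbers as a pgvector text literal, e.g. [0.1,0.2,0.3]."""
    return "[" + ",".join(str(float(x)) for x in embedding) + "]"


def build_search_sql(embedding: list, limit: int = 1) -> str:
    """Nearest-neighbour query over a hypothetical documents(bucket, path, embedding) table."""
    vector = to_vector_literal(embedding)
    return (
        "select bucket, path from documents "
        f"order by embedding <=> '{vector}'::vector "
        f"limit {int(limit)}"
    )


def fetch_best_document(call_tool: ToolCaller, embedding: list) -> bytes:
    """Hop 1: vector search via SQL. Hop 2: download the matching file from Storage."""
    rows = call_tool("supabase_execute_sql", {"query": build_search_sql(embedding)})
    if not rows:
        raise LookupError("no matching document")
    best = rows[0]
    return call_tool(
        "supabase_download_storage_object",
        {"bucket": best["bucket"], "path": best["path"]},
    )


def fake_call_tool(name: str, arguments: dict) -> Any:
    """Offline stand-in for the MCP server; the bucket name is invented."""
    if name == "supabase_execute_sql":
        return [{"bucket": "reports", "path": "q3-financials-2025.pdf"}]
    if name == "supabase_download_storage_object":
        return b"%PDF-1.7 ..."
    raise ValueError(f"unexpected tool: {name}")


# A made-up 3-dimensional vector standing in for a real query embedding
query_embedding = [0.12, -0.4, 0.88]
print(build_search_sql(query_embedding))
pdf = fetch_best_document(fake_call_tool, query_embedding)
```

Splicing the vector into the SQL string is tolerable here only because `to_vector_literal` coerces every element to `float`; free-form user text should never be interpolated into a query this way.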

FAQs

1. Is this approach secure? Yes. By using a managed MCP server with OAuth, you avoid handling long-lived database credentials directly in the LLM's prompt or your application code. Access is also bounded by the authenticated user's token: the agent can only call the tools and reach the data that the user's OAuth grant permits, which lets you apply the principle of least privilege by keeping that grant as narrow as your use case allows.

2. Can I use this with Supabase's vector search (pgvector)? Absolutely. The `supabase_execute_sql` tool is your gateway to pgvector, the open-source Postgres extension for vector similarity search. You can execute any valid PostgreSQL query, including vector similarity searches using operators like `<=>` (cosine distance). This makes it a natural fit for building advanced RAG applications on top of your existing Supabase data.
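To see what `<=>` returns: in pgvector it is cosine distance, defined as one minus cosine similarity, so 0 means the vectors point the same way and larger values mean less similar (`<->` is Euclidean distance and `<#>` is negative inner product). The pure-Python function below mirrors that definition so you can sanity-check query results; it illustrates the math and is not pgvector code.

```python
import math


def cosine_distance(a: list, b: list) -> float:
    """1 - cosine similarity: the quantity pgvector's <=> operator returns."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm_a * norm_b)


query = [1.0, 0.0]
docs = {"same direction": [2.0, 0.0], "orthogonal": [0.0, 3.0], "opposite": [-1.0, 0.0]}

# Closest first, like: SELECT ... ORDER BY embedding <=> query LIMIT k
ranked = sorted(docs, key=lambda name: cosine_distance(query, docs[name]))
print(ranked)  # ['same direction', 'orthogonal', 'opposite']
```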

3. Do I need to manually define the database schema for the LLM? No. As the Business Analyst example shows, the agent can call tools like `supabase_list_tables` and `supabase_list_columns` to explore and understand your database schema before constructing a query, which helps reduce errors and hallucinated table or column names.