TL;DR

This article shows why you should integrate large language models (LLMs) directly with your GitHub workflow using the Model Context Protocol (MCP), and how to do so with Klavis Strata MCP. It then demonstrates three real use cases with Klavis Strata MCP that automate common GitHub tasks: issue management, code review, and CI/CD monitoring.

The Silent Productivity Killer Hiding in Your Browser Tabs

Manually managing GitHub repositories can quickly become a significant burden, especially as projects and teams scale in size and complexity. The repetitive nature of tasks such as creating repositories, managing user permissions, and ensuring consistent branch protection policies across multiple projects consumes valuable developer time. This manual overhead not only slows down development workflows but also increases the risk of human error, leading to inconsistent configurations and potential security vulnerabilities.

Let's quantify this. Imagine the manual steps involved in common GitHub tasks and compare them to a world where an LLM assistant handles the logistics.

| Task | Manual Management (The "Before") | LLM-Powered Automation (The "After") | Estimated Time Saved (Per Week) |
|---|---|---|---|
| Pull Request Review | Open GitHub, find the repo, click on "Pull requests," filter or search for the right one, read through files, cross-reference with related issues in another tab. | In your IDE or terminal: "Summarize the changes in the latest PR from the 'feature-auth' branch and tell me if it resolves issue #112." | 2-3 hours |
| Issue Triage & Management | Navigate to the "Issues" tab, use filters, read comment threads to understand the context, manually assign labels, assign a developer, and add to a project board. | "Find all open bugs with the 'critical' label that haven't been updated in a week. Assign them to the on-call engineer and post a summary in the #dev-ops Slack channel." | 1-2 hours |
| Project & Repo Scaffolding | Go to GitHub, click "New repository," choose a template (if you remember which one), clone the repo, create initial branches, set up branch protection rules, and invite collaborators. | "Create a new repository named 'nova-service' from our standard Python template. Set up a 'dev' branch, protect 'main' to require at least one approval, and grant the backend team write access." | 30-60 minutes |
| Code & Knowledge Discovery | Manually browse directories, use GitHub's search bar with specific keywords, and piece together how a function is used across the codebase by opening multiple files. | "Show me where the calculate_user_metrics function is defined in the 'analytics' service and provide three examples of how it's used elsewhere in the mono-repo." | 2-3 hours |
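
To make the issue-triage row a little more concrete, here is a rough sketch of the query an assistant would have to derive from the "stale critical bugs" prompt. The search qualifiers (`repo:`, `is:issue`, `is:open`, `label:`, `updated:`) are GitHub's own; the helper name `stale_issue_query`, the repository name, and the seven-day default are assumptions made purely for illustration.

```python
from datetime import date, timedelta


def stale_issue_query(repo: str, label: str, today: date, days: int = 7) -> str:
    """Build a GitHub issue-search query for open issues not updated in `days` days.

    Hypothetical helper for illustration; only the search qualifiers are GitHub's.
    """
    cutoff = today - timedelta(days=days)
    return f'repo:{repo} is:issue is:open label:"{label}" updated:<{cutoff.isoformat()}'


if __name__ == "__main__":
    # The repository name is made up for the example.
    print(stale_issue_query("acme/nova-service", "critical", date(2025, 1, 15)))
```

Done by hand, this is the part people usually get slightly wrong (the date arithmetic, the qualifier spelling), which is why it is a natural thing to delegate.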

The Right Way to Connect Your LLM to GitHub

So, how do you actually give an LLM the keys to your GitHub kingdom? A naive approach might be to just feed raw API documentation into the model's context window. This is a recipe for disaster. The official GitHub REST API is massive, with thousands of possible endpoints and variations. Presenting all of this at once is like trying to teach someone a language by handing them a dictionary. The model will be overwhelmed, leading to high error rates, slow response times, and incorrect actions, a phenomenon often called "tool overload."

This is precisely the problem the Model Context Protocol (MCP) was created to solve. MCP is an open standard that acts as a structured, secure bridge between an LLM and any external tool. Think of it as a universal translator and a smart guide rolled into one. It allows the tool (like a GitHub server) to tell the LLM what it can do in a way the model can understand, without flooding it with irrelevant details.
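
As a minimal sketch of what "telling the LLM what it can do" looks like, an MCP server answers a `tools/list` request with tool descriptors that carry a `name`, a `description`, and a JSON Schema under `inputSchema`. Those field names come from the MCP specification; the `list_pull_requests` tool and the helper functions below are invented for illustration and are not taken from any real GitHub MCP server.

```python
import json

# Hypothetical tool descriptor. The keys (name, description, inputSchema)
# follow the MCP spec; the tool itself is made up for this example.
LIST_PRS_TOOL = {
    "name": "list_pull_requests",
    "description": "List pull requests in a GitHub repository.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "owner": {"type": "string"},
            "repo": {"type": "string"},
            "state": {"type": "string", "enum": ["open", "closed", "all"]},
        },
        "required": ["owner", "repo"],
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return the required argument names that the caller did not supply."""
    required = tool["inputSchema"].get("required", [])
    return [name for name in required if name not in arguments]


def tools_list_result(tools: list[dict]) -> str:
    """Serialize a JSON-RPC response shaped like an MCP `tools/list` result."""
    return json.dumps({"jsonrpc": "2.0", "id": 1, "result": {"tools": tools}}, indent=2)


if __name__ == "__main__":
    print(tools_list_result([LIST_PRS_TOOL]))
    print(missing_arguments(LIST_PRS_TOOL, {"owner": "acme"}))
```

Because the schema is machine-readable, a client can check a model's proposed arguments before anything reaches GitHub.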

Overcome Context Overload

But even with a standard like MCP, the "tool overload" problem can persist if the tool itself is incredibly complex. This is where a more intelligent implementation becomes critical. Klavis Strata provides a unified MCP server that introduces a brilliant layer of abstraction: progressive discovery.

Instead of exposing the entire GitHub API surface at once, Strata guides the LLM through a logical decision tree.

- It starts with high-level intent: The LLM first figures out what the user wants to do (e.g., "manage a pull request").

- It navigates to the right category: Strata then presents a limited set of relevant tool categories (e.g., "list PRs," "comment on a PR," "merge a PR").

- It reveals the specific action: Only when the LLM has drilled down to a specific action does Strata expose the final API details needed for execution.

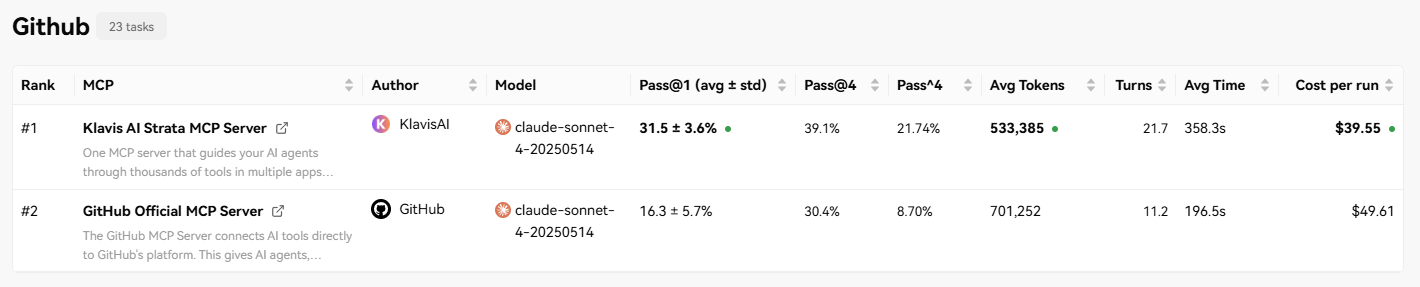

This guided approach is far more efficient and reliable, and the performance data bears this out. In head-to-head benchmarks, the Klavis Strata-powered GitHub MCP server achieved a 15.2% higher pass@1 rate than the official GitHub MCP server.
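
The three-step drill-down described above can be sketched with a toy catalog. This is only an illustration of the idea of progressive discovery, not Strata's actual API: the category names, action names, and the functions `list_categories`, `list_actions`, and `describe_action` are all assumptions.

```python
# Toy catalog: category -> action -> parameter schema. All names are invented.
CATALOG = {
    "pull_requests": {
        "list_prs": {"owner": "string", "repo": "string", "state": "string"},
        "merge_pr": {"owner": "string", "repo": "string", "number": "integer"},
    },
    "issues": {
        "create_issue": {"owner": "string", "repo": "string", "title": "string"},
    },
    "actions": {
        "list_workflow_runs": {"owner": "string", "repo": "string"},
    },
}


def list_categories() -> list[str]:
    """Step 1: expose only the category names."""
    return sorted(CATALOG)


def list_actions(category: str) -> list[str]:
    """Step 2: expose the action names inside one category."""
    return sorted(CATALOG[category])


def describe_action(category: str, action: str) -> dict:
    """Step 3: expose the full parameter schema for a single action."""
    return CATALOG[category][action]


if __name__ == "__main__":
    print(list_categories())
    print(list_actions("pull_requests"))
    print(describe_action("pull_requests", "merge_pr"))
```

At each step the model sees a short list of names, and only one full schema ever enters its context, instead of every schema in the catalog at once.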

How to Get Started

Integrating this into your own AI application is surprisingly simple. You can self-host the open-source server or use a managed service for a zero-setup experience. Here’s a quick start guide.

Option 1: Use the API to Create a Strata MCP Server

You can create a Strata MCP server by using the Klavis SDK. Here’s a sample code snippet in Python:

```python
from klavis import Klavis, McpServerName

# Initialize the Klavis client with your secure API key
klavis = Klavis(api_key="your-klavis-api-key")

# Create a secure, multi-tenant MCP server connection for a specific user.
# This ensures all actions are performed with the user's permissions.
strata_server = klavis.mcp_server.create_strata_server(
    user_id="github-user-123",
    servers=[McpServerName.GITHUB],  # You can combine multiple tools here, like GitHub and Jira
)

# Your LLM application now has a secure endpoint (strata_server.url)
# to interact with GitHub based on natural language prompts.
print(f"Successfully connected to Strata server at: {strata_server.url}")
```

Option 2: Use the UI to Create a Strata MCP Server

Alternatively, you can set up the MCP server through the UI in a few clicks. Search for "GitHub" in the Klavis dashboard and follow the steps to connect your GitHub account securely via OAuth. Once connected, you can generate an MCP endpoint that your LLM can use to interact with GitHub.

For a deeper dive, the Klavis GitHub MCP documentation provides comprehensive guides and examples.

Unlocking AI-Powered Workflows: 3 Powerful Use Cases for the GitHub MCP Server

The GitHub Model Context Protocol (MCP) Server is transforming the way developers interact with their repositories by connecting AI tools directly to the GitHub platform. This allows for natural language interactions to manage repositories, automate workflows, and analyze code. Here are three practical use cases that demonstrate how the GitHub MCP Server can streamline your development process.

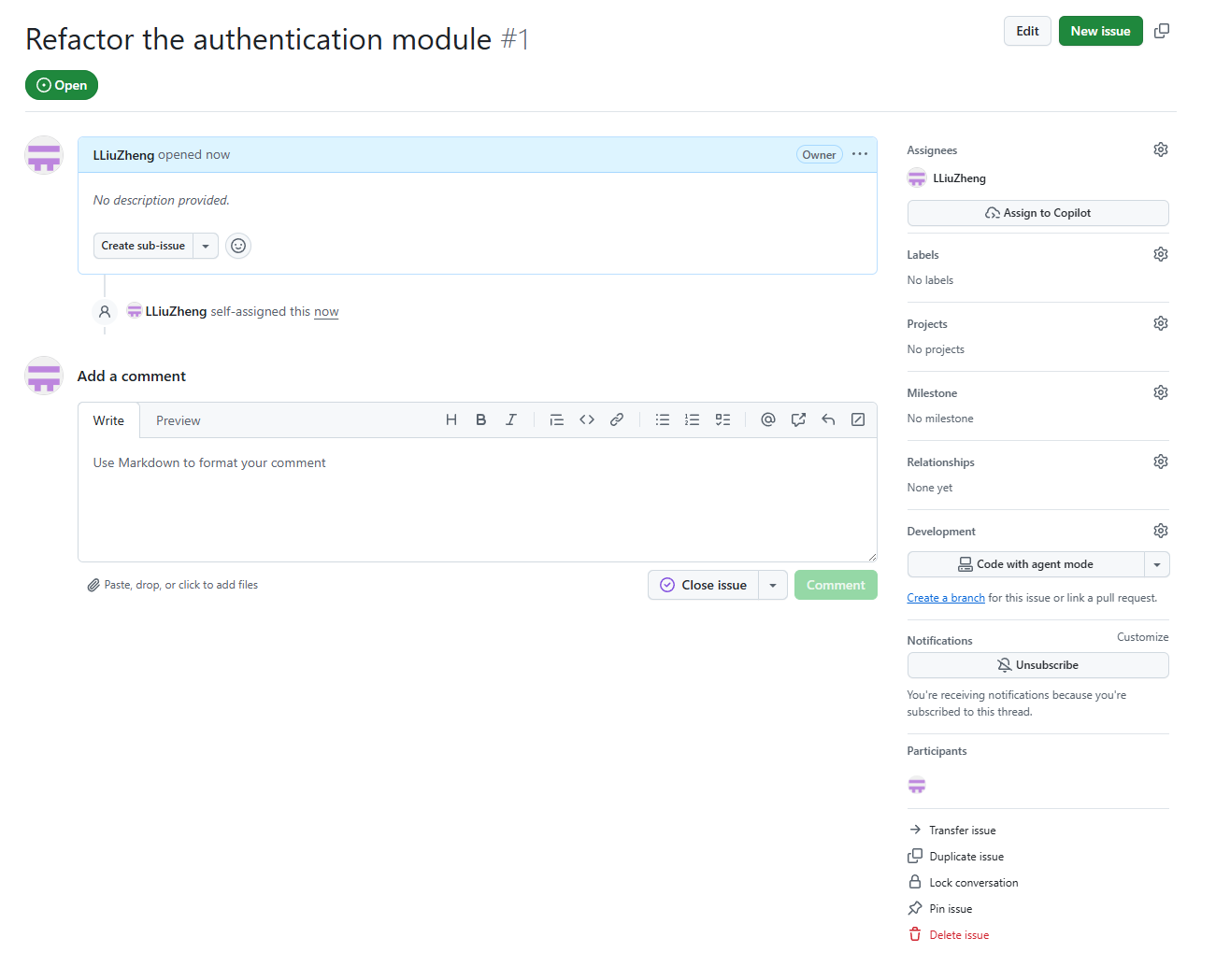

1. Automated Issue and Pull Request Management

Tired of manually creating issues for new bugs or feature requests? The GitHub MCP Server can automate this process, allowing you to create and assign issues directly from your chat-based AI assistant. This is particularly useful for quickly capturing thoughts and tasks without switching context.

Prompt:

Create a new issue in the 'test-repo' repository titled "Refactor the authentication module" and assign it to myself.

Result:

We can see the issue has been created successfully in the repository with the specified title and assignee.

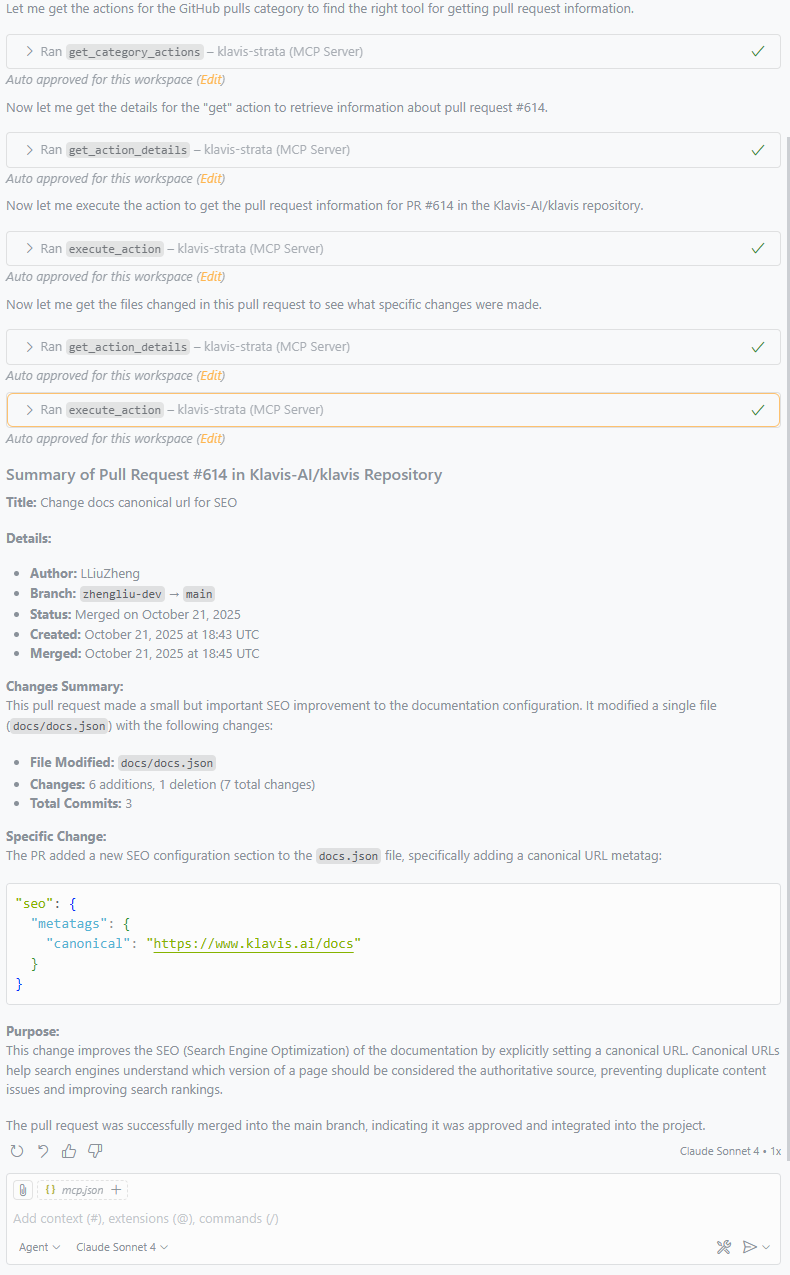
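
Under the hood, a prompt like this ends up as an MCP `tools/call` request. The sketch below builds such a request by hand to show its shape. The JSON-RPC envelope and the `params.name` / `params.arguments` layout follow the MCP specification, while the tool name `create_issue`, its argument names, and the `octocat` login are assumptions; the real tool exposed by a given server may differ.

```python
import json
from itertools import count

# Simple incrementing JSON-RPC request ids.
_request_ids = count(1)


def tools_call_request(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request for MCP's `tools/call` method."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


if __name__ == "__main__":
    request = tools_call_request(
        "create_issue",  # hypothetical tool name
        {
            "repo": "test-repo",
            "title": "Refactor the authentication module",
            "assignees": ["octocat"],  # placeholder login standing in for "myself"
        },
    )
    print(json.dumps(request, indent=2))
```

The model's job is to fill in `arguments` from the natural language prompt; the MCP server turns the request into the authenticated GitHub API call.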

2. Streamlining Code Review with AI-Powered Summaries

Code review is a critical part of the development lifecycle, but it can be time-consuming to get up to speed on the changes in a large pull request. The GitHub MCP Server can provide a concise summary of the changes in a pull request, helping reviewers quickly understand the scope and purpose of the proposed modifications.

Prompt:

Summarize the changes in pull request #614 in the 'Klavis-AI/klavis' repository.

Result:

We can see a detailed summary of the changes made in the pull request, highlighting key modifications.

We can also see the model executing the task, guided by Strata MCP's progressive discovery method.

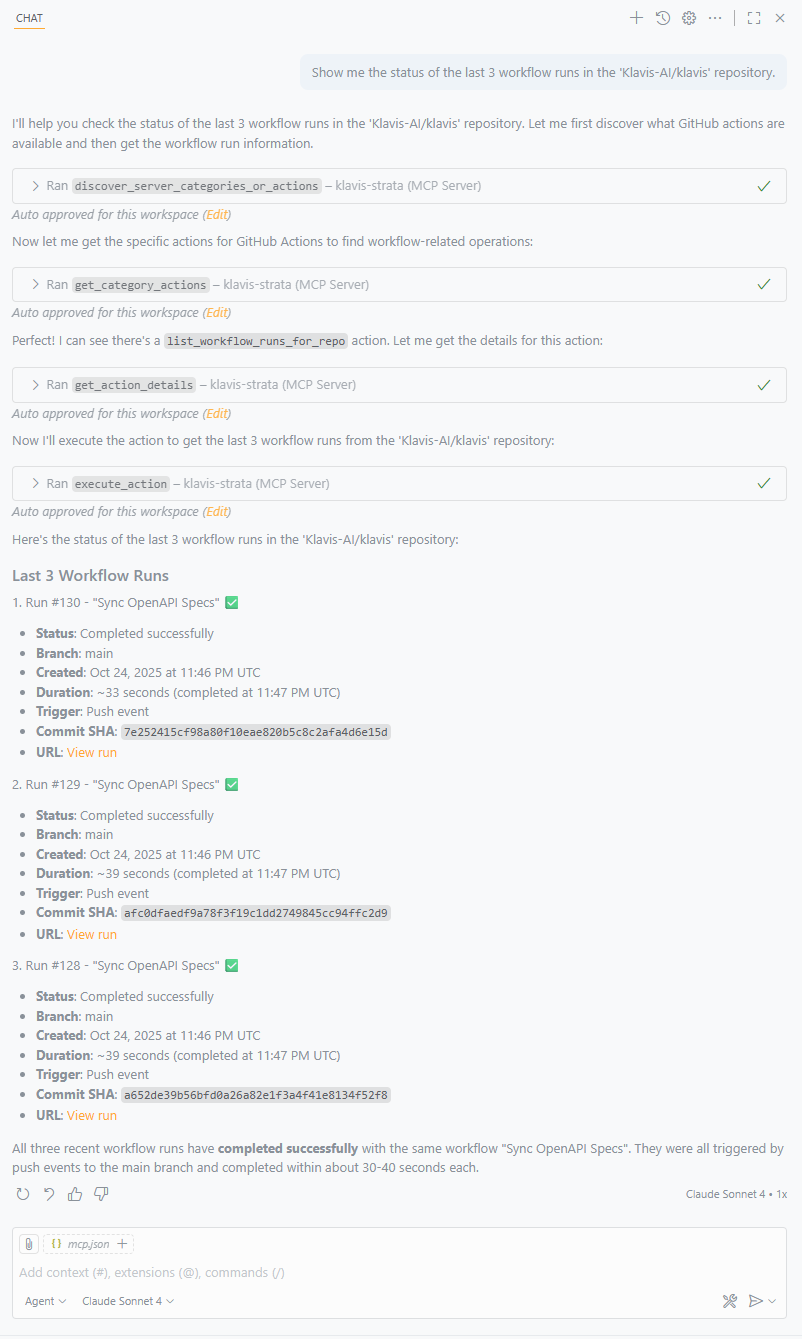
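
To give a feel for the raw material behind such a summary, here is a small sketch that aggregates per-file change counts. The field names (`filename`, `status`, `additions`, `deletions`) match what GitHub's "list pull request files" REST endpoint returns; the sample data and the helper `summarize_pr_files` are invented and are not how Strata or the model produces its summary.

```python
from collections import defaultdict

# Invented sample shaped like entries from GitHub's "list pull request files" endpoint.
SAMPLE_FILES = [
    {"filename": "auth/login.py", "status": "modified", "additions": 40, "deletions": 12},
    {"filename": "auth/tokens.py", "status": "added", "additions": 85, "deletions": 0},
    {"filename": "README.md", "status": "modified", "additions": 3, "deletions": 1},
]


def summarize_pr_files(files: list[dict]) -> dict[str, dict[str, int]]:
    """Total file count, additions, and deletions per top-level directory."""
    totals: dict[str, dict[str, int]] = defaultdict(
        lambda: {"files": 0, "additions": 0, "deletions": 0}
    )
    for entry in files:
        path = entry["filename"]
        # Files at the repository root are grouped under ".".
        top = path.split("/", 1)[0] if "/" in path else "."
        totals[top]["files"] += 1
        totals[top]["additions"] += entry["additions"]
        totals[top]["deletions"] += entry["deletions"]
    return dict(totals)


if __name__ == "__main__":
    for directory, stats in summarize_pr_files(SAMPLE_FILES).items():
        print(directory, stats)
```

A structured digest like this is what lets the model say "most of the change is in the auth module" without a reviewer opening every file.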

3. Gaining Insights into CI/CD Pipelines

Understanding the status of your continuous integration and deployment pipelines is crucial for maintaining a healthy workflow. Instead of navigating through the GitHub Actions interface, you can use the GitHub MCP Server to quickly check the status of recent workflow runs and identify any failures.

Prompt:

Show me the status of the last 3 workflow runs in the 'Klavis-AI/klavis' repository.

Result:

We can see the status of the last three workflow runs, including a link to the details of each run.
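
For reference, the data behind this answer looks roughly like the payload below. The keys (`workflow_runs`, `run_number`, `name`, `status`, `conclusion`, `created_at`, `html_url`) are those of GitHub's "list workflow runs for a repository" REST response; the sample values, the URLs, and the helper `latest_runs` are made up for this sketch.

```python
# Invented sample shaped like GitHub's "list workflow runs" response.
SAMPLE_PAYLOAD = {
    "total_count": 4,
    "workflow_runs": [
        {"run_number": 41, "name": "CI", "status": "completed", "conclusion": "success",
         "created_at": "2025-01-10T09:00:00Z",
         "html_url": "https://github.com/acme/demo/actions/runs/41"},
        {"run_number": 44, "name": "CI", "status": "in_progress", "conclusion": None,
         "created_at": "2025-01-13T09:00:00Z",
         "html_url": "https://github.com/acme/demo/actions/runs/44"},
        {"run_number": 43, "name": "CI", "status": "completed", "conclusion": "failure",
         "created_at": "2025-01-12T09:00:00Z",
         "html_url": "https://github.com/acme/demo/actions/runs/43"},
        {"run_number": 42, "name": "Deploy", "status": "completed", "conclusion": "success",
         "created_at": "2025-01-11T09:00:00Z",
         "html_url": "https://github.com/acme/demo/actions/runs/42"},
    ],
}


def latest_runs(payload: dict, limit: int = 3) -> list[str]:
    """Format the `limit` most recent workflow runs, newest first."""
    # ISO 8601 UTC timestamps sort correctly as plain strings.
    runs = sorted(payload["workflow_runs"], key=lambda r: r["created_at"], reverse=True)
    lines = []
    for run in runs[:limit]:
        # `conclusion` is null until the run completes, so fall back to `status`.
        outcome = run["conclusion"] or run["status"]
        lines.append(f'#{run["run_number"]} {run["name"]}: {outcome} ({run["html_url"]})')
    return lines


if __name__ == "__main__":
    print("\n".join(latest_runs(SAMPLE_PAYLOAD)))
```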

More Complex Workflows

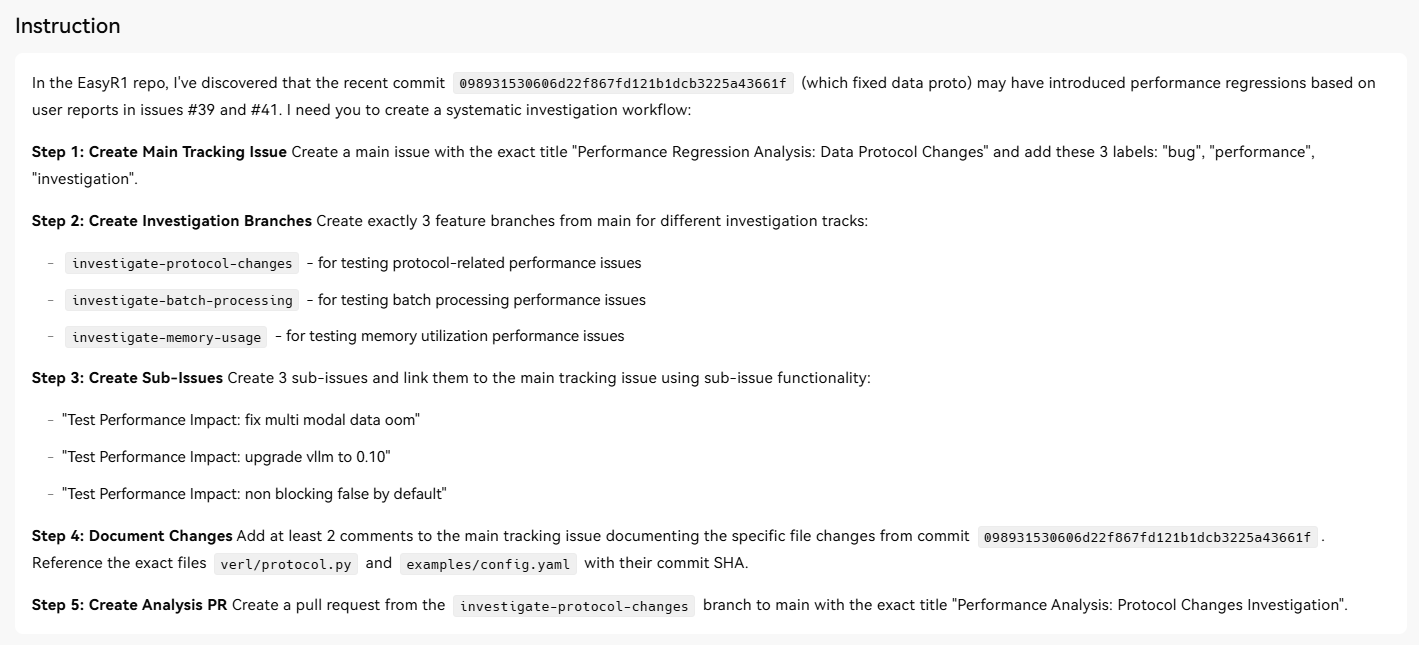

We've shown some simple examples here, but the real power of the GitHub MCP Server comes from its ability to handle more complex workflows. With guidance from Klavis Strata MCP, a model can complete a complex task like this:

A task from MCPMark successfully completed by Klavis Strata MCP

For more complex tasks, see the MCPMark GitHub Tasks. On these benchmarks, the Klavis Strata MCP server outperforms the official GitHub MCP server in both accuracy and token usage.

Conclusion: Reclaim Your Focus

Integrating LLMs with your development tools via a robust framework like MCP is not just another incremental improvement. It represents a paradigm shift in how we interact with the entire software development lifecycle. The goal is to elevate the developer experience by abstracting away the tedious, repetitive, and distracting tasks that consume our time and energy.

By using an intelligent intermediary like Klavis Strata, which solves the critical "tool overload" problem, you can create an AI assistant that is not only powerful but also reliable. This allows you to stay in a state of deep focus for longer, delegate the administrative burden, and dedicate your cognitive firepower to what truly matters: solving hard problems and building exceptional software. It's time to close the endless browser tabs and start a more productive conversation with your code.

Frequently Asked Questions (FAQs)

1. Is it secure to give an LLM access to my private GitHub repositories?

This is a critical concern, and the answer lies in the architecture. A well-designed system like Klavis AI's hosted MCP servers doesn't give the LLM carte blanche access. It uses per-user, authenticated sessions via OAuth 2.0. This means the AI agent inherits the exact permissions of the authenticated user. It cannot see a repository or perform an action that the user is not authorized to see or perform. All interactions are securely scoped, logged, and respect GitHub's native access control policies.

2. How is this different from tools like GitHub Copilot?

They are complementary, not competitive. GitHub Copilot is a phenomenal code completion tool that lives inside your IDE. Its primary function is to help you write code faster. An MCP-connected LLM is a workflow automation agent. Its function is to manage the ecosystem around your code: handling PRs, issues, repository actions, and project management. You'd use Copilot to write a new feature, and then use the MCP agent to create the PR, request reviews, and merge it, all with natural language.

3. What LLMs can I use with this kind of integration?

The Model Context Protocol is model-agnostic. You can use any capable large language model, whether it's from OpenAI (GPT-4, etc.), Anthropic (Claude 3), Google (Gemini), or even open-source models. The MCP server standardizes the communication, so as long as your chosen LLM is good at reasoning and following instructions (function calling), it can be plugged into the workflow.

4. How much technical overhead is there to get started?

The overhead can range from minimal to moderate, depending on your approach. Using a managed, hosted service like Klavis AI is the fastest route, often taking just a few minutes to set up an endpoint via a web UI or an SDK. For teams that require more control, self-hosting the open-source MCP servers is also an option, which involves a standard container deployment process. The key benefit is that you don't need to become an expert in the GitHub API itself; the MCP server handles that complexity for you.